AI Plays The Instrument From The Music

Written by Mike James

Friday, 29 December 2017

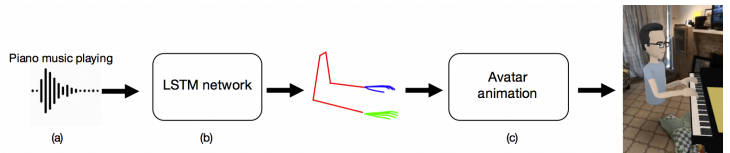

It looks as if air guitar is the next field in which AI is going to crush the puny humans. In this case it is "air" violin and piano, but the principle is the same. I guess the real question is, why is Facebook so interested?

This is yet another inverse problem, i.e. one where you work back from the data to how it was produced. In this case the data is the music and the idea is to reconstruct how the instrument was played to produce the music. A team of researchers from Washington, Stanford and Facebook has taken an LSTM - the almost paradoxically named Long Short Term Memory neural network - let it watch YouTube videos of people playing the piano and the violin, and trained it to create the correct arm movements, including wrist and finger positions.
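To make the idea concrete, here is a minimal sketch of an LSTM that turns a sequence of audio feature frames into a sequence of 2D body positions. It is not the researchers' network: the class name `TinyLSTM`, the layer sizes, the 13-value audio frames and the four keypoints are all assumptions chosen for illustration, and the weights are random rather than trained, so the output only shows the shape of the mapping, not meaningful poses.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def make_matrix(rows, cols, rng):
    # Small random weights; a real model would learn these from video data.
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class TinyLSTM:
    """Hypothetical single-layer LSTM with a linear readout (illustration only)."""

    def __init__(self, n_in, n_hidden, n_out, seed=0):
        rng = random.Random(seed)
        # One weight matrix per gate, applied to [input frame, previous hidden state].
        self.gates = {g: make_matrix(n_hidden, n_in + n_hidden, rng) for g in "ifog"}
        self.bias = {g: [0.0] * n_hidden for g in "ifog"}
        self.readout = make_matrix(n_out, n_hidden, rng)
        self.n_hidden = n_hidden

    def step(self, x, h, c):
        z = x + h  # list concatenation of input frame and hidden state
        pre = {
            g: [a + b for a, b in zip(matvec(self.gates[g], z), self.bias[g])]
            for g in "ifog"
        }
        i = [sigmoid(v) for v in pre["i"]]    # input gate
        f = [sigmoid(v) for v in pre["f"]]    # forget gate
        o = [sigmoid(v) for v in pre["o"]]    # output gate
        g = [math.tanh(v) for v in pre["g"]]  # candidate cell values
        c = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c, i, g)]
        h = [ov * math.tanh(cv) for ov, cv in zip(o, c)]
        return h, c

    def predict(self, frames):
        # The hidden and cell states carry context from earlier audio frames.
        h = [0.0] * self.n_hidden
        c = [0.0] * self.n_hidden
        poses = []
        for x in frames:
            h, c = self.step(list(x), h, c)
            poses.append(matvec(self.readout, h))  # flat list: x0, y0, x1, y1, ...
        return poses


# Assumed sizes: 13 audio features per frame, 4 keypoints with (x, y) each.
N_AUDIO, N_HIDDEN, N_KEYPOINTS = 13, 16, 4
model = TinyLSTM(N_AUDIO, N_HIDDEN, 2 * N_KEYPOINTS)

rng = random.Random(1)
audio = [[rng.uniform(-1, 1) for _ in range(N_AUDIO)] for _ in range(5)]  # fake audio
poses = model.predict(audio)
print(len(poses), len(poses[0]))
```

Each audio frame yields one pose, so five frames of sound give five sets of coordinates. Training such a network would mean adjusting the weights until the predicted coordinates match the positions extracted from real performance videos.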

This isn't "end to end" processing, as the videos were first reduced to a set of body positions using either MaskRCNN or OpenPose. In other words, during training the input to the LSTM network was the music plus positions derived from something like a Kinect skeleton of the performer. Once trained, the network outputs the positions based on the music input alone, and these can be converted into an avatar playing the music - well, pretending to play the music. See what you think of the result:

It clearly is already good enough for many applications, but what are those applications? Notice that all four of the researchers are affiliated with Facebook. What possible application could a musical instrument playing avatar have for Facebook? Apart from whipping us humans at air musical instruments, I can't think of a valid use.

It's a fun project and it's interesting to know that this particular inverse problem is largely soluble using an LSTM, but beyond this I'm not sure what it is for. Perhaps the abstract from the paper will give you food for thought:

"We present a method that gets as input an audio of violin or piano playing, and outputs a video of skeleton predictions which are further used to animate an avatar. The key idea is to create an animation of an avatar that moves their hands similarly to how a pianist or violinist would do, just from audio. Aiming for a fully detailed correct arms and fingers motion is the ultimate goal, however, it's not clear if body movement can be predicted from music at all. In this paper, we present the first result that shows that natural body dynamics can be predicted. We built an LSTM network that is trained on violin and piano recital videos uploaded to the Internet. The predicted points are applied onto a rigged avatar to create the animation."

Are we about to see musicians replaced by AI composers working with orchestras of avatars?

Related Articles

The World's Ugliest Music - More than Random

How the Music Flows from Place to Place


Last Updated ( Friday, 29 December 2017 )