More than 48 cores might be too many

Monday, 04 October 2010



Since the race to ever-faster processors was replaced by the race to cram more cores into a single chip, parallel programming has become part of everyday programming. Something we take for granted is that more cores and more threads equal better performance. Old parallel programmers will tell you that one of the first rules of parallelism is that more isn't necessarily better. Now we have some research that backs this up with data. The good news is that in the near future we can carry on as we are, but in the long-term future, when we'll be able to work with more than 48 cores, we might need something different.

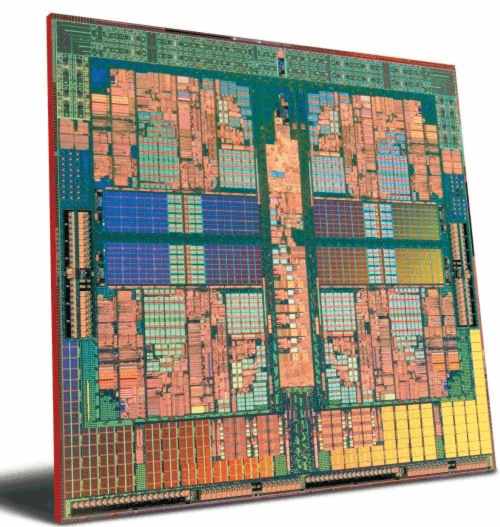

A group of MIT researchers, in a paper being presented at the USENIX Symposium on Operating Systems Design and Implementation in Toronto, argue that, for at least the next few years, the Linux operating system should be able to keep pace with changes in chip design, but that in the long term we will need to do things differently. They took a real system with eight six-core chips and used it to test the performance of applications using up to 48 cores. They found that beyond a certain number of cores, adding further cores slowed the system down rather than speeding it up. In most cases the cause was the sharing of memory.

To know when shared data can safely be deleted, the kernel keeps a central reference counter recording how many users the data has. As the number of cores, and hence threads, increases, updating this counter in a synchronised manner takes more and more time. A simple change, keeping a local count on each core that is only occasionally synchronised with the central count, sped things up. These so-called sloppy counters don't give an accurate central count at any given moment, but sooner or later the count becomes reliable, and it can then be used to decide when to delete the referenced memory.

Notice that this isn't a change to the way applications use threads but to the Linux kernel itself: 3002 lines of changes, to be exact. The changes were so extensive because reference counters are implemented in many different subsystems: directory entry objects, file system objects, network routing objects and so on.

It is also worth noting that proprietary systems with 1000 or more processors have been available, but they use modified operating systems. This study is about standard, off-the-shelf Linux on the x86 architecture. The authors of the paper conclude that this represents a small change, and partial proof that the ongoing redesign aimed at making the Linux kernel scale has more or less worked and that the general approach is good.
In other words, the only change needed was tinkering rather than a complete rewrite. With the changes, all of the applications scaled well to 48 cores. What happens beyond 48 cores is a good question: as the authors of the paper point out, different bottlenecks become important as the number of cores increases.
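The sloppy counter idea is easier to see in code. What follows is a minimal, hypothetical Python sketch that follows the article's description (a local count per core, occasionally folded into a central count); it is not the kernel's implementation, and the class name `SloppyCounter`, the `threshold` parameter, the per-core locks and the helper `take_references` are all assumptions made for illustration. Python threads won't reproduce the real performance effect, so the point is only the data structure: each core updates its own slot and touches the shared count just once every `threshold` updates.

```python
import threading


class SloppyCounter:
    """Hypothetical sketch of a sloppy counter: a shared central count plus
    one local delta per core that is only occasionally folded into it."""

    def __init__(self, num_cores: int, threshold: int = 8) -> None:
        self.threshold = threshold            # flush a local delta once |delta| reaches this
        self.central = 0                      # shared count: cheap to read, possibly stale
        self.central_lock = threading.Lock()
        self.local = [0] * num_cores          # one pending delta per core
        # Per-core locks (an assumption of this sketch): cores never contend
        # with each other on the fast path, only on the occasional flush.
        self.local_locks = [threading.Lock() for _ in range(num_cores)]

    def add(self, core: int, delta: int) -> None:
        """Take (+1) or drop (-1) references on behalf of `core`."""
        with self.local_locks[core]:
            self.local[core] += delta
            if abs(self.local[core]) >= self.threshold:
                # Occasional synchronisation with the central count.
                with self.central_lock:
                    self.central += self.local[core]
                self.local[core] = 0

    def approximate(self) -> int:
        """Read only the central count; it may differ from the true total."""
        with self.central_lock:
            return self.central

    def exact(self) -> int:
        """Fold every local delta into the central count and return the total.

        Only exact if no other thread updates the counter meanwhile, e.g. once
        nobody else can obtain a new reference to the object.
        """
        for core, lock in enumerate(self.local_locks):
            with lock, self.central_lock:     # same lock order as add(): no deadlock
                self.central += self.local[core]
                self.local[core] = 0
        with self.central_lock:
            return self.central

    def can_free(self) -> bool:
        """The referenced memory may be deleted once the exact count is zero."""
        return self.exact() == 0


def take_references(counter: SloppyCounter, core: int, n: int) -> None:
    """Hypothetical worker: take n references on one core."""
    for _ in range(n):
        counter.add(core, +1)


if __name__ == "__main__":
    cores, per_core = 4, 1003
    counter = SloppyCounter(num_cores=cores, threshold=8)
    workers = [threading.Thread(target=take_references, args=(counter, c, per_core))
               for c in range(cores)]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    print(counter.approximate())  # 4000: each core still holds 3 unflushed references
    print(counter.exact())        # 4012
```

Raising `threshold` means cores touch the shared count less often, at the price of a central value that can drift further from the true count. The `exact()` read is only trustworthy once no other thread can take or drop references, which is roughly the situation when deciding whether an object can be deleted.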



Last Updated (Monday, 04 October 2010)