| iNaturalist Kaggle Contest |

| Written by Sue Gee |

| Tuesday, 13 March 2018 |

|

Google has announced the 2018 iNaturalist Challenge being run for the 5th International Workshop on Fine Grained Visual Categorization (FGVC5) and now underway on Kaggle. It is a "long tail" species classification competition, which poses particular challenges for machine learning.

The FGVC5 site explains the relevance of this contest, and the related iMaterialist Challenge for identifying furniture and Home Goods which is also on Kaggle, to its area of interest:

"Fine categorization, i.e., the fine distinction into species of animals and plants, of car and motorcycle models, of architectural styles, etc., is one of the most interesting and useful open problems that the machine vision community is just beginning to address. Aspects of fine categorization (called 'subordinate categorization' in the psychology literature) are discrimination of related categories, taxonomization, and discriminative vs. generative learning. Fine categorization lies in the continuum between basic level categorization (object recognition) and identification of individuals (face recognition, biometrics). The visual distinctions between similar categories are often quite subtle and therefore difficult to address with today’s general-purpose object recognition machinery. It is likely that radical re-thinking of some of the matching and learning algorithms and models that are currently used for visual recognition will be needed to approach fine categorization."

Teams with top submissions to both iNaturalist and iMaterialist will be invited to present their work live at the FGVC5 workshop on June 22, which is being run alongside the CVPR 2018 conference taking place in Salt Lake City, Utah.

On the Google blog, Yang Song, Staff Software Engineer and Serge Belongie, Visiting Faculty, Google Research, note:

"For computers, discriminating fine-grained categories is challenging because many categories have relatively few training examples (i.e., the long tail problem), the examples that do exist often lack authoritative training labels, and there is variability in illumination, viewing angle and object occlusion."

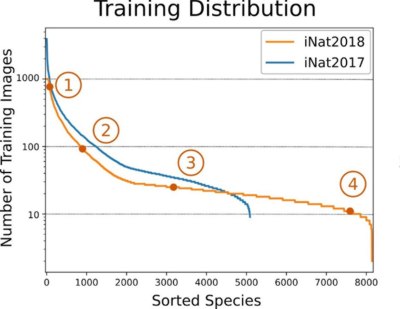

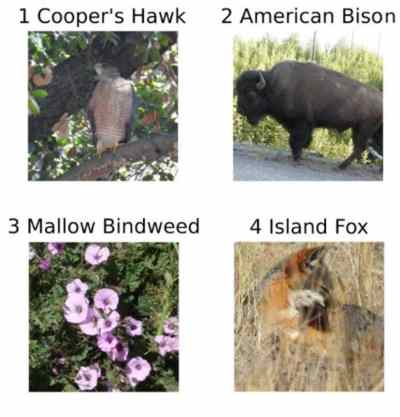

This is the second iNaturalist challenge and, as the above graph shows, this means a bigger dataset with an even longer tail. The iNat Challenge 2018 dataset contains over 8,000 species, with a combined training and validation set of 450,000 images that have been collected and verified by multiple users from iNaturalist. The dataset features many visually similar species, captured in a wide variety of situations, from all over the world. Training data, annotations, and links to pretrained models can be found on the inat_comp GitHub repo.

The contest is now open on Kaggle, with an entry deadline of May 28th and final submissions due on June 4th. So far 14 teams have registered, far fewer than the 127 for the Google Landmark Challenge, which is running simultaneously and is also within the remit of CVPR 2018. And with other Kaggle contests attracting hundreds, and some thousands, of competitors, the turnout of just 20 participants last year is very disappointing.

The problem, it seems, according to a discussion thread, is that the Kaggle organizers have decreed that ranking points won't be awarded for this challenge. This comment from Kaggle member Shashank Shekhare sums up the sentiment on the thread:

"It appears as if there is no incentive to take part in this competition. While I agree ... that research competitions typically don't offer ranking points but most of them do offer prizes for top performing systems. Another CVPR workshop based competition running simultaneously (Landmark Recognition and Retrieval) has enabled ranking points and has prizes too for the best systems."

I Programmer has to admit a vested interest in this contest. Some of our team are also iNaturalist members and some photos we have taken may even be part of the dataset. It's very gratifying to submit an observation of something you've never seen before and have it identified by crowd knowledge. But hit the long tail and discover that no one else can recognize it either and you wish for a more perfect system - which hopefully machine learning can provide.

More Information

iNaturalist Challenge at FGVC5

Introducing the iNaturalist 2018 Challenge

Related Articles

iNaturalist Launches Deep Learning-Based Identification App

Kaggle Enveloped By Google Cloud

Google's New Contributions to Landmark Recognition

Google Provides Free Machine Learning For All

|

| Last Updated ( Tuesday, 13 March 2018 ) |