Kinect for Windows Near Mode

Written by Harry Fairhead

Tuesday, 24 January 2012

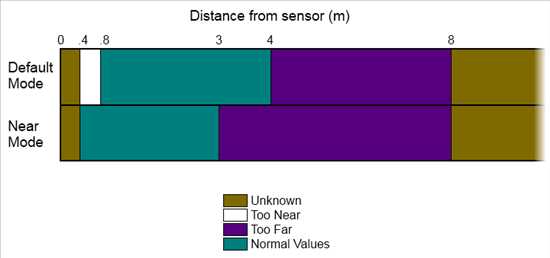

The about-to-be-launched Kinect for Windows tackles one of the Kinect's basic problems, shortsightedness, by introducing Near Mode. But does it do enough to be really useful?

One of the problems in using Kinect for Xbox is that it doesn't work when you get too close and it doesn't work when you get too far away. It works well for detecting players in a fairly large room, which is what it was designed for. This only became a problem when we started to use the Kinect with a PC to implement all sorts of clever systems. For example, a Kinect on top of a mobile robot platform would be much more useful if it could detect and map objects that are close.

The Kinect for Windows is very likely going to be used for both close-up work and far-away player detection. To make this possible it can be operated in either a near or a far mode. Near mode works down to 50cm or closer, and this really does make it useful for robotic systems and 3D scanner applications.

You can buy lenses that modify the depth perception of a Kinect, but this is not how near mode has been implemented. Instead the changes are to the firmware and to the accuracy with which the sensor is set up on the production line. The original Kinect was capable of working down to 50cm but only "as long as the light is right". To stop you from using it in areas where its output was variable, the beta SDK returned a zero distance in these regions, effectively signaling an "I give up" from the Kinect. The new firmware is optimized to work in either near or far mode, and it now returns results even in the areas where it doesn't always get it right. Hence in near mode you could get sensible readings down to 40cm in good conditions.

Of course there is a trade-off. In near mode the Kinect cannot see much beyond 2 to 3 meters, compared to 4 meters in far mode.

How well the Kinect works in each of its modes is a bit complicated, so the Microsoft engineers involved have summarized it in a diagram.
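As a rough companion to the figures quoted in the text, the trade-off between the two modes can be sketched in a few lines of Python. This is an illustration only and not the SDK's API: the names `DepthRange`, `RANGES`, `classify` and `usable_modes` are hypothetical, the near-mode limits use the 40cm and 3m figures mentioned above, and the 80cm lower bound for far mode is an assumption that the text does not state.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DepthRange:
    """Closest and farthest distance (in millimeters) treated as reliable."""
    min_mm: int
    max_mm: int


# Illustrative limits only. Near mode: 40cm (good conditions) to 3m.
# Far mode: up to 4m; the 800mm lower bound is an ASSUMPTION.
RANGES = {
    "near": DepthRange(min_mm=400, max_mm=3000),
    "far": DepthRange(min_mm=800, max_mm=4000),
}


def classify(distance_mm: int, mode: str) -> str:
    """Return the zone a true distance would fall in for the given mode."""
    limits = RANGES[mode]
    if distance_mm < limits.min_mm:
        return "too_near"
    if distance_mm > limits.max_mm:
        return "too_far"
    return "normal"


def usable_modes(distance_mm: int) -> list:
    """List the modes in which the distance lies inside the normal zone."""
    return [mode for mode in RANGES if classify(distance_mm, mode) == "normal"]
```

Under these assumed limits, an object at 45cm is only measurable in near mode and an object at 3.5m only in far mode, while anything between 80cm and 3m can be measured in either.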

The Kinect will return a depth value in the normal zone; elsewhere it returns a value that indicates which zone the reading falls in.

In addition, near mode is limited in its tracking abilities. It will return a player index mask, but it only returns the position of the center hip joint and not an entire skeleton. There is hope that full skeleton tracking might be supported in later versions.

So what does this all mean?

The main thing to realize is that in near mode you have a depth sensor, but not one that can give you the positions of the players' limbs. More importantly, you won't be able to track hand movements unless you do a lot of work. As hand gestures are an obvious potential use for a closer Kinect, this is disappointing.

Equally, if you are using the Kinect for distance sensing on a mobile robot, you are going to have problems implementing algorithms that cope in a meaningful way with pixels that report being closer than 50cm.

If you really need a near mode that has full functionality, you are probably going to do better with some distance-changing lens for the device.
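For the mobile-robot case, one conservative way to cope with readings that only report a zone rather than a distance is sketched below in Python. It is a sketch under stated assumptions, not the SDK's behavior: the sentinel codes `TOO_NEAR`, `TOO_FAR` and `UNKNOWN`, the 400mm minimum range and the helper names are all hypothetical. The idea is that a "too near" pixel still tells you something: the object is no farther away than the sensor's minimum range, so it can be treated as an obstacle at that distance.

```python
from typing import Iterable, Optional

# Hypothetical sentinel codes standing in for "which zone" values.
# The real SDK's constants may differ; these are assumptions.
TOO_NEAR = -1
TOO_FAR = -2
UNKNOWN = 0

# Assumed near-mode minimum range in millimeters (40cm in good conditions).
NEAR_MODE_MIN_MM = 400


def nearest_obstacle_mm(
    readings: Iterable[int], min_range_mm: int = NEAR_MODE_MIN_MM
) -> Optional[int]:
    """Return a conservative estimate of the closest obstacle, or None.

    A TOO_NEAR reading is treated as an obstacle at the minimum range,
    which is an upper bound on its true distance. TOO_FAR and UNKNOWN
    readings carry no usable distance and are skipped.
    """
    nearest = None
    for value in readings:
        if value == TOO_NEAR:
            candidate = min_range_mm
        elif value in (TOO_FAR, UNKNOWN):
            continue
        else:
            candidate = value
        if nearest is None or candidate < nearest:
            nearest = candidate
    return nearest


def should_stop(readings: Iterable[int], stop_distance_mm: int = 500) -> bool:
    """Stop if anything is at, or possibly within, the stop distance."""
    nearest = nearest_obstacle_mm(readings)
    return nearest is not None and nearest <= stop_distance_mm
```

This only decides whether something is close; it cannot say how close, which is exactly the limitation described above. Mapping objects inside the minimum range would still need another sensor or a modified lens.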


Last Updated (Tuesday, 24 January 2012)