LangGrant Announces MCP Server

Written by Kay Ewbank

Tuesday, 09 December 2025

LangGrant has announced an MCP server that enables LLMs to reason across multiple enterprise databases and generate multi-step analytics plans without transmitting raw data to the model. The system works entirely with metadata and schema context.

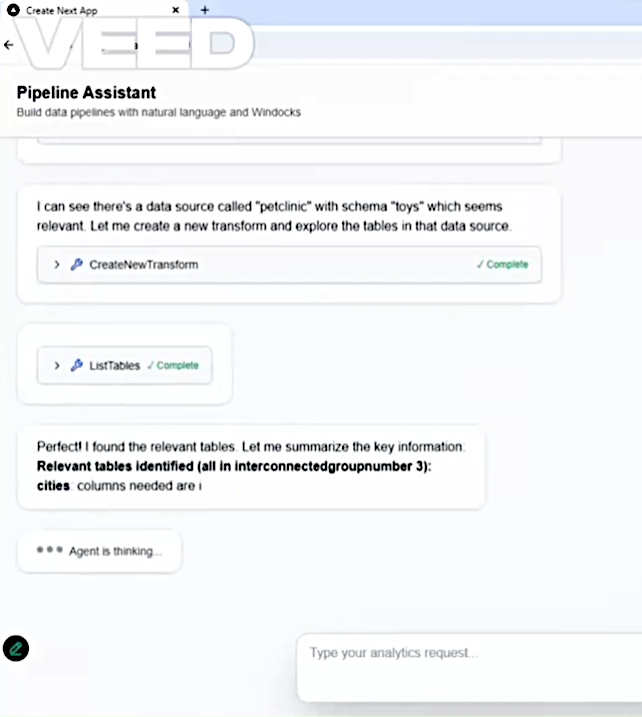

LangGrant was originally known as Windocks, and its products cover database modernization and synthetic data, including cross-platform database subsetting, synthetic data generation, and database movement or migration. The enterprise edition of Windocks supports SQL Server containers and database cloning (or virtualization), delivering complete writable database environments that can include subsets, synthetic data, and migrated databases.

The company has now launched the LEDGE MCP Server, a platform that enables LLMs to reason across multiple databases at scale. The server can also execute multi-step analytics plans and accelerate agentic AI development, and it does this without sending data to the LLM or breaching governed boundaries. LangGrant says LEDGE enables LLMs to deliver accurate results for analytical queries in minutes, a task that it says usually takes weeks, even with AI-powered coding tools.

Ramesh Parameswaran, LangGrant's CEO, CTO, and co-founder, said: "The LEDGE MCP Server removes the friction between LLMs and enterprise data. With this release, enterprises can apply agentic AI directly to existing database environments like Oracle, SQL Server, Postgres, Snowflake — securely, cost-effectively, and with full human oversight."

The LangGrant team says that for context engineering to fulfil its potential in extracting value from existing enterprise data assets, several technical challenges must be addressed. Context engineering is the practice of identifying and supplying the right information to Large Language Models (LLMs) so that they understand what is required of them, make better decisions, and deliver contextual, enterprise-aligned outcomes without relying on manually written prompts.

According to LangGrant, security and governance policies currently block LLM adoption, because enterprises cannot permit direct access to their data or allow it to move outside governed systems. The cost of pushing raw data into LLMs for analysis is another obstacle, and agent developers need production-like data but lack a safe, on-demand way to clone complex enterprise databases. Finally, enterprise databases are not designed for LLM consumption, so software engineers are left doing context engineering by hand.

The LangGrant LEDGE MCP Server is designed to overcome these limitations. It orchestrates LLMs to deliver accurate results while still complying with enterprise data policies. Analytics and reasoning are carried out using metadata and schema context, which avoids transmitting raw data or large payloads to the LLM and so lowers token costs. LEDGE MCP automates query planning and orchestration, autonomously generating precise, multi-stage analytics workflows; LangGrant says this eliminates manual scripting and reduces the risk of LLM hallucination in query generation.

The server also lets developers provision isolated, production-like clones and containers for developing, testing, and tuning AI agents, and it automatically maps schemas, relationships, and metadata so that LLMs can "see" the entire data landscape without reading the underlying data. The LangGrant LEDGE MCP Server is now available for trial.
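LangGrant has not published how LEDGE builds its schema context, but the general idea of giving a model metadata rather than rows can be illustrated in a few lines. The following is a minimal sketch under stated assumptions, not LEDGE's implementation: it uses Python's standard-library sqlite3 module as a stand-in for an enterprise database, and the function name schema_context is hypothetical. It reads only table, column, and foreign-key metadata, so no row values end up in the text that could be handed to an LLM.

```python
import sqlite3


def schema_context(conn: sqlite3.Connection) -> str:
    """Hypothetical helper: describe tables, columns and foreign keys
    without reading any row data (illustration only, not LEDGE's API)."""
    lines = []
    # List user tables from the catalog; this touches metadata only.
    tables = [
        row[0]
        for row in conn.execute(
            "SELECT name FROM sqlite_master "
            "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name"
        )
    ]
    for table in tables:
        # PRAGMA table_info rows: (cid, name, type, notnull, dflt_value, pk)
        cols = conn.execute(f"PRAGMA table_info('{table}')").fetchall()
        col_desc = ", ".join(
            f"{c[1]} {c[2]}{' PK' if c[5] else ''}" for c in cols
        )
        lines.append(f"table {table}({col_desc})")
        # PRAGMA foreign_key_list rows:
        # (id, seq, table, from, to, on_update, on_delete, match)
        for fk in conn.execute(f"PRAGMA foreign_key_list('{table}')").fetchall():
            lines.append(f"  {table}.{fk[3]} -> {fk[2]}.{fk[4]}")
    return "\n".join(lines)


# Usage: a tiny in-memory database with some (sensitive) rows.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER REFERENCES customers(id),
        total REAL
    );
    INSERT INTO customers VALUES (1, 'Alice');
    INSERT INTO orders VALUES (10, 1, 99.5);
    """
)
ctx = schema_context(conn)
print(ctx)
```

The resulting text names the tables, their column types, and the join path from orders to customers, which is the kind of structural context a model needs to plan a query, while the customer name and order total never leave the database.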


Last Updated: Tuesday, 09 December 2025