| IBM's TrueNorth Rat Brain |

| Written by Mike James |

| Wednesday, 30 September 2015 |


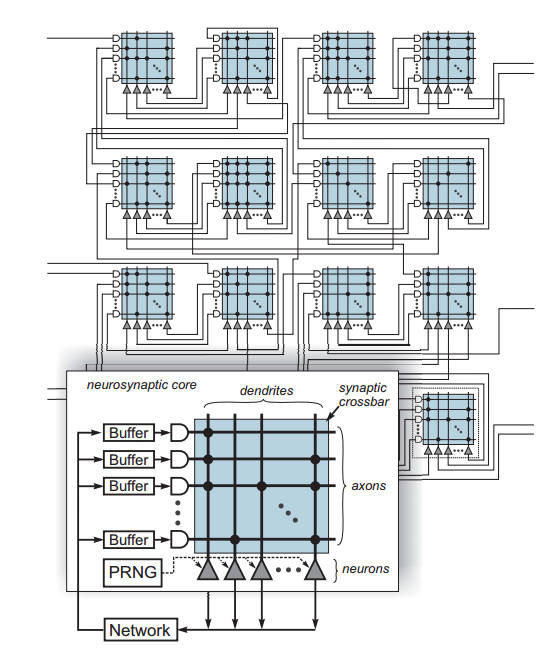

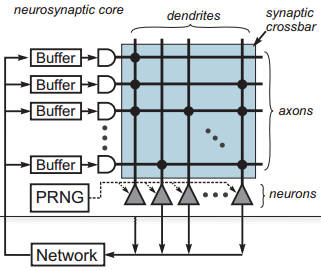

IBM's TrueNorth project has reached a new stage - enough neurons to be comparable to a rat brain. Is this the future of AI? The obvious answer is "yes", but the TrueNorth project is a more subtle proposition than you might think.

All of the big breakthroughs in what neural networks can do that you have been reading about are based on a simple model of an artificial neuron. The inputs to the neuron are continuous; the neuron sums them using a set of weights that represent what it has learned and outputs a simple function of that weighted sum. IBM's TrueNorth chip isn't quite like this - it is a spiking neural network where the activation is proportional to the rate of impulses a neuron generates and receives. This sort of network is claimed to be closer to the way a biological neuron works, but much less is known about its properties and it is much harder to work with. In fact, its use can be an indication that the work is a bit too close to biology and is invoking magic rather than science.

In this case, however, despite the hype and the use of biological terms such as white matter and grey matter, there does seem to be something worthwhile going on. The TrueNorth chip has 64x64 cores, each consisting of 256 "neurons" connected by a binary crossbar array. Within a core, the output of any neuron can be connected to the input of any other, and this is an analog of the short range connections in the cortex. Long range connections are made between chips on a point-to-point basis rather than by a crossbar array.
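To make the contrast between the two neuron models concrete, here is a minimal Python sketch. The function names, the sigmoid activation and the Bernoulli spike encoding are all assumptions made for illustration; none of this is TrueNorth's actual neuron model or IBM's tooling. It simply shows a conventional neuron producing a continuous output, and that same value being represented as a firing rate instead.

```python
import math
import random


def artificial_neuron(inputs, weights, bias=0.0):
    """Conventional model: weighted sum of continuous inputs, then a sigmoid."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-total))


def rate_coded_spikes(activation, steps, rng):
    """Illustrative rate coding (an assumption, not IBM's scheme):
    at each time step the neuron fires with probability `activation`."""
    return [1 if rng.random() < activation else 0 for _ in range(steps)]


def decode_rate(spikes):
    """Recover an approximate activation as the fraction of steps with a spike."""
    return sum(spikes) / len(spikes)


# A continuous activation and its approximate spike-rate equivalent.
activation = artificial_neuron([0.5, 1.0], [0.8, -0.2])
spikes = rate_coded_spikes(activation, steps=1000, rng=random.Random(0))
print(round(activation, 3), decode_rate(spikes))
```

The decoded rate only approximates the continuous value, and the approximation improves with the number of time steps observed, which hints at why spiking networks are harder to reason about than their continuous counterparts.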

The programmability comes from being able to control which neuron is connected to which neuron using the binary crossbar. Activity is synchronous with a 1ms time step and a neuron either fires or it doesn't in each time step. The initial state of the neuron and its threshold can be programmed. The neuron activation decays with time and there is a random number generator which can introduce some randomness. The output spikes are stored in a buffer and subject to a programmable delay to simulate the propagation time in a real neural network. Long distance connections between cores are made by a routing system. When a neuron on a core spikes, it looks up an address which is then used to route a signal to the destination core, where it finally reaches the intended target neuron via the crossbar. Each of the chip's 4,096 cores holds 256 neurons with 256 possible inputs apiece, so each TrueNorth chip has about 1 million neurons and 256 million synapses. The real question is how to put this to work.
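The behaviour described above can be sketched as a toy simulation. This is a deliberately simplified, hypothetical model: the `ToyCore` class, its unit-sized inputs, its reset-to-zero rule and its fixed leak are assumptions for illustration, and the random number generator, delay buffer and inter-core routing are left out. It is not IBM's neuron equations or programming interface.

```python
class ToyCore:
    """Toy model of one core: a binary crossbar feeding threshold neurons."""

    def __init__(self, crossbar, threshold, leak=0, initial_potential=0):
        # crossbar[i][j] == 1 means input line i is connected to neuron j.
        self.crossbar = crossbar
        self.num_neurons = len(crossbar[0])
        self.threshold = threshold
        self.leak = leak
        self.potential = [initial_potential] * self.num_neurons

    def tick(self, input_spikes):
        """Advance one time step; return a 0/1 list of which neurons fired."""
        fired = []
        for j in range(self.num_neurons):
            # Each spiking input that is connected to neuron j adds one unit.
            drive = sum(s for i, s in enumerate(input_spikes)
                        if self.crossbar[i][j])
            v = self.potential[j] + drive
            if v >= self.threshold:
                fired.append(1)
                v = 0                      # reset after firing
            else:
                fired.append(0)
                v = max(v - self.leak, 0)  # activation decays with time
            self.potential[j] = v
        return fired


# Three input lines, two neurons.
crossbar = [[1, 0],
            [1, 1],
            [0, 1]]
core = ToyCore(crossbar, threshold=2, leak=1)
print(core.tick([1, 1, 0]))  # [1, 0]
print(core.tick([0, 1, 1]))  # [0, 1]

# Chip-level counts implied by 64x64 cores of 256 neurons with 256 inputs each.
cores = 64 * 64
print(cores * 256)        # 1048576 neurons
print(cores * 256 * 256)  # 268435456 synapses
```

With `leak=1`, a single input spike per step never accumulates enough to reach the threshold of 2, whereas with `leak=0` the same input makes both connected neurons fire on the second step. Changing the crossbar entries is the toy equivalent of reprogramming which neuron listens to which input.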

To help answer the question, 48 TrueNorth chips have been assembled into a larger "brain" consisting of 48 million neurons - roughly the number that you would find packed into the brain of a rodent - for a recent boot camp designed to get people using the new approach. The big problem is that the learning algorithms used in "deep learning" are not directly applicable to a spiking network because they rely on the gradient of a neuron's output with respect to its input weights. In the case of a spiking network it is difficult to see what the gradient corresponds to, and in this particular case there are no weights, only binary connections and a threshold. However, judging by the reports from the boot camp, it seems that progress has been made. There are learning algorithms for spiking neural nets based on the timing of the spikes - Spike-Timing-Dependent Plasticity (STDP) for example - but it isn't clear if the TrueNorth architecture is suitable for these approaches. The one account we have from the boot camp outlines how the network was used for language processing:

In both cases the problem of training the network has been avoided by training a "traditional" neural network and then transferring the learned weights to a TrueNorth network. This is both good news and bad news. As it stands, TrueNorth is clearly capable of implementing what has been learned from a deep neural network, and it can do it fast and with low power consumption. What it does not seem able to do is learn on the job. Once TrueNorth is set up it simply implements the learned model and has no plasticity of its own. Clearly this is a step in the right direction but not a big enough one just yet. IBM have built a rat brain that seems to be destined to repeat its mistakes rather than learning from its history.
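The reason training is done on a conventional network first can be illustrated numerically. The sketch below is not taken from the boot camp reports; the function names and the example values are assumptions. It estimates the gradient of a neuron's output with respect to its weights for a sigmoid neuron and for a fire-or-not threshold neuron.

```python
import math


def weighted_sum(weights, inputs):
    return sum(w * x for w, x in zip(weights, inputs))


def sigmoid_neuron(weights, inputs):
    """Continuous neuron of the kind used in deep learning."""
    return 1.0 / (1.0 + math.exp(-weighted_sum(weights, inputs)))


def threshold_neuron(weights, inputs, threshold=1.0):
    """All-or-nothing neuron: fires (1.0) or stays silent (0.0)."""
    return 1.0 if weighted_sum(weights, inputs) >= threshold else 0.0


def numerical_gradient(neuron, weights, inputs, eps=1e-4):
    """Central-difference estimate of d(output)/d(weight) for each weight."""
    grads = []
    for i in range(len(weights)):
        up = list(weights)
        down = list(weights)
        up[i] += eps
        down[i] -= eps
        grads.append((neuron(up, inputs) - neuron(down, inputs)) / (2 * eps))
    return grads


weights = [0.5, -0.3]
inputs = [1.0, 2.0]
print(numerical_gradient(sigmoid_neuron, weights, inputs))    # non-zero values
print(numerical_gradient(threshold_neuron, weights, inputs))  # [0.0, 0.0]
```

The sigmoid neuron gives gradient descent a direction in which to nudge each weight. The threshold neuron's output does not change at all under a small nudge unless the sum happens to sit exactly at the threshold, so the gradient carries no information, which is why learning with a conventional network and copying the result across is the pragmatic route.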



| Last Updated ( Monday, 05 October 2015 ) |