Turing's Red Flag

Written by Mike James

Wednesday, 02 December 2015


You have almost certainly heard of the Turing Test, but now it is suggested that we need something more, Turing's Red Flag, to protect us from AIs that aim to pass the original test.

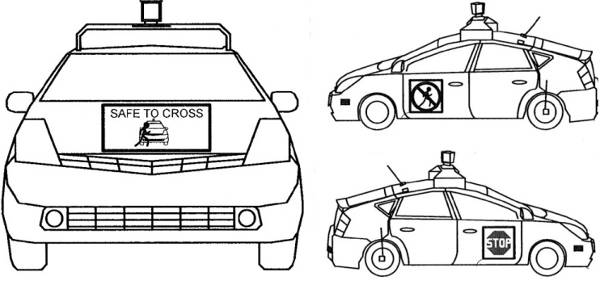

As AI becomes increasingly mainstream, we often have difficulty telling the human from the non-human. You may have grown so accustomed to asking Siri or Cortana questions that you forget they are just programs. Worse, some innocent users may never have realized that they are AI. If you ask Siri whether she (or it) is a computer, it comes back with the cute answer: "I can neither confirm nor deny my current existential status." If you pursue the matter, the answers become increasingly evasive. This, as I said, is cute, but it is also dishonest and potentially dangerous. What if a user really believed Siri was a human intelligence? They might put more faith in her opinions than they deserve.

Similarly, there are cases where knowing that you are up against an AI would make you think twice about trying to beat it. If you are playing poker with a bot, you might want to know before betting your life savings. And what about driverless cars: do you really want them to hide the fact that they are driverless? The fact of the matter is that AI is not yet equal to human intelligence. In many situations it is worse than a human and cannot be trusted; in others it is far better than the average person.

To help us avoid making mistakes because we don't know whether we are interacting with a human or an AI agent, computer scientist Toby Walsh at the University of New South Wales suggests the Turing Red Flag Law:

"Turing Red Flag law: An autonomous system should be designed so that it is unlikely to be mistaken for anything besides an autonomous system, and should identify itself at the start of any interaction with another agent."

The name comes from UK law: the Locomotive Act of 1865 was intended to protect pedestrians from the dangerous mechanically propelled vehicles that were new to road traffic. It required a person carrying a red flag to walk in front of the vehicle so that everyone knew danger was coming.
The original red flag law may have hindered the technology more than safety required, but the Turing version is a more serious proposition. We humans really do need to modify our behavior to take account of the type of agent we are interacting with. There are already indications that self-driving cars need humans to treat them differently. Self-driving cars are designed to drive more defensively than a human driver, so at a junction an impatient cyclist can keep one dithering by inching forward just as the car is about to move off. Google has even patented various ways for the car to communicate not only the fact that it is self-driving but also what it intends to do next: basically, a video monitor relays messages to the humans.

Google may be doing the "right" thing, but the rest of us in AI aren't quite so ready to put a red flag in front of our programs. The goal of many, perhaps most, AI projects is the dream of strong AI: AI that is so good you really can't distinguish it from the real thing. This sort of AI is going to pass the Turing Test, unless of course it has a red flag sticking out in front of it. Let's face it, Siri's response isn't just a bit of fun; it's a small expression of the desire that lurks in every AI researcher to actually fool the user and pass the Turing Test at long last.

There are lots of interesting examples in the original paper, which is well worth reading, and I'm sure you can add your own examples of situations where you would behave differently once you realized you were interacting with an AI.

However, things are slightly more complex than you might think. At the moment we are mostly thinking of the flag as a warning that you are about to interact with an AI that isn't quite up to the job. It tells you how much to trust it, to speak slowly and clearly, not to fool about by being impatient or aggressive at a traffic junction, and so on. But what about when AIs get so good that they are better than us? Surely then it will be time to repeal the red flag law? Not so fast. Remember the example of not betting all your cash against a poker bot? In the future you will probably want to know that there is an AI behind the thinking so that you can avoid going up against it. The flag might also save you from ignoring good advice simply because you thought it came from a human.

It seems Toby Walsh's Turing Red Flag Law is going to have to be a permanent feature of AI, and it should be added to Asimov's Laws of Robotics.

More Information

Turing's Red Flag, Toby Walsh, University of New South Wales and NICTA, Sydney, Australia

Related Articles

Halting Problem Used To Prove A Robot Cannot Computably Kill A Human

Survey Reveals American Public Suspicious Of Tech


Last Updated: Sunday, 31 July 2016