Google Clips - The Death of the Photographer

Written by David Conrad

Sunday, 20 May 2018

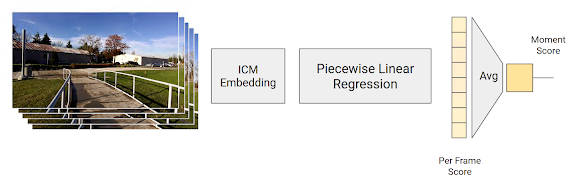

Google has invented a camera that can do what a photographer, or should that be videographer, does - pick the moment. It is the last part of photography that humans were needed for. How it works is an interesting story that might have applications in other areas.

Photography is a strange beast. Is it art or isn't it? You can argue the point until you are tired and really don't care any more, but getting an answer is just a matter of looking at a photo taken by Cartier-Bresson or Ansel Adams. At its best photography is like art because it evokes the same sort of responses in us that other things called art do.

This doesn't settle all of the questions, however. It could just be that photography is a matter of selecting the moment. As Cartier-Bresson is well known to have said:

"To me, photography is the simultaneous recognition, in a fraction of a second, of the significance of an event as well as of a precise organization of forms which give that event its proper expression."

Yes, it is the selection of the moment. One way of doing the job, and I've known photographers who work in this way, is to take thousands of photos and throw most of them away. In this sense photography isn't a creative art; it is just a matter of knowing when a picture is good - it's a decision art.

Google and its AI research team really do think that photography is about deciding what makes a good picture, and that this decision can be automated.

Over time photography has come to demand less and less skill. First automatic exposure took away the skill of setting up the recording equipment, and then automatic focus made it easy to focus on the foreground object. AI-driven focus even does away with the need to manually select what should be in focus. All that is left is choosing the moment to press the shutter or record button.

Google's Clips app doesn't quite create a Cartier-Bresson in your phone, but it does claim to be able to select interesting video clips from an event.
To do this it first uses a pairwise comparison technique, common in market research, to rate video clips. Randomly selected pairs of video segments were shown to subjects, who were asked to pick the one they preferred. From this data it is possible to compute a subjective rating score. The big shock is that 50 million pairs, drawn from 1000 videos, were rated. That is a lot of pairwise comparisons!

The next part of the idea is to create a way to assign a rating score to new video clips without involving a human. This in itself is a two-step process. First a neural network is used to detect what sorts of objects, concepts and actions are within a clip. This has nothing to do with rating the video directly, but it is reasonable to suppose that the rating depends on these things. The network was converted to one of Google's MobileNet implementations, which are neural networks optimized to run on mobile devices.
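How a pile of "I prefer this one" choices turns into a rating score is not spelled out here. As a rough illustration only, the sketch below fits a Bradley-Terry model, a standard way of scoring items from pairwise preferences. The choice of model, the function name `bradley_terry` and the toy clip names are all assumptions made for the example, not details of Google's pipeline.

```python
from collections import defaultdict


def bradley_terry(comparisons, iterations=200):
    """Estimate a score per clip from (winner, loser) pairs.

    Assumption: a Bradley-Terry model, fitted with the usual iterative
    update. The article does not say which method Google used.
    """
    wins = defaultdict(int)         # total wins per clip
    pair_counts = defaultdict(int)  # how often each unordered pair was shown
    clips = set()
    for winner, loser in comparisons:
        wins[winner] += 1
        pair_counts[frozenset((winner, loser))] += 1
        clips.update((winner, loser))

    scores = {clip: 1.0 for clip in clips}
    for _ in range(iterations):
        updated = {}
        for clip in clips:
            denom = 0.0
            for pair, count in pair_counts.items():
                if clip in pair:
                    (other,) = pair - {clip}
                    denom += count / (scores[clip] + scores[other])
            updated[clip] = wins[clip] / denom if denom else scores[clip]
        # Rescale so that the scores always sum to the number of clips.
        total = sum(updated.values())
        scores = {clip: s * len(clips) / total for clip, s in updated.items()}
    return scores


# Toy data: each tuple is (preferred clip, rejected clip).
comparisons = [
    ("a", "b"), ("a", "b"), ("b", "a"),
    ("a", "c"), ("a", "c"),
    ("b", "c"), ("c", "b"),
]
ratings = bradley_terry(comparisons)
ranking = sorted(ratings, key=ratings.get, reverse=True)
```

Here clip `a` wins most of its comparisons and ends up at the top of `ranking`. With 50 million comparisons the same idea applies, although a production system would need something far more efficient than this double loop.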

The second stage is to take the output of the neural network and feed it into a comparatively classical statistical model - a piecewise linear regression model. This is fitted so as to best predict the subjective ratings of the videos that the humans rated. It is assumed that this rating will generalize to new videos. The rating is computed for each frame and an average score is computed for a segment of video. The segment of video with the highest score is the one to keep.

The procedure isn't quite as pure as this description sounds, and some human adjustment was also fed into the system:

"While this data-driven score does a great job of identifying interesting (and non-interesting) moments, we also added some bonuses to our overall quality score for phenomena that we know we want Clips to capture, including faces (especially recurring and thus “familiar” ones), smiles, and pets. In our most recent release, we added bonuses for certain activities that customers particularly want to capture, such as hugs, kisses, jumping, and dancing. Recognizing these activities required extensions to the ICM model."

The camera itself also has some guiding principles built into it. For example, don't go mad and flatten the battery by taking too many clips. Avoid taking lots of clips of the same type at the same time. Finally, capture more clips than you need and throw away the weakest.

There is still room for the human, however:

"Clips is designed to work alongside a person, rather than autonomously; to get good results, a person still needs to be conscious of framing, and make sure the camera is pointed at interesting content. We’re happy with how well Google Clips performs, and are excited to continue to improve our algorithms to capture that “perfect” moment!"

So, great: my role as a photographer is now reduced to finding a good spot to place the camera at an interesting event.

Google Clips is $249 in the Play store now. How well you think it works probably depends on how good a photographer you are and hence on how much you decide to hate it on principle.
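For readers who want to see the scoring stage in concrete terms, here is a minimal sketch of the idea described above: a piecewise linear function turns a per-frame value into a score, a bonus is added for frames containing a face, scores are averaged over a segment, and the best segment wins. Everything specific in it is an assumption made for illustration: the single scalar feature per frame (the real model works on the network's output), the knot positions in `KNOTS`, the size of `face_bonus` and the fixed segment length.

```python
from bisect import bisect_right

# Hypothetical knots (feature value, score) defining the piecewise linear map.
KNOTS = [(0.0, 0.0), (0.5, 0.2), (1.0, 1.0)]


def piecewise_linear(x, knots=KNOTS):
    """Evaluate a piecewise linear function given knots sorted by x."""
    xs = [kx for kx, _ in knots]
    if x <= xs[0]:
        return knots[0][1]
    if x >= xs[-1]:
        return knots[-1][1]
    i = bisect_right(xs, x)  # knots[i - 1] and knots[i] bracket x
    (x0, y0), (x1, y1) = knots[i - 1], knots[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def frame_score(feature, has_face=False, face_bonus=0.1):
    """Data-driven score plus a hand-tuned bonus (illustrative values)."""
    return piecewise_linear(feature) + (face_bonus if has_face else 0.0)


def best_segment(frame_scores, segment_len):
    """Return (start index, mean score) of the highest-scoring segment."""
    if not 0 < segment_len <= len(frame_scores):
        raise ValueError("segment_len must be between 1 and the frame count")
    starts = range(len(frame_scores) - segment_len + 1)
    start = max(starts, key=lambda s: sum(frame_scores[s:s + segment_len]))
    return start, sum(frame_scores[start:start + segment_len]) / segment_len


# Toy input: one made-up feature value and face flag per frame.
features = [0.1, 0.2, 0.9, 0.8, 0.7, 0.3]
faces = [False, False, True, True, False, False]
scores = [frame_score(f, face) for f, face in zip(features, faces)]
start, mean_score = best_segment(scores, segment_len=3)
```

With this toy input the winning segment starts at frame 2, where the high feature values and the face bonus coincide. Fitting the knots so that the scores match the human ratings is the regression step itself, which this sketch leaves out.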

More Information

- Automatic Photography with Google Clips

Related Articles

- Google Implements AI Landscape Photographer
- Google Releases Object Detector Nets For Mobile
- Selfie Righteous - Corrects Selfie Perspective
- Google Presents --- Motion Stills
- Removing Reflections And Obstructions From Photos
- Google - We Have Ways Of Making You Smile
- New Algorithm Takes Spoilers Out of Pics
- Google Has Software To Make Cameras Worse


Last Updated (Sunday, 20 May 2018)