Azure Cosmos DB Gets Multi-Masters

Written by Kay Ewbank

Thursday, 10 May 2018

Microsoft has announced a number of improvements to Azure Cosmos DB, the globally distributed database service it launched last year. At this year's Build conference the company detailed a preview of multi-master support, along with shared throughput provisioning and a bulk executor library.

Cosmos DB is a globally distributed, multi-model database service that lets you scale throughput and storage independently across any number of Azure's geographic regions. It automatically indexes all data, and it supports document, key-value, graph and column-family data models. Cosmos DB has wire-compatible APIs for MongoDB and Apache Cassandra, a graph API based on Apache TinkerPop's Gremlin, and a native SQL dialect.

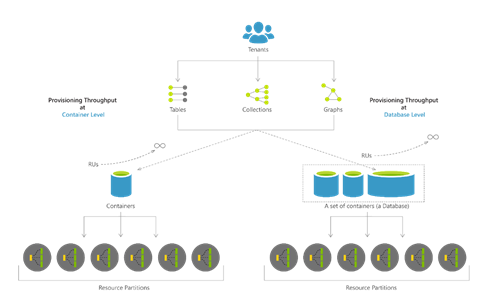

The new multi-master feature should mean that data can be written in, and synchronized across, multiple regions and sites without loss of consistency. This is probably the hardest thing to achieve in a distributed database, because you need to work out what happens when the same record is updated in more than one master copy: whose change should override the other? Microsoft says the new feature provides guaranteed consistency. Write masters can be located in any Azure region, and Microsoft guarantees single-digit-millisecond write latency. Managed cloud databases from Google and AWS already offer similar facilities, but Microsoft claims the Cosmos DB solution is preferable because it detects conflicts automatically and offers a choice of policies for deciding which master should 'win' when clashes occur.

The next improvement is a provisioning option that lets you allocate provisioned throughput to a set of containers, so that the containers share it. Until now, Cosmos DB has let you configure the throughput you need and pay only for the throughput you have reserved, with throughput set per container, where a container is a collection, table or graph depending on which API you use. The new option makes capacity planning simpler, as you can configure provisioned throughput at different granularities, either programmatically or through the Azure portal. In theory this should make Cosmos DB more affordable for smaller databases, but it's worth noting that per-database throughput currently requires a minimum of 50,000 RU/s (request units per second), which costs almost $3000 a month. If you set up the minimum Enterprise system of Cosmos DB, Power BI and Azure API Management, the licensing cost is over $100,000 a year.

The final improvement is a Bulk Executor library for .NET and Java, which lets you perform bulk operations in Azure Cosmos DB through APIs for bulk import and bulk update.
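To make the multi-master conflict problem described above more concrete, here is a minimal sketch of one common resolution policy, last-writer-wins, in which the copy with the latest timestamp wins. This is a generic illustration of the technique, not Cosmos DB's API: the `Version` shape, the `ts` field, the region names and the helper functions are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Version:
    """One region's copy of a record (hypothetical shape, not a Cosmos DB type)."""
    region: str
    ts: int        # write timestamp, e.g. seconds since the epoch
    value: dict


def resolve_lww(versions):
    """Last-writer-wins: the highest timestamp wins.

    Ties are broken by region name so that every replica, running the same
    rule on the same set of versions, picks the same winner.
    """
    if not versions:
        raise ValueError("no versions to resolve")
    return max(versions, key=lambda v: (v.ts, v.region))


def merge_replicas(*replicas):
    """Merge per-region dicts of {record_id: Version} into a single view,
    applying last-writer-wins to any record written in more than one region."""
    by_id = {}
    for replica in replicas:
        for rec_id, version in replica.items():
            by_id.setdefault(rec_id, []).append(version)
    return {rec_id: resolve_lww(vs) for rec_id, vs in by_id.items()}


if __name__ == "__main__":
    # The same order is updated concurrently in two regions.
    west = {"order-1": Version("westus", 100, {"status": "shipped"})}
    east = {"order-1": Version("eastus", 105, {"status": "cancelled"}),
            "order-2": Version("eastus", 90, {"status": "new"})}
    merged = merge_replicas(west, east)
    print(merged["order-1"].value)   # {'status': 'cancelled'}
    print(merged["order-2"].region)  # eastus
```

Last-writer-wins is simple and deterministic, but it silently discards the losing write and depends on reasonably consistent clocks across regions. That is why having a choice of conflict policies, as Microsoft says Cosmos DB offers, matters for data where losing an update is unacceptable.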
