| Programmer's Guide To Theory - Information Theory |

| Written by Mike James | ||||

| Monday, 27 July 2020 | ||||

Page 2 of 3

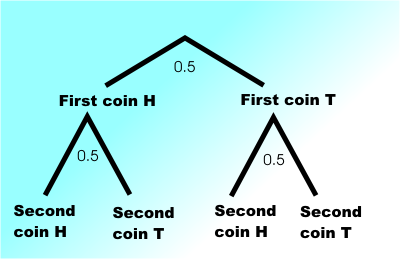

Logs(Skip this section if you are happy with all things logarithmic). Part of the problem here is the use of a negative logarithm, so a brief reminder of what logs are all about is sure to help. The log of a number x to a base b is just the number that you have to raise b to get x. That is, if: by=x then y is the log of x to the base b. For logs to the base 2 we simply have to find the number that we raise 2 by to get the specified value. So log2(4)=2 because 22=4 and continuing in this way you can see that: n log2(n) 1 0 (20=1) 2 1 (21=2) 4 2 (22=4) 8 3 (23=8) 16 4 (24=16) The only problem you might have is with 20=1, which is so just because it makes overall sense. Clearly powers of two have nice simple logs to the base 2 and values in between powers of two have logs which aren’t quite so neat. For example, log2(9) is approximately 3.17 because 23.17 is almost 9. We need to know one more thing about logs and this is how to take the log of a fraction. If you raise a number to a negative power then that’s the same as raising 1 over the number to the same power. For example: The reason this works is that powers add and you can deduce what a negative power must be by looking at expressions like: 2+1 x 2-1 = 21-1 = 20 = 1 and you can see from this that: 2-1 = 1/21 = 1/2 and more generally: a-b = 1/ab This also means that that logs of fractions are negative and, given probabilities are always less than one, log2(p) where p is a probability, is negative – hence the reason for including the minus sign in the definition. n log2n -log2n 1/2 -1 1 1/4 -2 2 1/8 -3 3 1/16 -4 4 BitsNow that we have the mathematics out of the way, we need to return to look again at the idea of information as measured by I(A)=-log2(p). You may have noticed that powers of two seem to figure in the calculation of information and this seems to be very natural and appropriate when it comes to computers. However, to see how deep and natural the connection is we can play a simple game. Suppose we flip a fair coin and you have to find out if it landed as a head or a tail. It’s a very boring game because you can simply ask me and I will tell you, but some information has flowed between us and we can attempt to use our new measure of information to find out how much. Before I answer the question, the two results “Heads” or “Tails” are equally possible and so their probabilities are simply p=0.5 and q=0.5 respectively. So when I give you the answer the amount of information I pass to you is: I(answer) = -log2(.5) = -(-1)= 1 This is quite interesting because in this binary split between two equally likely alternatives I am passing you 1 unit of information. We can extend this a little. Suppose two coins are tossed and you have to ask questions to find out if the result was (Head, Head), (Head, Tail), (Tail, Head) or (Tail, Tail), but you can only ask about one coin at a time.

Coins Outcomes Information

1 2 1

2 4 2

3 8 3

4 16 4

5 32 5

It seems that if you find out which of 32 possible outcomes occurred you only gain 5 units of information – but then you only needed to ask five binary questions! You could of course do the job less efficiently. You could ask 32 questions of the type “Did you get HTHTH?” until you get the right answer but this is up to you. If you want to gather 5 units of information in an inefficient way then that’s your choice and not a reflection of the amount of information you actually gain. Notice that this is also the first hint that information theory may tell you something about optimal ways of doing things. You can choose to be inefficient and ask 32 questions or you can do things in the most efficient way possible and just ask 5. |

||||

| Last Updated ( Monday, 27 July 2020 ) |