| Flink Gets Event-time Streaming |

| Written by Kay Ewbank | |||

| Monday, 30 November 2015 | |||

|

Apache Flink 0.10.0 has been released with improvements for data stream processing, support for event-time streaming and exactly-once processing.

Apache Flink is an open source platform for distributed stream and batch data processing. It consists of a streaming dataflow engine that provides data distribution, communication, and fault tolerance for distributed computations over data streams. Flink includes several APIs, including the DataSet API for static data embedded in Java, Scala, and Python; the DataStream API for unbounded streams embedded in Java and Scala; and the Table API with a SQL-like expression language embedded in Java and Scala. In the new version, the DataStream API has been upgraded from its former beta status, and the developers have worked to make Apache Flink a production-ready stream data processor with a competitive feature set. In practical terms, this means support for event-time and out-of-order streams, exactly-once guarantees in the case of failures, a flexible windowing mechanism, sophisticated operator state management, and a highly-available cluster operation mode. A new monitoring dashboard with real-time system and job monitoring capabilities has also been added.

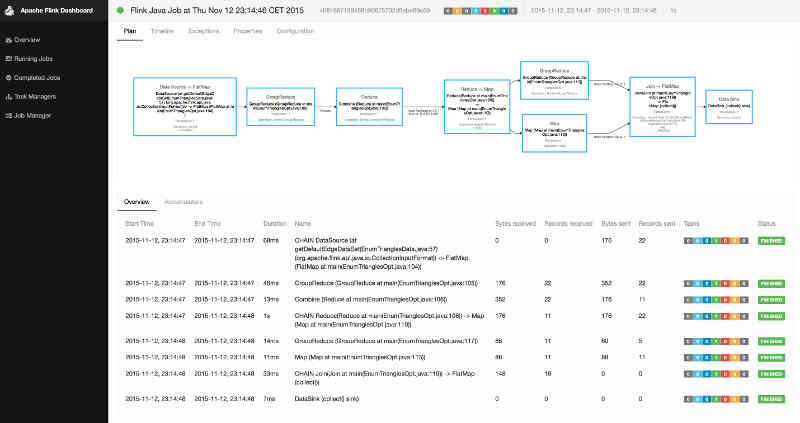

The event-time stream processing is designed for data sources that produce events with associated timestamps such as sensor or user-interaction events. Such data is often drawn from several sources meaning events arrive out-of-order in terms of their timestamps. The stream processor needs to put these into the correct order, and the new version supports event-time processing as well as ingestion-time and processing-time processing. Stateful stream processing is also supported, so that Flink can maintain and update state information in long running applications where operator state must be backed up to persistent storage at regular intervals. Flink 0.10.0 offers flexible interfaces to define, update, and query operator state and hooks to connect various state backends. Another improvement is support for high availability modes for standalone cluster and YARN setups. This feature relies on Apache Zookeeper for leader election and persisting small sized meta-data of running jobs. The DataStream API has also been revised, with improvements to stream partitioning and window operations, with window assigners, triggers, and evictors. There are also new connectors for data streams, including an exactly-once rolling file sink which supports any file system, including HDFS, local FS, and S3. The Apache Kafka producer has been updated to use the new producer API, and connectors have been added for ElasticSearch and Apache Nifi. The new web dashboard and real-time monitoring support lets you view the progress of running jobs and see real-time statistics of processed data volumes and record counts. It also gives access to resource usage and JVM statistics of TaskManagers including JVM heap usage and garbage collection details. Other improvements include off-heap managed memory support; the ability to work with outer joins; and improvements to Gelly, Flink’s API and library for processing and analyzing large-scale graphs. The Flink community held its first conference in October, and the slides and videos from the session are available on the website if you'd like to find out more about Flink.

More InformationRelated ArticlesGoogle Announces Big Data The Cloud Way FLink Reaches Top Level Status

To be informed about new articles on I Programmer, sign up for our weekly newsletter,subscribe to the RSS feed and follow us on, Twitter, Facebook, Google+ or Linkedin.

Comments

or email your comment to: comments@i-programmer.info |

|||

| Last Updated ( Monday, 30 November 2015 ) |