| NVIDIA's Neural Network Drives A Car |

| Written by Mike James | |||

| Tuesday, 26 April 2016 | |||


NVIDIA has moved into AI in a big way, in both hardware and software. Now it has implemented an end-to-end neural network approach to driving a car. This is a much bigger breakthrough than winning at Go, and it raises fundamental questions about what sort of systems we are willing to accept driving cars for us.

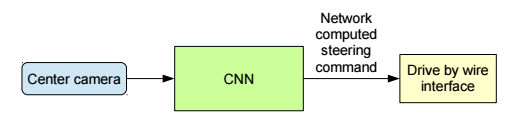

The term "end-to-end" has, in AI, come to mean giving a neural network the most basic raw data as its input and wiring its output directly to whatever affects those inputs. This contrasts with feeding in heavily preprocessed data and letting the outputs control something more high-level. NVIDIA is reporting the results of its end-to-end self-driving car project, called Dave-2. In this case the raw input is simply video of the view of the road and the output is the steering wheel angle. The neural network in between learns to steer by being shown videos of a human driving, together with what the human driver did to the steering wheel in response. You could say that the network learned to drive by sitting next to a human driver.

"Training data was collected by driving on a wide variety of roads and in a diverse set of lighting and weather conditions. Most road data was collected in central New Jersey, although highway data was also collected from Illinois, Michigan, Pennsylvania, and New York. Other road types include two-lane roads (with and without lane markings), residential roads with parked cars, tunnels, and unpaved roads. Data was collected in clear, cloudy, foggy, snowy, and rainy weather, both day and night. In some instances, the sun was low in the sky, resulting in glare reflecting from the road surface and scattering from the windshield."
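To make "learning to steer from recorded frames and steering angles" concrete, here is a minimal sketch of the idea in plain Python. Everything in it is an assumption made for illustration: the one-row toy "frame", the `make_frame` and `human_steering` helpers, and the linear model trained by stochastic gradient descent are stand-ins, not NVIDIA's network or data. What it shares with Dave-2 is only the shape of the problem: raw pixels in, steering angle out, with a human's recorded steering as the training target.

```python
import random

WIDTH = 8  # hypothetical one-row "camera frame", 8 pixels wide


def make_frame(offset):
    """Toy frame: a bright lane marker whose pixel position shifts with the
    car's lateral offset from the lane centre (assumed, not real video)."""
    marker = (WIDTH - 1) / 2 + offset
    return [max(0.0, 1.0 - abs(i - marker)) for i in range(WIDTH)]


def human_steering(offset):
    """Stand-in for the recorded human driver: steer back toward the centre."""
    return -0.5 * offset


def predict(weights, bias, frame):
    """Linear model: steering angle as a weighted sum of raw pixel values."""
    return bias + sum(w * p for w, p in zip(weights, frame))


def train(samples, epochs=40, lr=0.1, seed=0):
    """Fit pixels -> steering angle by stochastic gradient descent on the
    squared error between the model's angle and the human's angle."""
    rng = random.Random(seed)
    weights, bias = [0.0] * WIDTH, 0.0
    samples = list(samples)
    for _ in range(epochs):
        rng.shuffle(samples)
        for frame, angle in samples:
            error = predict(weights, bias, frame) - angle
            bias -= lr * error
            weights = [w - lr * error * p for w, p in zip(weights, frame)]
    return weights, bias


# "Recorded drive": frames paired with what the human did to the wheel.
rng = random.Random(42)
offsets = [rng.uniform(-2.0, 2.0) for _ in range(300)]
recorded = [(make_frame(o), human_steering(o)) for o in offsets]
weights, bias = train(recorded)

# Compare the learned steering with the human's for one lane position.
print(predict(weights, bias, make_frame(1.2)), human_steering(1.2))
```

Note that nothing in the training loop mentions lane markers. The model is only ever told what the human did with the wheel, which is the point of the end-to-end approach.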

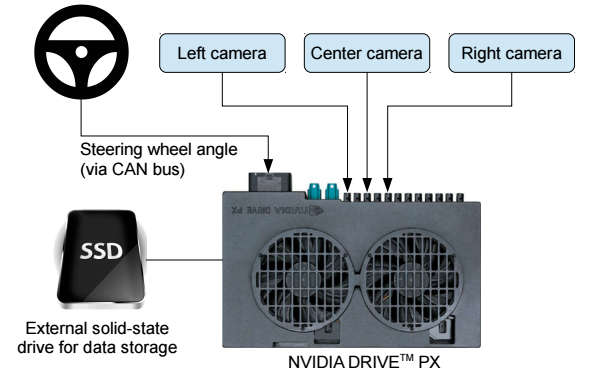

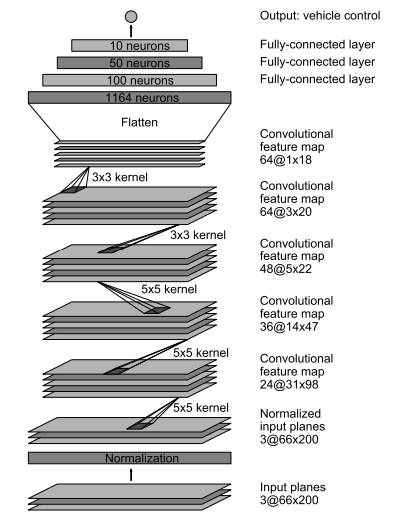

Compare this to the conventional self-driving car approach, which breaks the task into different components - lane finding, other car detection, guard rail location and so on. In most cases a detailed and accurate map of the road is also required, and the program is essentially a set of if..then rules that use the input features to deterministically generate the outputs. This approach to self-driving cars comes with a measure of verifiability that gives confidence that the design works and, when it doesn't, an explanation of why. For example, the recent Google self-driving car crash with a bus resulted in a bus detector being added to the system so it wouldn't happen again. The problem with this "engineering" approach is that the system can't cope with anything new that it encounters. If it isn't in the if..then rules the car can't cope.

While NVIDIA refers to Dave-2 as a self-driving system, it is restricted to just one aspect of driving a vehicle - steering, which involves perception and what in a human would be eye-to-hand co-ordination. While this is a fundamental aspect of driving, it doesn't cater for the other crucial matters of starting, stopping and speed control.

As a neural network, Dave-2 can generalize to situations it has never been trained on, or at least we hope it can. This means that a self-driving car using an end-to-end approach could be more capable, but also perhaps less predictable. As there are no if..then rules to examine, it is much harder to know what a neural network will do, because the way that it works is distributed through the network. While you might not be able to point to a specific "bus detector", the network could still avoid a collision with a bus.

After training, Dave-2 was reduced to using a single forward-facing camera. The network architecture was a deep convolutional network with 27 million connections and 250,000 parameters.
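The gap between 27 million connections and 250,000 parameters is what you would expect from a convolutional network, where each small filter is reused at every position in the image. The sketch below counts both quantities for one plausible layout. The layer shapes are an assumption: they follow the architecture as described in NVIDIA's paper (five convolutional layers followed by fully connected layers on a 66x200 input), not anything stated in this article, and `count_network` is a hypothetical helper written for this illustration.

```python
# Assumed layer shapes, taken from the architecture described in NVIDIA's
# paper rather than from this article.
# Each conv entry: (in_channels, out_channels, kernel_size, out_height, out_width)
CONV_LAYERS = [
    (3, 24, 5, 31, 98),
    (24, 36, 5, 14, 47),
    (36, 48, 5, 5, 22),
    (48, 64, 3, 3, 20),
    (64, 64, 3, 1, 18),
]
# Each dense entry: (inputs, outputs); 1152 = 64 channels * 1 * 18 positions.
DENSE_LAYERS = [(1152, 100), (100, 50), (50, 10), (10, 1)]


def count_network(conv_layers, dense_layers):
    """Return (parameters, connections) for a stack of conv and dense layers.

    Parameters are the distinct learned numbers (weights plus biases).
    Connections count every use of a weight, so a conv filter is counted
    once per output position it is applied to.
    """
    params = 0
    connections = 0
    for c_in, c_out, k, h, w in conv_layers:
        weights = k * k * c_in * c_out
        params += weights + c_out           # one bias per output channel
        connections += weights * h * w      # filters reused at h*w positions
    for n_in, n_out in dense_layers:
        params += n_in * n_out + n_out      # one bias per output unit
        connections += n_in * n_out         # each weight used exactly once
    return params, connections


params, connections = count_network(CONV_LAYERS, DENSE_LAYERS)
print(f"parameters:  {params:,}")
print(f"connections: {connections:,}")
```

Under these assumed shapes the totals come out at roughly a quarter of a million parameters and roughly 27 million connections, consistent with the figures quoted above. Nearly all of the connections come from the convolutional layers, while close to half of the parameters sit in the first fully connected layer.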

After testing in simulation, Dave-2 was taken out on the road - the real road. Performance wasn't perfect, but the system did drive the car for 98% of the time, leaving the human just 2% of the driving to do. You can see a video of it in action:

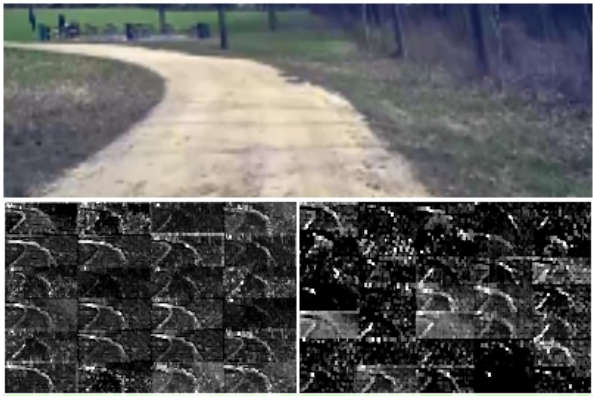
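The article doesn't say how the 98% figure was measured. One way to arrive at such a number, sketched below, is to charge a fixed time penalty for every human intervention and express what is left as a percentage of the drive. The 6-second default is an assumption borrowed from the simulation metric in NVIDIA's paper, and the on-road figure may well have been measured differently. The function name `autonomy_percent` is made up for this example.

```python
def autonomy_percent(interventions, elapsed_seconds, penalty_seconds=6.0):
    """Percentage of a drive credited to the system, charging a fixed
    time penalty (an assumed 6 seconds) for each human intervention."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    human_seconds = interventions * penalty_seconds
    return (1.0 - human_seconds / elapsed_seconds) * 100.0


# Ten interventions in a ten-minute drive cost 60 of the 600 seconds.
print(autonomy_percent(interventions=10, elapsed_seconds=600))
# Two interventions in the same drive cost 12 of the 600 seconds.
print(autonomy_percent(interventions=2, elapsed_seconds=600))
```

On this measure, 98% over a ten-minute drive would correspond to two interventions, which gives a feel for how often the human still has to take the wheel.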

To me it looks as if it drifts in its lane a bit too much to pass for a human driver, but this could be a control loop problem rather than a deep AI issue. Examining the network's structure suggests that it did learn to detect useful road features, even though it was trained only to produce steering outputs, not to find road features.

It is reasonable to suppose that this sort of approach is superior to fully engineered solutions, but are you going to trust it?

"Compared to explicit decomposition of the problem, such as lane marking detection, path planning, and control, our end-to-end system optimizes all processing steps simultaneously. We argue that this will eventually lead to better performance and smaller systems. Better performance will result because the internal components self-optimize to maximize overall system performance, instead of optimizing human-selected intermediate criteria, e.g., lane detection. Such criteria understandably are selected for ease of human interpretation which doesn't automatically guarantee maximum system performance."

Do we need to understand a system to have confidence that it will work? If we learn the lessons of traditional buggy software, the answer seems to be no.

More Information

End to End Learning for Self-Driving Cars

Related Articles

Self Parking Chairs - Office Utopia

WEpod - First Driverless Passenger Service Arrives

Self Driving Vehicles Go Public

Google's Self Driving Cars - Not So Smart?

Google's Self-Driving Cars Tackle Urban Traffic Hazards

Google Self-Driving Car For Daily Commute

Driverless Cars - More Than Just Google

Driverless Cars Become Legal - The Implications

Robot cars - provably uncrashable?


| Last Updated ( Tuesday, 26 April 2016 ) |