IBM's Watson Gets Sensitive

Written by Kay Ewbank

Thursday, 25 February 2016

IBM has released new Watson APIs that help developers add emotion analysis to their apps. The services are part of IBM's open Watson platform, which uses natural language processing and machine learning to discover insights in large sets of unstructured data. According to IBM, Watson represents a new era of cognitive computing in which systems understand the world the way humans do: through senses, learning, and experience. The new APIs all aim to help systems based on machine learning interact more naturally with people.

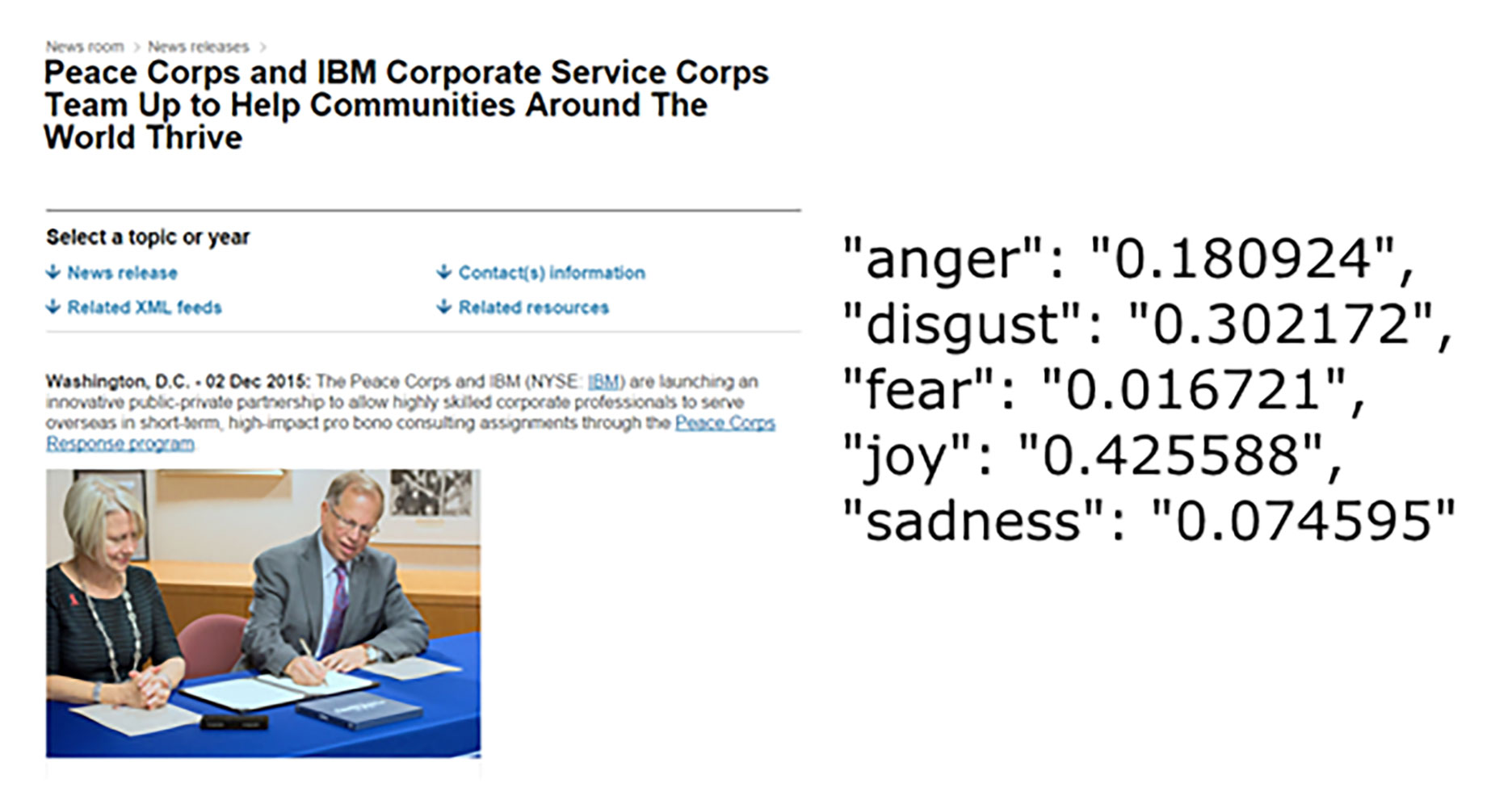

The first of the three new APIs, Tone Analyzer, analyzes a piece of text such as an email and returns insights about the emotional, social and language tones it reflects. According to a post on the Watson blog, the emotion tone now has five sub-tones: anger, fear, joy, sadness, and disgust, adopted from Plutchik's Wheel of Emotions. In response to user feedback, these replace the previous three categories: negative, cheerful, and anger.
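Because Tone Analyzer returns a score for each sub-tone, an application usually has to decide which emotion, if any, dominates a message. The Python sketch below illustrates only that step. It assumes the scores have already been extracted into a plain dictionary keyed by the five sub-tone names; the dictionary layout, the `dominant_emotion` helper and the 0.5 threshold are hypothetical and do not reflect the actual Watson response format.

```python
from typing import Dict, Optional

# The five emotion sub-tones described for Tone Analyzer.
EMOTION_TONES = ("anger", "fear", "joy", "sadness", "disgust")


def dominant_emotion(scores: Dict[str, float], threshold: float = 0.5) -> Optional[str]:
    """Return the highest-scoring emotion sub-tone, or None when no
    sub-tone reaches the threshold.

    `scores` is a hypothetical, simplified mapping of sub-tone name to
    score; it is NOT the real Watson Tone Analyzer response schema.
    The default threshold is an arbitrary illustrative choice.
    """
    # Keep only the five emotion sub-tones and ignore any other keys.
    relevant = {tone: scores[tone] for tone in EMOTION_TONES if tone in scores}
    if not relevant:
        return None
    tone, score = max(relevant.items(), key=lambda item: item[1])
    return tone if score >= threshold else None


if __name__ == "__main__":
    # Made-up scores for a cheerful email, for illustration only.
    email_scores = {"anger": 0.12, "fear": 0.05, "joy": 0.81, "sadness": 0.09, "disgust": 0.02}
    print(dominant_emotion(email_scores))  # joy
```

Returning None when the top score is below the threshold lets the caller treat weak signals as neutral; that is a design choice of this sketch, not something IBM prescribes.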

The second addition, Emotion Analysis, is part of Watson's AlchemyLanguage suite of APIs. It takes a piece of text such as a web page and returns confidence scores for anger, disgust, fear, joy, and sadness. The scores are calculated by a stacked generalization ensemble framework that builds on the initial results of several machine learning models. Each lower-level model uses a combination of machine learning algorithms and language features such as words, phrases, punctuation, and overall sentiment. The idea is that users will be able to apply it to texts such as customer reviews, surveys, and social media posts. According to IBM: "businesses can now identify if, for example, a change in a product feature prompted reactions of joy, anger or sadness among customers."

The third API, Visual Recognition, takes an image and returns scores for relevant classifiers representing things such as objects, events and settings. What sets Visual Recognition apart from other visual search engines is that, while most tag images with a fixed set of classifiers or generic terms, it lets you train Watson on custom classifiers. You can then build apps that visually identify specific concepts and ideas, and produce searches tailored to your client's needs. IBM suggests that a retailer might create a tag for a particular style of pants in its new spring line, so that it can identify when an image of someone wearing those pants appears on social media.

Clearly on a roll in the emotional stakes, IBM has also added emotional capabilities to its text-to-speech engine and is re-releasing it as Expressive TTS. The claim is that it will let computers go beyond understanding natural language, tone and context, and also respond with the appropriate inflection.
Expressive TTS has been in research and development for twelve years. IBM says that until now, automated systems relied on a predetermined, rules-based corpus of words that used limited emotional cues based on raised or lowered tones. To improve on this, IBM worked out a larger and more subtle set of cues and added the ability to switch between expressive styles. This will give developers more flexibility in building cognitive systems that can show sensitivity in human interactions.

The first three APIs are available in beta, while Expressive TTS is generally available. IBM is also adding tooling capabilities and enhancing its SDKs (Node, Java, Python, iOS Swift and Unity) throughout Watson, and is adding Application Starter Kits to make it quick and easy for developers to customize and build with Watson.
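IBM describes Emotion Analysis as scoring text with a stacked generalization ensemble: several lower-level models each produce an initial result, and a higher-level model learns how to combine them. The following Python sketch shows that idea in miniature for a single emotion (joy). It is not IBM's implementation: the two base models (`keyword_model`, `punctuation_model`), the tiny lexicon and training set, and the logistic-regression meta-learner are all hypothetical choices made for illustration. Because these toy base models are fixed heuristics rather than trained models, the sketch skips the cross-validated (out-of-fold) predictions normally used to train a stacking meta-learner.

```python
import math
from typing import Callable, List, Sequence

# Hypothetical joy lexicon used by the toy keyword model.
JOY_WORDS = {"love", "great", "happy", "delighted", "excellent"}


def keyword_model(text: str) -> float:
    """Base model 1 (hypothetical): fraction of words found in JOY_WORDS."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    if not words:
        return 0.0
    return sum(w in JOY_WORDS for w in words) / len(words)


def punctuation_model(text: str) -> float:
    """Base model 2 (hypothetical): share of sentence-ending marks that are '!'."""
    marks = [c for c in text if c in ".!?"]
    if not marks:
        return 0.0
    return marks.count("!") / len(marks)


BASE_MODELS: List[Callable[[str], float]] = [keyword_model, punctuation_model]


def level0_outputs(text: str) -> List[float]:
    """Collect each base model's score; these become the meta-learner's inputs."""
    return [model(text) for model in BASE_MODELS]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_meta_learner(texts: Sequence[str], labels: Sequence[int],
                       epochs: int = 2000, lr: float = 0.5) -> List[float]:
    """Fit a logistic-regression meta-learner on the base-model outputs
    with batch gradient descent. Returns [bias, weight_1, weight_2, ...]."""
    rows = [level0_outputs(t) for t in texts]
    weights = [0.0] * (len(BASE_MODELS) + 1)
    for _ in range(epochs):
        grads = [0.0] * len(weights)
        for x, y in zip(rows, labels):
            p = sigmoid(weights[0] + sum(w * xi for w, xi in zip(weights[1:], x)))
            error = p - y  # gradient of log-loss w.r.t. the logit
            grads[0] += error
            for i, xi in enumerate(x, start=1):
                grads[i] += error * xi
        weights = [w - lr * g / len(rows) for w, g in zip(weights, grads)]
    return weights


def joy_confidence(weights: List[float], text: str) -> float:
    """Stacked-ensemble confidence score (0 to 1) that `text` expresses joy."""
    x = level0_outputs(text)
    return sigmoid(weights[0] + sum(w * xi for w, xi in zip(weights[1:], x)))


# Tiny, made-up training set: 1 = joyful, 0 = not joyful.
TRAIN_TEXTS = [
    "I love this, it is great!",
    "Delighted with the excellent service!",
    "Happy happy happy!",
    "The parcel arrived late.",
    "This is broken. I want a refund.",
    "Terrible support, never again.",
]
TRAIN_LABELS = [1, 1, 1, 0, 0, 0]

if __name__ == "__main__":
    meta_weights = train_meta_learner(TRAIN_TEXTS, TRAIN_LABELS)
    for review in ("What a great, happy day!", "The box was damaged."):
        print(f"{review!r}: joy confidence {joy_confidence(meta_weights, review):.2f}")
```

Running the script should print a high joy confidence for the first review and a low one for the second; the exact values depend on the learning rate and the number of epochs.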


Last Updated (Thursday, 25 February 2016)