| Kinect Yoga for the Blind |

| Written by Kay Ewbank |

| Monday, 28 October 2013 |


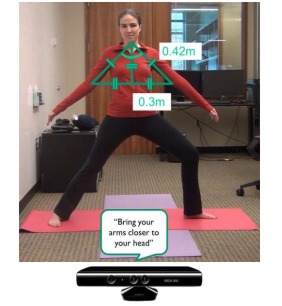

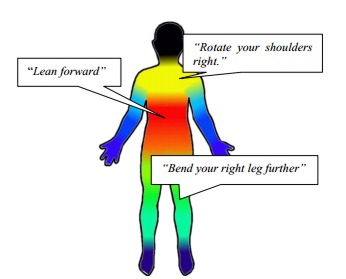

Researchers at the University of Washington have created an app that gives users spoken feedback on how to complete a yoga pose accurately. The app is intended to solve the problem faced by blind or partially sighted people when attempting to do yoga. In an announcement about the program, the university pointed out: “in a typical yoga class, students watch an instructor to learn how to properly hold a position. But for people who are blind or can’t see well, it can be frustrating to participate in these types of exercises.”

Kyle Rector, a UW doctoral student in computer science and engineering, and her collaborators designed a program, called Eyes-Free Yoga, that uses Microsoft Kinect software to track body movements and offer spoken feedback for six yoga poses, including Warrior I and II, Tree and Chair poses. The app uses the Kinect to read a user’s body angles, then tells the user how to adjust their arms, legs, neck or back to complete the pose they’re attempting. For example, the program might say: “Rotate your shoulders left,” or “Lean sideways toward your left.”

The report did not say whether you can grumble back at the instructor, telling it to try bending its own leg behind its ear if it thinks it’s so simple.

Each of the six poses in the app has about 30 different commands for improvement, based on a set of twelve rules that define how the body should be placed for that yoga position. Rector worked with a number of yoga instructors to specify the criteria for reaching the correct alignment in each pose. The Kinect first checks the user’s body core and suggests alignment changes, then moves to the head and neck area, and finally the arms and legs. It also gives positive feedback when a person is holding a pose correctly.
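The checking order described here (core first, then head and neck, then arms and legs, with praise when nothing needs fixing) can be sketched as a small prioritized rule check. This is only an illustration in Python, not the app's C# code: the `Rule` type, the measurement names, the tolerances and the spoken phrases other than “Lean sideways toward your left” are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Order in which body regions are checked, as described in the article.
REGION_ORDER = ["core", "head_neck", "arms_legs"]


@dataclass
class Rule:
    region: str                                      # one of REGION_ORDER
    is_satisfied: Callable[[Dict[str, float]], bool]  # test on measured angles
    correction: str                                  # spoken command if the test fails


def next_instruction(rules: List[Rule], measurements: Dict[str, float]) -> str:
    """Return the correction for the first violated rule, region by region."""
    for region in REGION_ORDER:
        for rule in rules:
            if rule.region == region and not rule.is_satisfied(measurements):
                return rule.correction
    # No rule violated: give positive feedback.
    return "Good job, hold this pose."


# Hypothetical rules; measurement keys and tolerances are invented for this sketch.
EXAMPLE_RULES = [
    Rule("arms_legs", lambda m: abs(m["front_knee_angle"] - 90) <= 10,
         "Bend your front knee to a right angle"),
    Rule("core", lambda m: abs(m["torso_lean"]) <= 5,
         "Lean sideways toward your left"),
    Rule("head_neck", lambda m: abs(m["neck_tilt"]) <= 5,
         "Bring your head back to center"),
]

if __name__ == "__main__":
    pose = {"torso_lean": 12.0, "neck_tilt": 0.0, "front_knee_angle": 140.0}
    print(next_instruction(EXAMPLE_RULES, pose))
```

Because the core is checked first, a user who is both leaning and has a knee out of position hears only the core correction until that is fixed, which keeps the spoken feedback to one instruction at a time.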

The app (written in C#) uses simple geometry and the law of cosines to calculate the angles formed by the different body parts while the user works on a yoga position. For example, in some poses a bent leg must be at a 90-degree angle, while the arm spread must form a 160-degree angle. The Kinect reads the angle of the pose using cameras and skeletal-tracking technology, then tells the user how to move to reach the desired angle.
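To make the geometry concrete, here is a minimal Python sketch of how a joint angle could be computed from three tracked points with the law of cosines, then compared with a target such as the 90-degree bent leg mentioned above. It is not the app's own code: the function names, the 10-degree tolerance and the feedback phrases are assumptions, and the points are plain (x, y, z) tuples rather than real Kinect skeleton data.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at joint b, formed by the points a-b-c.

    Uses the law of cosines: ac^2 = ab^2 + bc^2 - 2*ab*bc*cos(B).
    """
    ab = math.dist(a, b)
    bc = math.dist(b, c)
    ac = math.dist(a, c)
    if ab == 0 or bc == 0:
        raise ValueError("joint coincides with a neighbouring point")
    cos_b = (ab ** 2 + bc ** 2 - ac ** 2) / (2 * ab * bc)
    # Clamp to [-1, 1] to guard against floating-point rounding.
    cos_b = max(-1.0, min(1.0, cos_b))
    return math.degrees(math.acos(cos_b))


def knee_feedback(hip, knee, ankle, target=90.0, tolerance=10.0):
    """Hypothetical feedback for a pose that wants the knee bent to `target` degrees."""
    angle = joint_angle(hip, knee, ankle)
    if angle > target + tolerance:
        return "Bend your knee more"
    if angle < target - tolerance:
        return "Straighten your knee a little"
    return "Good job"


if __name__ == "__main__":
    # A straight leg: hip, knee and ankle lie on one vertical line.
    print(knee_feedback((0, 2, 0), (0, 1, 0), (0, 0, 0)))
```

A tolerance band of some kind is needed in practice because tracked joint positions are noisy, so an exact match to the target angle would almost never register.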

Rector and her collaborators published their methodology in the proceedings of the recent ACM SIGACCESS International Conference on Computers and Accessibility, in a paper titled Eyes-Free Yoga. They worked with 16 blind and low-vision people around Washington to test the program and get feedback. Several of the participants had never done yoga before, while others had tried it a few times or took yoga classes regularly. Thirteen of the 16 people said they would recommend the program and nearly everyone would use it again.

The Kinect was chosen for the app because of its ready accessibility and the fact it’s open source, though Rector says it does have some limitations in the level of detail with which it tracks movement. The plan is to make the app available online so users can download the program, plug in their Kinect and start doing yoga.

While the aim of the program is to make it easier for people who are blind or have low vision to enjoy yoga in a more interactive way, the technique also provides an interesting model for giving feedback.

More Information

Yoga accessible for the blind with new Microsoft Kinect-based program

Related Articles

Practical Windows Kinect In C#


| Last Updated ( Monday, 28 October 2013 ) |