| Harlan Goes Public For GPU Coding |

| Written by Kay Ewbank |

| Wednesday, 03 July 2013 |


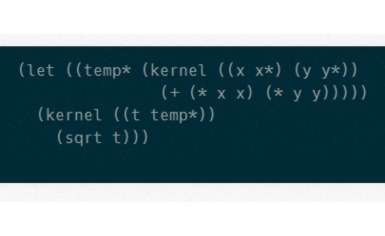

A new language for GPU computing has been made available to the public, although it is currently still ‘research quality’. Harlan is a Lisp-like, high-level GPU programming language designed to ‘push the expressiveness of languages available for the GPU further than has been done before’.

GPU computing offloads the elements of an application that require intensive computing power to the Graphics Processing Unit (GPU), while the remainder of the code continues running on the CPU. Because the GPU consists of thousands of small, efficient cores designed for parallel performance, it is ideally suited to logic that can be run in parallel. Serial portions of the code run on the CPU while parallel portions run on the GPU, so the app as a whole runs faster.

Eric Holk, who has created the language as part of his PhD research, explains that it has been designed for data parallel computing and has native support for data structures, including trees and ragged arrays. Support for higher order procedures is planned ‘very soon’.

A sample Harlan program will look very familiar if you have encountered Lisp before; if you haven't, it will look like a lot of parentheses.

Harlan, which is available on GitHub, is being put forward as an alternative to existing GPU languages such as CUDA, the programming model invented by NVIDIA. Holk says he believes the advantage of Harlan over CUDA is Harlan’s region system, which lets you work with more intricate pointer structures in the GPU. As an example, he says there is an interpreter for the Lambda Calculus as one of the test cases, which would be much harder to do in straight CUDA. Of course, Lambda Calculus and Lisp aren't a million miles apart, so you might expect Harlan to do better than CUDA.
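To give a feel for why a Lambda Calculus interpreter depends on intricate pointer structures, here is a minimal sketch in plain Python. It is not Harlan code and is not the test case from the Harlan repository. All the names in it (`Var`, `Lam`, `App`, `Closure`, `evaluate`, `church_to_int`) are invented for illustration. Terms are trees of nodes that point to other nodes, and every closure points to an environment. That sort of linked data is awkward to manage by hand in flat GPU buffers.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Var:
    name: str


@dataclass(frozen=True)
class Lam:
    param: str
    body: "Term"


@dataclass(frozen=True)
class App:
    fn: "Term"
    arg: "Term"


Term = Union[Var, Lam, App]


@dataclass(frozen=True)
class Closure:
    """A lambda paired with the environment it was created in."""
    param: str
    body: Term
    env: dict


def apply(fn, arg):
    # Native Python callables let us observe results (see church_to_int).
    if callable(fn):
        return fn(arg)
    return evaluate(fn.body, {**fn.env, fn.param: arg})


def evaluate(term, env=None):
    """Call-by-value evaluation of a term in an environment."""
    env = {} if env is None else env
    if isinstance(term, Var):
        return env[term.name]
    if isinstance(term, Lam):
        return Closure(term.param, term.body, env)
    if isinstance(term, App):
        return apply(evaluate(term.fn, env), evaluate(term.arg, env))
    raise TypeError(f"not a term: {term!r}")


def church(n):
    """Build the Church numeral for n: \\f. \\x. f (f ... (f x))."""
    body = Var("x")
    for _ in range(n):
        body = App(Var("f"), body)
    return Lam("f", Lam("x", body))


# ADD = \m. \n. \f. \x. m f (n f x)
ADD = Lam("m", Lam("n", Lam("f", Lam("x",
      App(App(Var("m"), Var("f")),
          App(App(Var("n"), Var("f")), Var("x")))))))


def church_to_int(value):
    """Decode a Church numeral by applying it to a native successor and 0."""
    return apply(apply(value, lambda k: k + 1), 0)


if __name__ == "__main__":
    total = evaluate(App(App(ADD, church(2)), church(3)))
    print(church_to_int(total))  # prints 5
```

Each `App` node holds references to two sub-terms, and each `Closure` holds a reference to a dictionary of further values, so evaluating even `2 + 3` creates a web of small linked objects.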
Another benefit of Harlan will be the support for higher order procedures; CUDA, being more like a parallel assembly language, currently does not support these.

More Information

Harlan

Interpreter for Lambda Calculus

Related Articles

Custom Bitmap Effects - Getting started



| Last Updated ( Wednesday, 03 July 2013 ) |