ImageNet Training Record - 24 Minutes

Written by Mike James

Thursday, 21 September 2017

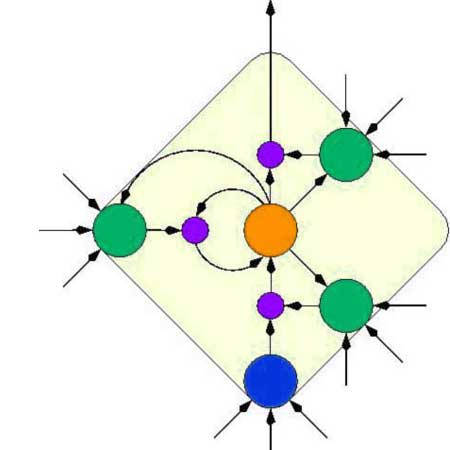

One of the problems with neural networks is how long it takes to train them. Researchers have just smashed the training barrier, cutting the time to train ResNet-50 from 14 days to one hour on a lower hardware budget than Facebook used for the same task, and claiming a world record of 24 minutes for AlexNet.

The time it takes to train a network is a fundamental barrier to progress. If it takes two weeks to train a network then you can't easily try out new ideas. If you want to tweak the settings then you have to wait two weeks to see if it works, and you can't really reduce the learning time by tackling smaller problems, because it's the bigger problems where things either work or don't work. We really do need a faster way to train networks. Currently the most common approach to making things faster is to throw more and more GPUs at the problem and hope things speed up.

The team of Yang You, Zhao Zhang, James Demmel, Kurt Keutzer and Cho-Jui Hsieh from UC Berkeley, the Texas Advanced Computing Center (TACC) and UC Davis have been taking a different approach, working on the software and the way that it utilizes the parallel hardware:

> Finishing 90-epoch ImageNet-1k training with ResNet-50 on a NVIDIA M40 GPU takes 14 days. This training requires 10¹⁸ single precision operations in total. On the other hand, the world’s current fastest supercomputer can finish 2 × 10¹⁷ single precision operations per second. If we can make full use of the supercomputer for DNN training, we should be able to finish the 90-epoch ResNet-50 training in five seconds. However, the current bottleneck for fast DNN training is in the algorithm level. Specifically, the current batch size (e.g. 512) is too small to make efficient use of many processors.
>
> For large-scale DNN training, we focus on using large-batch data-parallelism synchronous SGD without losing accuracy in the fixed epochs. The LARS algorithm enables us to scale the batch size to extremely large case (e.g. 32K).
> We finish the 100-epoch ImageNet training with AlexNet in 24 minutes, which is the world record. Same as Facebook’s result, we finish the 90-epoch ImageNet training with ResNet-50 in one hour. However, our hardware budget is only 1.2 million USD, which is 3.4 times lower than Facebook’s 4.1 million USD.

TACC has a supercomputer with 4,200 Intel Knights Landing (KNL) processors, called Stampede 2. It is Texas after all.
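The researchers name LARS but the quote does not say how it works. LARS (Layer-wise Adaptive Rate Scaling, from You, Gitman and Ginsburg, 2017) gives every layer its own learning rate, proportional to the ratio of that layer's weight norm to its gradient norm, so that raising the global rate for a huge batch does not make any single layer take a step that is large compared with its weights. What follows is a minimal pure-Python sketch assuming that formulation, with local rate η‖w‖ / (‖∇L‖ + β‖w‖) for trust coefficient η and weight decay β, applied with momentum after the per-worker gradients of synchronous data-parallel SGD have been averaged. The function names, the list-of-dicts layer layout and the default hyperparameters are hypothetical choices for illustration, not the Berkeley team's code.

```python
import math


def l2_norm(values):
    """Euclidean norm of a flat list of floats."""
    return math.sqrt(sum(v * v for v in values))


def average_worker_grads(worker_grads):
    """Synchronous data parallelism: element-wise mean of each worker's gradient.

    On a real cluster this step is an all-reduce across nodes; here it is a plain loop.
    """
    n = len(worker_grads)
    return [sum(parts) / n for parts in zip(*worker_grads)]


def lars_local_lr(w, g, trust_coef=0.001, weight_decay=0.0005, eps=1e-9):
    """Per-layer rate: trust_coef * ||w|| / (||g|| + weight_decay * ||w||)."""
    w_norm, g_norm = l2_norm(w), l2_norm(g)
    if w_norm == 0.0 or g_norm == 0.0:
        return 1.0  # illustrative fallback: use the global rate unchanged
    return trust_coef * w_norm / (g_norm + weight_decay * w_norm + eps)


def lars_step(layers, global_lr, momentum=0.9, trust_coef=0.001, weight_decay=0.0005):
    """One LARS-with-momentum update, applied layer by layer in place.

    Each layer is a dict of flat float lists: "w" (weights), "g" (averaged
    gradient) and "v" (momentum buffer). This layout is hypothetical.
    """
    for layer in layers:
        w, g, v = layer["w"], layer["g"], layer["v"]
        local_lr = lars_local_lr(w, g, trust_coef, weight_decay)
        for i in range(len(w)):
            # Gradient plus L2 weight decay, scaled by the global and per-layer rates.
            v[i] = momentum * v[i] + global_lr * local_lr * (g[i] + weight_decay * w[i])
            w[i] -= v[i]


# Two simulated workers each contribute a gradient for the same two layers.
grads_layer0 = average_worker_grads([[0.5, 0.0, 0.25], [0.5, 0.0, 0.75]])  # mean: [0.5, 0.0, 0.5]
grads_layer1 = average_worker_grads([[6.0, 0.0], [4.0, 0.0]])              # mean: [5.0, 0.0]
layers = [
    {"w": [1.0, 2.0, 2.0], "g": grads_layer0, "v": [0.0, 0.0, 0.0]},  # ||w|| = 3
    {"w": [0.01, 0.0], "g": grads_layer1, "v": [0.0, 0.0]},          # tiny weights, big gradient
]
lars_step(layers, global_lr=0.1)
```

In the example the second layer has tiny weights but a large gradient, so it gets a local rate more than a hundred times smaller than the first layer's. The LARS paper argues that this per-layer damping is what allows the global rate, and with it the batch size, to be pushed up without training diverging.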

It is also possible to reduce the cost:

> Facebook (Goyal et al. 2017) finishes the 90-epoch ImageNet training with ResNet-50 in one hour on 32 CPUs and 256 NVIDIA P100 GPUs (32 DGX-1 stations). Consider the price of a single DGX-1 station is 129,000 USD, the cost of the whole system is about 32 × 129,000 = 4.1 million USD. After scaling the batch size to 32K, we are able to use cheaper computer chips. We use 512 KNL chips and the batch size per KNL is 64. We also finish the 90-epoch training in one hour. However, our system cost is much less. The version of our KNL chip is Intel Xeon Phi Processor 7250, which costs 2,436 USD. The cost of our system is only about 2,436 × 512 = 1.2 million USD. This is the lowest budget for ImageNet training in one hour with ResNet-50.

It is obvious that we need better ways to train neural networks as, even at $1.2 million, the cost is too high for most researchers. It is also clear that, for the moment, dedicated processors like Google's TPU aren't a match for the really big supercomputers that are available. The theoretical time of five seconds for what currently takes an hour is tantalizing.
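The headline figures are simple arithmetic, and re-deriving them makes the trade-offs concrete. This sketch uses only numbers stated in the article (operation count, peak throughput, prices, chip counts, per-chip batch size); the variable names are illustrative. One detail it exposes: the quoted 3.4× ratio comes from dividing the rounded totals (4.1 / 1.2), while the unrounded totals give roughly 3.3×.

```python
# Back-of-envelope check of the figures quoted in the article.
# Every input is one of the article's numbers; nothing here is measured.

TOTAL_OPS = 1e18           # single-precision ops for 90-epoch ResNet-50
PEAK_OPS_PER_SEC = 2e17    # peak of the fastest supercomputer (2017)

DGX1_PRICE_USD = 129_000   # one DGX-1 station
DGX1_STATIONS = 32         # Facebook's setup: 256 P100 GPUs
KNL_PRICE_USD = 2_436      # one Intel Xeon Phi 7250
KNL_CHIPS = 512
BATCH_PER_KNL = 64

# Ideal training time if every operation ran at peak throughput.
ideal_seconds = TOTAL_OPS / PEAK_OPS_PER_SEC

# Synchronous data parallelism: the global batch is the sum of per-chip batches.
global_batch = KNL_CHIPS * BATCH_PER_KNL

facebook_cost = DGX1_STATIONS * DGX1_PRICE_USD
knl_cost = KNL_CHIPS * KNL_PRICE_USD

exact_ratio = facebook_cost / knl_cost
# Ratio after rounding each total to 0.1 million USD, as the quote does.
rounded_ratio = round(facebook_cost / 1e6, 1) / round(knl_cost / 1e6, 1)

print(f"ideal time at peak: {ideal_seconds:.0f} s")
print(f"global batch size:  {global_batch:,}")
print(f"Facebook system:    ${facebook_cost:,}")
print(f"KNL system:         ${knl_cost:,}")
print(f"cost ratio: {exact_ratio:.1f}x exact, {rounded_ratio:.1f}x from rounded totals")
```

Running it prints an ideal time of 5 s, a global batch of 32,768 (the "32K" in the quote), $4,128,000 against $1,247,232, and a cost ratio of 3.3x exact versus 3.4x from the rounded totals.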

More Information

ImageNet Training in 24 Minutes

Last Updated (Thursday, 21 September 2017)