| The Triumph Of Deep Learning |

| Written by Mike James | |||

| Friday, 14 December 2012 | |||

Page 1 of 2 Deep Neural Networks are succeeding at AI tasks in a way that can only be described as spectacular. What is the secret behind their success? Artificial Intelligence (AI) is full of false dawns where the next great breakthrough is here and intelligent machines and more will soon be with us. In this case the dawning has been very slow and it's more like progress than a revolution. Deep learning seems to have brought neural networks to their own particular breakthrough moment. How will we look back on this era? To a time when the solution was first found and all we had to do was push on in mostly the same direction? First we need to take a look at the long road that leads to where we are. If you know this history feel free to skip forward.

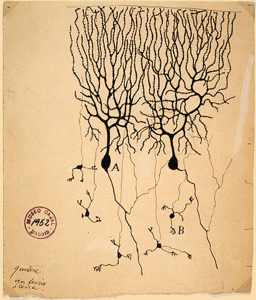

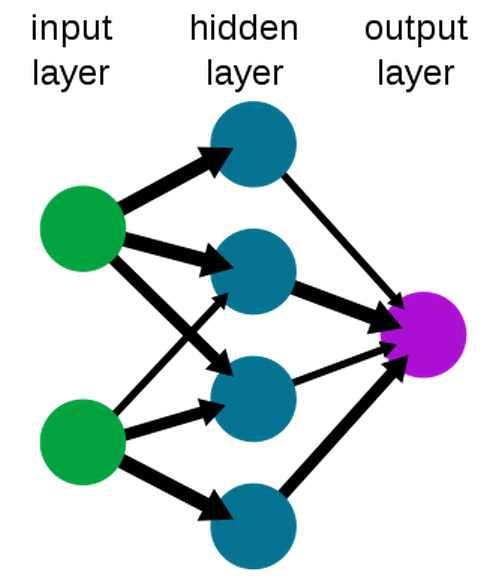

In the BeginningNeural networks represent the "obvious" way of creating artificial intelligence. Look at the brain. Look very hard and work out what its components are doing. Then build the same components, using different technologies and put the components together - you must end up with a brain. This is like not know how to build a computer but trying to do so by creating transistor like elements and putting them together in the same arrangement. This bottom up approach to AI has been going on since the early days of computing and it has been alternately hailed as a great invention or damed as the latest snake oil, perhaps well intentioned but not going anywhere. The problem is that it is fairly easy to create things that behave like neurons, the brains major component. What is not easy is working out what the whole thing does once you have assembled it. It is assumed that neurons get excited by other neurons and when they get excited enough they "fire" and send their excitement on to other connected neurons. This is very easy to model but how do you determine how the neurons should be connected and what should govern the strengths of connection? In other words how do you organize a neural network and how do you train it? One Layer Goes To ManyThis problem was solved for single layers of artificial neurons back in the 1950s and it was the first of the great breakthroughs. Then some one, Minsky and Papert to be precise, pointed out that single layers of neurons couldn't learn a lot of things that we really would like them to learn. The limitations of single layer neuronal nets was a real blow and for some time work on neural networks was regarded as a waste of time. Then in 1970s a learning rule for multi-layer networks - back propagation was invented - and it looked as if neural networks were back in favor. With back propagation neural networks could learn anything.

Multilayer networks could learn complicated things and they did - but very slowly. What emerged from this second neural network revolution was that we had a good theory but learning was slow and results while good but not amazing. It was difficult to believe that the typical neural network of the time could approach human intelligence. It seemed that even multilayer neural networks weren't particularly intelligent. As a result they fell out of favor once again and simpler but more effective techniques such as support vector machines came into fashion. AI went into applied engineering - it was a matter of what could be done with tweaked classical statistics and software. The real question, that received very little attention for such an important one, was - why don't multilayer networks learn? A Mediocre PerformanceThe answer was pieced together in the 1990s and it all had to do with the way the training errors were being passed back from the output layer to the deeper layers of artificial neurons. The so called "vanishing gradient" problem meant that as soon as a neural network got reasonably good at a task the lower layers didn't really get any information about how to change to help do the task better. The problem is that there are many many possible ways for the lower layers to be set up and most of them don't help the upper layers do better but they don't make things worse either. In fact the only time the lower layers helped a neural network much is when they were essential to doing the job at all. The problem is that there may be many configurations of the lower layers that make it much easier for the upper layers to do their job but back-propagation will take a very long time to find them. The problem is made worse by the fact that the optimum configuration of a layer may also depend on the configuration of the layers below. What this means is that as training proceeds a layer may be optimizing its performance based on the non-optimal configuration of lower layers. Put simply the lower layers in a deep network are very difficult to train unless they are absolutely essential for putting up even a reasonable performance. All of this and other considerations account for the reason why neural networks trained using back-propagation generally don't produce great results and when they do it is because they have been hand tuned and coaxed into producing a better performance. For this reason it became common practice to believe that there wasn't much point using lots of layers. Deep neural networks generally didn't perform any better than shallow networks. This was also assumed to be an indication that the whole idea was something of a failure. After all if neural networks are a good idea then the deeper the network surely the better? Deep Networks and StructureNow all of this has changed. Neural networks trained using back-propagation work better than any alternative methods. They are regularly winning competitions and generally showing how good they are. They are making possible speech recognition, translation and all the sorts of things that we thought they would be able to do when first invented. What exactly is the breakthrough? The answer is pre-training. The idea here is that the lower layers of the neural network need to absorb some of the structure of the input so that the later layers have an easier job. One way to think of this is to realize that the raw data has a lot of complexity and redundancy. If this can be reduces to a set of features that summarize it reasonably well then these features are easier to work with than the original data. It seems reasonable that the lower layers of a neural network should be about extracting features from the raw data and the subsequent layers are about extracting features of the features and so on. For example, if you are training a neural network to recognize a face then it would be an advantage if the lower layers recognized features that are the fundamental parts of a face - mouth, eyes, nose and so on. A the next level these features would be organized into higher level features - pairs of eyes with different spacings, mouth and nose and so on. A general neural network directly trained on the data will take a very long time to find an organization that delegates the low level features to the lower layers and so on. Its training doesn't carry with it any notion of a hierarchy and it is simply free to adopt any configuration that gets the job done. It would probably get to a hierarchical organization eventually but it would take far more time than we ever give it. The temptation is to hand create the low level features, and indeed if you do this then the network does learn to recognize a face much faster. Hand-crafted low-level features are the way we have made AI work in the past, but for true AI we need the features to be learned along with the rest of the task. How can we get a structure into the network so that lower levels automatically extract features that might be useful? This is what the pre-training idea is all about. |

|||

| Last Updated ( Tuesday, 11 September 2018 ) |