Wolfram Image Identification Project - A Neural Network By Any Other Name

Written by Mike James

Thursday, 14 May 2015

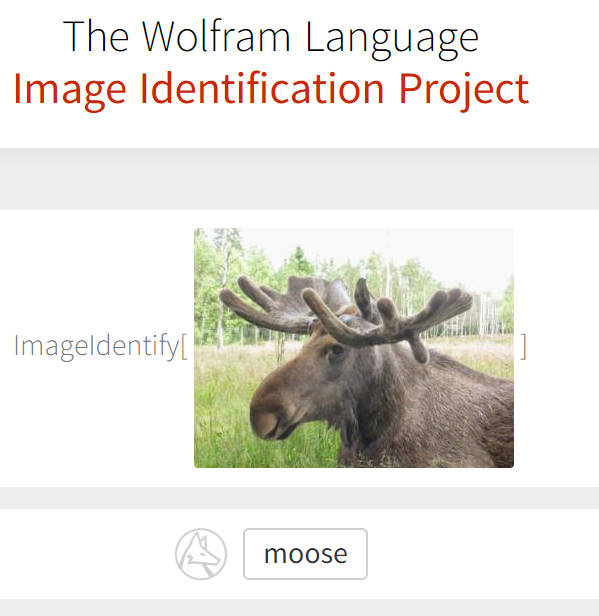

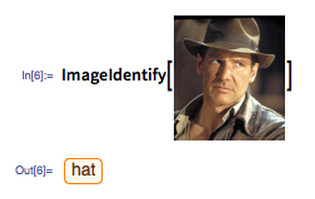

Wolfram's latest project is to bring image identification to the masses with a new ImageIdentify function. How does it work? The answer is "some sort of neural network", and that is about as far as you can get. If you work in AI, and on neural networks in particular, you might be a bit upset to discover that in the latest post to his blog Stephen Wolfram seems to suggest that he has just discovered convolutional neural networks. The post shows very little awareness of anything anyone else has done recently and presents the image classifier as if it were entirely Wolfram's own work.

A website has been set up that uses the Wolfram Language's ImageIdentify function to return tags for any image you care to upload. You also get the opportunity to supply the correct tag or to select a more appropriate one from the runners-up. Notice that you cannot train the classifier on your own image set: it either recognises the image or it doesn't.

The ImageIdentify function is supposed to select the appropriate machine learning method for the image, but the only method mentioned is a neural network. You pass the image to the function and it passes back a symbolic entity that can be processed further. Having recognition built into the Wolfram Language means that you can use it as a component in a larger system; for example, you could look up definitions of the objects detected or compile statistics. This is all very reasonable, fun, and perhaps even useful.

What is odd is the way it is presented on the Wolfram blog. After a brief introduction to the function, the post speculates on the nature of neural networks and recounts a brief history. Next, neural networks are described as "attractors", which is certainly what a Hopfield network is, but the description seems less appropriate for a standard convolutional neural network. However, it gives Wolfram the connection he needs to talk about his pet subject, cellular automata. The post then goes on to outline the development of the new function, but without saying what the neural network architecture is, what the training set was, or how it was trained. As the function is just an implementation of a trained neural network, it could even be one of the many published trained networks, although this seems unlikely.
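To see why "attractor" fits a Hopfield network so well, here is a minimal illustrative sketch in plain Python. It assumes a single stored pattern of +1/-1 values, Hebbian weights and asynchronous threshold updates, which is the textbook Hopfield network; it has nothing to do with Wolfram's ImageIdentify, whose architecture the post does not disclose. A corrupted input relaxes back to the stored pattern, which is exactly the attractor behaviour. A standard feed-forward convolutional classifier, by contrast, maps an image to class scores in a single pass with no such relaxation.

```python
# Minimal textbook Hopfield network (illustrative only): a stored
# pattern is a fixed point, and nearby states are pulled towards it.

def train(patterns):
    """Hebbian rule: w[i][j] = sum over patterns of p[i] * p[j], zero diagonal."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j]
    return w

def recall(w, state, max_sweeps=10):
    """Update units one at a time until a full sweep changes nothing."""
    s = list(state)
    n = len(s)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            h = sum(w[i][j] * s[j] for j in range(n))  # local field on unit i
            new = 1 if h >= 0 else -1                   # threshold unit
            if new != s[i]:
                s[i] = new
                changed = True
        if not changed:  # reached a fixed point (an attractor)
            break
    return s

stored = [1, -1, 1, -1, 1, -1, 1, -1]
w = train([stored])
noisy = [1, 1, 1, -1, 1, -1, -1, -1]  # the stored pattern with two units flipped
print(recall(w, noisy) == stored)     # prints True
```

The stored pattern is itself left unchanged by `recall`, which is what makes it a stable state rather than just one possible output.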

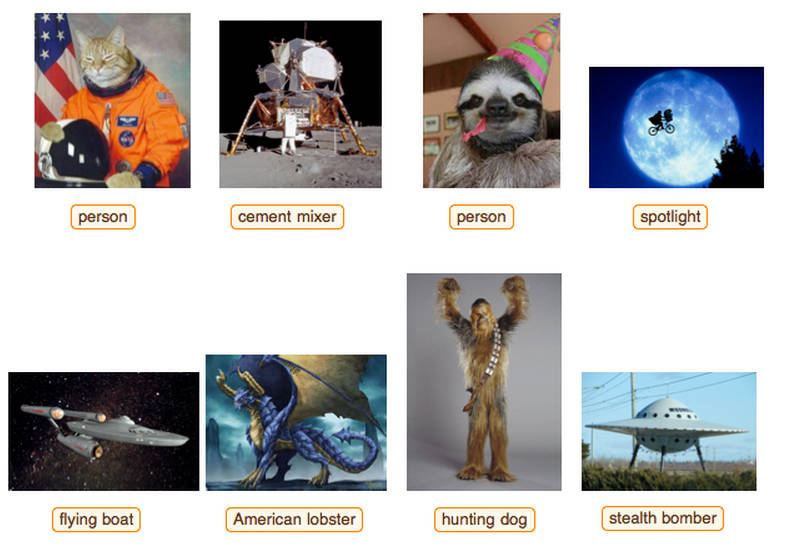

Even when a neural network gets it wrong, you can see where it is coming from...

Wolfram doesn't actually claim that this is all newly invented, but if you didn't know otherwise, this is the impression you would get. The only reference to another type of neural network, or to anyone else working in the field, is to the McCulloch-Pitts neuron which, while very interesting, is hardly a modern neural network. There is no mention of Geoffrey Hinton or Yann LeCun, and no mention of the Large Scale Visual Recognition Challenge or ImageNet. It is good to have a deep convolutional neural network in Mathematica, and it might allow experts and non-experts alike to build systems in which image recognition is just one of many steps. However, presenting a closed, proprietary approach to neural networks without any mention of the prior state of the art leaves a nasty taste in the mouth.

More Information

- Wolfram Language Artificial Intelligence: The Image Identification Project
- The Wolfram Language Image Identification Project

Related Articles

- How Old - Fun, Wrong, Potentially Risky?
- Microsoft's Project Oxford AI APIs For The REST Of Us
- The Deep Flaw In All Neural Networks
- Neural Turing Machines Learn Their Algorithms
- Google's Neural Networks See Even Better
- Google's Deep Learning AI Knows Where You Live And Can Crack CAPTCHA
- Google Uses AI to Find Where You Live
- Google Explains How AI Photo Search Works


Last Updated (Wednesday, 20 May 2015)