| Reverse Polish Notation - RPN |

| Written by Harry Fairhead |

| Sunday, 22 June 2025 |

|

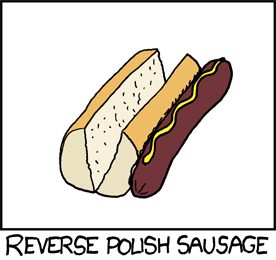

RPN or Reverse Polish Notation used to be a basic of the computer programmer's world, but today it is not as well known. Hence there may be some perfectly clued up programmers who are still left wondering what the sausage is doing outside of the bun. If you don't know what the sausage or the bun is all about you have missed, or forgotten, one of the most inspired of xkcd cartoons:

It isn't good to have to explain a joke, but in this case perhaps there is a good excuse. Let's explainAll programmers and most school children are familiar with the use of operators. For example, x+y is a use of the addition operator to add the value stored in x to the value stored in y. What is less well known is that this notation, called infix notation, which we have borrowed from mathematics is actually very difficult for machines to work with. An infix operator accepts as inputs the two values written to its left and right. You don't have to use operator notation at all in a programming language. For example you could write x+y as add(x,y) which is what a compiler will convert the infix expression to eventually. However we all know math too well to not use arithmetic expressions which form a sort of mini-language inside every, well nearly every, other programming language. Indeed the first really successful programming language - Fortran - was mostly a success because it converted arithmetic expressions i.e. Formulae into Code or For-Tran(slate). Before Fortran you had to write arithmetic some thing like add(a,mult(b,c)). In Cobol the problem of implementing automatic conversion of formulae was thought so difficult that programmers had to write things like Add A To B Multiply By C. So what is wrong with infix?The big problem is that infix operators have properties such as precedence and associativity. This makes working out what an infix expression actually means harder than it should be. For example, multiplication has a higher precedence or priority than addition and this means that: 2+3*4 isn't 2+3 all times 4 which it would be in a strict left to right reading. In fact the expression is 3*4 plus 2 and you can see that evaluating an infix expression often involves reordering the operators and their operands. Then there is the small matter of needing brackets to make some infix expressions clear. For example: (2+3)*(4+5) cannot be written without brackets because: 2+3*4+5 means 3*4 plus 2 plus 5. The order that you have to evaluate operators is something that takes a long time to learn. It is responsible for beginners are arithmetic getting the wrong answer even when they do the actual operations correctly and it gives rise to the need to remember mnemonics like BODMAS - Brackets Of Division, Multiplication, Addition, Subtraction. But there are other ways to write operator notation after all infix notation is just one possible "little language" that you can add to a bigger language. Prefix or Postfix NotationThe two best known alternatives are where you write the operator before or after its operands - known as prefix or postfix notation. Polish logician Jan Łukasiewicz, invented (prefix) Polish notation in the 1920s - hence it is only natural that postfix notation is generally referred to as Reverse Polish Notation or RPN. The only real difference between the two notations is the direction that you read them - left to right or right to left - so lets focus on RPN or postfix. Reverse Polish Notation is where the operator is written after its operands. For example, AB+ is reverse Polish for A+B. One immediate advantage of reverse Polish is that it does generalise to n-adic operators where infix notation is really stuck working with two operands - i.e. it is by its very nature only suitable for binary operations.. For example, ABC@ is a reverse Polish expression using the triadic operator that finds the maximum of A,B and C. In this case the operator acts on the three operands to its left and it translates to a function call something like @(A,B,C). If you try to write the @ operator as an infix operator e.g. A@B C or something similar you will see it just doesn't work. Reverse Polish has another advantage in that operator priorities can be represented by the order that they occur - you never need a bracket to represent an RPN expression although they can be incorporated as operators to make conversion between infix and RPN easier. For example, AB+C* is clearly (A+B)*C because the multiplication cannot be evaluated until the addition is evaluated to provide the second operand for the multiplication. That is if you evaluate A B + C * one operator at a time it goes:

or with numbers to make it clearer:

In RPN an operator looks just like a function which takes as arguments the two things written to its left. If you know about lambda expression this will seem very familiar. Stack MachinesRPN is also a natural notation to use in programming languages because its evaluation corresponds to stack based evaluation - you hardly need any syntax analysis to evaluate RPN. For example, in RPN the expression 2+3*4 would be written 2, 3, 4*,+ and it can be evaluated simply by scanning from left to right and pushing values on the stack. Each time you hit an operator you pop the top two items from the stack, apply the operator and push the result back on the stack. When you get to the end of the RP expression the result is on the top of the stack. For example:

Try the algorithm out and it and you will discover it works no matter how complicated the arithmetic expression is. RPN and stacks are closely related and as you have just seen you can use a stack to evaluate an RPN expression. What is less obvious is that you can use a stack to go the other way and convert standard an operator infix, expressions to RPN. That is you can feed an infix expression into a stack using some simple rules and then read out the equivalent RPN expression. HardwareBack in the days when hardware was more expensive it was thought to be a good idea to get people to work directly in RPN. You could and still can buy calculators that work in RPN notation. To add 2 and 3 you enter 2, then 3 and press the plus button. At first it seems messy and difficult to remember to enter the operands first and then the operator but after a while people become almost addicted to this way of thinking and can't understand why the rest of us insist of the silly infix notation that is so complex and so limited. Burroughs even built a mainframe computer that didn’t have any other type of working memory than a stack. Everything it did was effectively in RPN applied to the central stack. Every thing that the machine could do i.e. all of its op codes were regarded as RPN operators that operated on the top n items on the stack. For example, the Return operator took the return address of the top of the stack and so on. The stack machine architecture was simple but not fast enough to compete with more general architectures. Many however still regret the passing of this most simple and elegant approach to computing where every program was an RPN expression. For a while there were RPN calculators, and some people still prefer them, and there were stack-oriented RPN languages such as Forth - again a language little used today but one that still produces a reaction from its users past and even present.

So what about the RPN sausage?

Well if you regard the sausage as the operator then infix notation would put it between the two slices of bun as per a normal sausage sandwich. In RPN it would be placed on the right of the two slices of bun ready to operate on them by jumping between them when evaluated. Now we come to the difficult part - the mustard. The mustard is shown as applied to the sausage, i.e. it is already evaluated as a unary operator. There is an argument that says that the mustard should also be shown as unevaluated, and hence should be drawn to the right of the sausage... but perhaps this is taking the joke to the point that too large a stack is needed to evaluate it. Related ArticlesStack architecture demystified The LIFO Stack - A Gentle Guide

Comments

or email your comment to: comments@i-programmer.info To be informed about new articles on I Programmer, sign up for our weekly newsletter, subscribe to the RSS feed and follow us on Facebook or Linkedin.

|

| Last Updated ( Sunday, 22 June 2025 ) |