Tesla Autopilot Easily Confused By Phantom Images

Written by Mike James

Sunday, 25 October 2020

Security researchers have demonstrated that Tesla's self-driving software can easily be fooled into treating brief projections of objects as real, causing it to behave incorrectly. This is worrying, but how worrying?

Self-driving cars: your response is probably either "where are they?" or "keep them off my roads". If you know your AI, you will almost certainly understand the size of the problem and why companies have been trying to perfect the technology for years. Google, in the form of Waymo, has been working on self-driving since 2009 and is only now letting it out onto a fairly restricted and highly mapped area. It is the only company to operate a public service without a backup safety driver: a taxi service in Chandler, Arizona.

At the same time, Tesla is offering a beta of its Full Self Driving (FSD) software. However, this is a strange meaning of "Full Self Driving", as Tesla warns that it needs human supervision and “it may do the wrong thing at the worst time.” It seems closer to the truth to say that this is just an upgrade to Tesla Autopilot, not full self-driving. By calling it self-driving, Tesla is encouraging its misuse and so gaining free beta testing of software in which bugs could kill. No doubt "needs human supervision" will be the get-out clause when something bad happens.

Now to the attempts to make something bad happen. There is a long version of this story in which many different things were projected or flashed in front of a Tesla and a Mobileye 630 in an attempt to confuse them. For example, a road sign was shown for a few seconds on a digital billboard and Tesla's Autopilot slammed on the brakes. If you watch the video demo, you will likely be horrified that the system can be fooled so easily. The full paper has many other examples, which are slightly horrifying if you have any imagination. The results, however, shouldn't come as a surprise.
The reason is that both systems avoid using Lidar. In fact, Elon Musk says that anyone who uses Lidar is on to a loser. However, if you rely on a standard 2D camera then, unless you opt for two-camera (stereo) parallax depth perception, you are almost certain to end up with a system that can be fooled by 2D images. The paper suggests training neural networks to tell real objects from projected images using reflected light, context, surface and depth. A 3D-based sensor approach would be better. After all, you are trying not to collide with anything, and being 100% sure how far away everything is from the car seems like a no-brainer.
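To make the depth argument concrete, here is a minimal Python sketch of how a stereo pair could expose one kind of phantom. It is not the paper's countermeasure, which uses trained neural networks. It assumes a calibrated, rectified two-camera rig, where the standard pinhole relation depth = focal length × baseline / disparity holds. The function names, the 700-pixel focal length, the 12 cm baseline, the disparity values and the 0.2 threshold are all hypothetical, chosen only for illustration. The idea is that an upright object sits at roughly one depth over its whole height, while an image projected onto the road recedes with the road surface. A single camera produces no disparity at all, so this check is simply unavailable to it.

```python
from statistics import median


def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Pinhole stereo model: depth Z = f * B / d, in metres.

    focal_px: focal length in pixels; baseline_m: distance between the two
    cameras in metres; disparity_px: horizontal shift of the same point
    between the left and right images, in pixels.
    """
    if disparity_px <= 0:
        # Zero disparity means the point is effectively at infinity (or unmatched).
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def looks_painted_on_ground(row_disparities, focal_px, baseline_m, max_rel_spread=0.2):
    """Hypothetical plausibility check for a detected object.

    row_disparities holds one disparity per image row of the detection,
    bottom to top. An upright object stays at roughly one depth, whereas a
    projection on the road gets steadily further away up the image.
    Returns True when the relative depth spread exceeds max_rel_spread.
    """
    depths = [depth_from_disparity(focal_px, baseline_m, d) for d in row_disparities]
    spread = (max(depths) - min(depths)) / median(depths)
    return spread > max_rel_spread


# Assumed rig: 700 px focal length, 12 cm baseline (illustrative values only).
FOCAL_PX, BASELINE_M = 700.0, 0.12

upright_person = [8.4, 8.4, 8.3, 8.4]       # about 10 m away at every row
projected_person = [12.0, 10.0, 8.4, 7.0]   # 7 m at the bottom, 12 m at the top

print(depth_from_disparity(FOCAL_PX, BASELINE_M, 8.4))
print(looks_painted_on_ground(upright_person, FOCAL_PX, BASELINE_M))
print(looks_painted_on_ground(projected_person, FOCAL_PX, BASELINE_M))
```

With these assumed numbers the upright figure's depth varies by about 1% across its height and passes. The road projection spans 7 m to 12 m and is flagged. Note that this only targets phantoms projected onto the road. An image shown on an upright billboard has the same depth profile as a real sign, which is where cues such as context and reflected light would have to take over.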

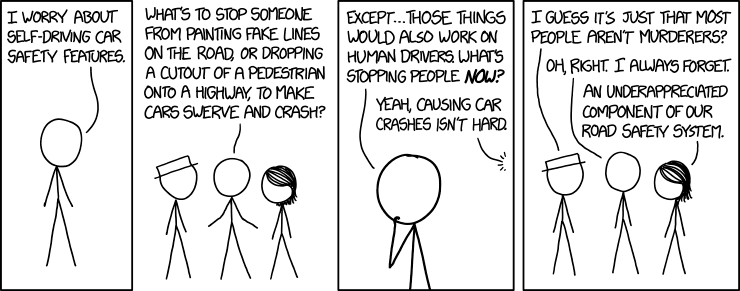

Of course xkcd got to the same conclusion centuries ago!

Then we come to the issue of whether or not these results prove that a 2D-based self-driving system is inherently worse than a human. Most of the time driving is relatively easy: keep the car between the lines and try not to run into the back of the vehicle in front. Just occasionally something strange and out of the ordinary happens: an animal jumps out into the road, a parked car suddenly pulls out into the lane, and so on. Humans deal with this sort of thing, and self-driving systems should find it easy too. Then there are the really weird things: a plastic bag suddenly blows up and for a split second looks like a person, the sun shines in your eyes and impairs your vision, an advertising hoarding suddenly lights up and you take your eyes off the road. I'm sure that if some of these phantoms were flashed in front of me for a split second, I'd brake hard too, if only out of caution.

And yes, that is Elon.

Self-driving systems are going to kill people and there will be a backlash. What matters is how many people, and what the failures are. I don't think that the ability to fool a car by projecting 2D images onto the road needs to detract from the technology's successes or add to its failures. What we need are laws that make doing something like this illegal. Attacking a life-critical AI should be treated the same as attacking the life in question.

Last Updated (Monday, 26 October 2020)