| Kinect's AI breakthrough explained |

| Written by Harry Fairhead |

| Saturday, 26 March 2011 |

Update 30 March 2011Notice that the algorithm described is the one used by current Kinect devices to perform body tracking. The work was done before the Kinect was launched and it accounts for its excellent performance at body tracking - that is it doesn't represent a future update to the Kinect. The NITE software supplied by PrimeSense SDK doesn't use the same approach as the Microsoft body tracking software and because of this it doesn't have the same desirable characteristics.

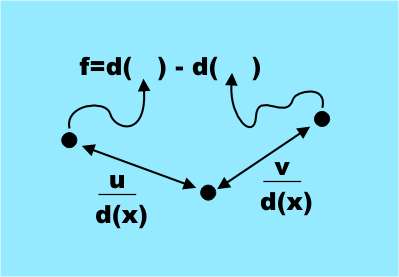

Microsoft Research has recently published a scientific paper and a video showing how the Kinect body tracking algorithm works - it's almost as amazing as some of the uses the Kinect has been put to! There are a number of different components that make Kinect a breakthrough. Its hardware is well designed and does the job at an affordable price. However once you have stopped being amazed by the fast depth measuring hardware your attention then has to fall on the way that it does body tracking. In this case the hero is a fairly classical pattern recognition technique but implemented with style. There have been body tracking devices before but the big problem that they suffer from is that they need the user to stand in a calibration pose so that they can be located by the algorithm using simple matching. From this point on the algorithm uses a tracking algorithm to follow the movement of the body. The basic idea is that if you have an area identified as an arm in the first frame then in the next frame the arm can't have moved very far and so you simply attempt to match areas close to the first position. Tracking algorithms are good in theory but in practice they fail if the body location is lost for any reason and they are particularly bad at dealing with other objects that obscure the person being tracked even for a brief moment. In addition tracking multiple people is difficult and once a track of a body is lost it can take a long time to reacquire if it is possible at all. So what did Microsoft Research do about this problem to make the Kinect work so much better? They went back to first principles and decided to build a body recognition system that didn't depend on tracking but located body parts based on a local analysis of each pixel. Traditional pattern recognition works by training a decision making structure from lots of examples of the target. In order for this to work you generally present the classifier with lots of measurements of "features" which you hope contain the information needed to recognise the object. In many cases it is the task of designing the features to be measured that is the difficult task. The features that were used might surprise you in that they are simple and it is far from obvious that they do contain the information necessary to identify body parts. The features are all based on a simple formula: f=d(x+u/d(x))-d(x+v/d(x)) where (u,v) are a pair of displacement vectors and d(c) is the depth i.e. distance from the Kinect of the pixel at x. This is a very simple feature it is simply the difference in depth to two pixels offset from the target pixel by u and v.

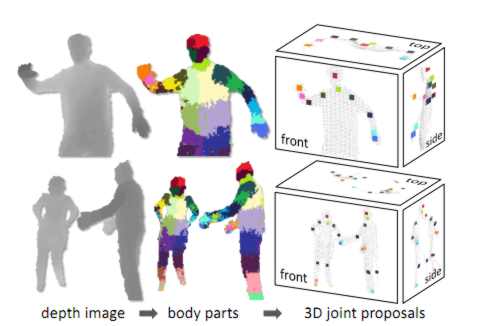

The only complication is that the offset is scaled by the distance of the target pixel i.e. divided by d(x). This makes the offset depth independent and scales them with the apparent size of the body. It is clear that these features measure something to do with the 3D shape of the area around the pixel - that they are sufficient to tell the difference between say an arm or a leg is another matter. What the team did next was to train a type of classifier called a decision forest, i.e. a collection of decision trees. Each tree was trained on a set of features on depth images that were pre-labeled with the target body parts. That is the decision trees were modified until they gave the correct classification for a particular body part across the test set of images. Training just three trees using 1 million test images took about a day using a 1000 core cluster. The trained classifiers assign a probably of a pixel being in each body part and the next stage of the algorithm simply picks out areas of maximum probability for each body part type. So an area will be assigned to the category "leg" if the leg classifier has a probability maximum in the area The final stage is to compute suggested joint positions relative to the areas identified as particular body parts. In the diagram below the different body part probability maxima are indicated as colored areas:

Notice that all of this is easy to calculate as it involves the depth values at three pixels and can be handled by the GPU. For this reason the system can run at 200 frames per second and it doesn't need an initial calibration pose. Because each frame is analysed independently and there is no tracking there is no problem with loss of the body image and it can handle multiple body images at the same time. Now that you know some of the detail of how it all works the following Microsoft Research video should make good sense: (You can also watch the video at: Microsoft Research) The Kinect is a remarkable achievement and it is all based on fairly standard classical pattern recognition but well applied. You also have to take into account the way that the availability of large multicore computational power allows the training set to be very large. One of the properties of pattern recognition techniques is that they might take ages to train but once trained the actually classification can be performed very quickly. Perhaps we are entering a new golden age when at last the computer power needed to make pattern recognition and machine learning work well enough to be practical. More information:All about Kinect - an in depth exploration of its technology and a showcase of its uses Getting Started with PC Kinect Using the Kinect gets much easier If you would like to be informed about new articles on I Programmer you can either follow us on Twitter, on Facebook, Digg or you can subscribe to our weekly newsletter.

|

| Last Updated ( Wednesday, 30 March 2011 ) |