KinectFusion - instant 3D models

Written by Harry Fairhead

Wednesday, 28 September 2011

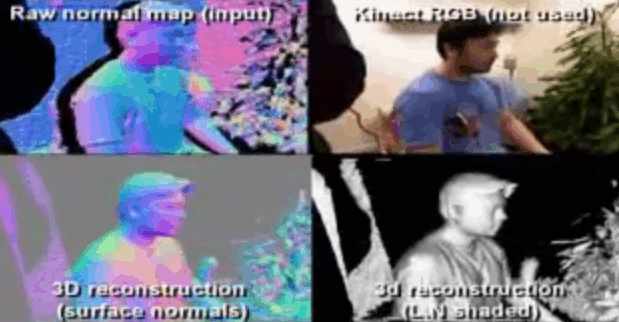

KinectFusion is another amazing use of everyone's favourite input device, but this one takes it a step beyond reality. It can map out the local space to create a 3D model that can be used within virtual environments. It's a way of mixing the real and the virtual.

We reported on KinectFusion over a month ago (this news item is an update on the original) and since then the team has been hard at work explaining its ideas. Now we have a new video that shows more clearly how it all works. The polish of the video probably has something to do with the fact that Microsoft Research is celebrating 20 years of creating things to amaze and amuse.

The new video is worth watching because it shows in detail how the 3D model is built up, but the original, lower quality, video is still worth looking at because it shows how KinectFusion can be used creatively, if you can call throwing virtual goo over someone creative!

As well as relying on the Kinect's abilities, KinectFusion is the result of a convergence of GPU computing power and some clever algorithms implemented by Microsoft Research and presented at this year's SIGGRAPH. The paper has yet to appear anywhere, so the fine details of how it all works are still unclear. The team has promised more detail in papers to be presented next month at the UIST Symposium in Santa Barbara, California, and at ISMAR in Basel, Switzerland.

What is going on in the video is that a 3D model is being built in real time from the Kinect depth stream. A whole room can be scanned in a few seconds, and new sections are added to the model as the Kinect is pointed at new areas of the room.

Slowly but surely, as the Kinect views the room, a complete 3D model is built up. The movement of the Kinect is also important because as it moves it "sees" different areas of the room and fills in the depth details that were hidden from its previous point of view. Notice that this isn't trivial: to unify scans taken from different points of view, you have to know where the camera is pointing. The method used is called iterative closest point (ICP), which merges the data from multiple scans and works out the orientation of the camera by comparing time-adjacent frames. As you watch later on, notice how objects can be moved, added or removed and the model is adjusted to keep the representation accurate.
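To make the idea of ICP concrete, here is a minimal sketch of the point-to-point variant in two dimensions, written in plain Python. It is not the KinectFusion implementation, whose details have not been published; the function names (`rigid_transform`, `best_rigid_fit`, `icp_2d`), the brute-force nearest-neighbour search and the 2D setting are all assumptions made for illustration. Each pass pairs every point in the new scan with its closest point in the previous scan, solves for the rotation and translation that best align the pairs, and repeats.

```python
import math

def rigid_transform(points, theta, tx, ty):
    """Rotate 2D points by theta (radians), then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]

def nearest(p, cloud):
    """Brute-force closest point in cloud to p (illustrative, O(n))."""
    return min(cloud, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)

def best_rigid_fit(src, dst):
    """Least-squares rotation and translation mapping src[i] onto dst[i]."""
    n = len(src)
    scx = sum(p[0] for p in src) / n
    scy = sum(p[1] for p in src) / n
    dcx = sum(q[0] for q in dst) / n
    dcy = sum(q[1] for q in dst) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - scx, py - scy      # centred source point
        bx, by = qx - dcx, qy - dcy      # centred target point
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)       # optimal rotation angle in 2D
    c, s = math.cos(theta), math.sin(theta)
    tx = dcx - (c * scx - s * scy)       # translation aligns the centroids
    ty = dcy - (s * scx + c * scy)
    return theta, tx, ty

def icp_2d(source, target, iterations=20, tol=1e-12):
    """Estimate the rigid motion that aligns source with target."""
    theta, tx, ty = 0.0, 0.0, 0.0
    current = list(source)
    for _ in range(iterations):
        matches = [nearest(p, target) for p in current]
        d_theta, d_tx, d_ty = best_rigid_fit(current, matches)
        current = rigid_transform(current, d_theta, d_tx, d_ty)
        # Compose the incremental motion with the running estimate.
        c, s = math.cos(d_theta), math.sin(d_theta)
        tx, ty = c * tx - s * ty + d_tx, s * tx + c * ty + d_ty
        theta += d_theta
        if abs(d_theta) < tol and math.hypot(d_tx, d_ty) < tol:
            break
    return theta, tx, ty, current
```

Calling `icp_2d(new_scan, previous_scan)` returns the estimated rotation and translation along with the aligned points. The nearest-neighbour pairing is only reliable when the motion between scans is small, which is one reason for comparing time-adjacent frames.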

The model that is built up is described as volumetric rather than a wireframe model. This is said to have advantages because it contains predictions of the geometry. Exactly what this means is difficult to determine without the paper describing the method in detail. However, it seems likely that the depth measurements are being used to create a point cloud that provides the local geometry by way of smoothing of some kind. From the second video you can see that the algorithm estimates the surface normals and that these are used as part of the familiar rendering algorithms.
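One common way to estimate surface normals from a depth image is sketched below in plain Python. This is an assumption about how such a step could work, not the method used by the KinectFusion team. It back-projects a pixel and its right and lower neighbours into 3D using a pinhole camera model, then takes the cross product of the two edge vectors. The function names and the intrinsic parameters `fx`, `fy`, `cx`, `cy` are hypothetical and would need to come from a real camera calibration.

```python
import math

def back_project(u, v, depth, fx, fy, cx, cy):
    """Pinhole model: pixel (u, v) at the given depth to a 3D camera-space point."""
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)

def normal_at(depth_map, u, v, fx, fy, cx, cy):
    """Unit normal at pixel (u, v), oriented back toward the camera (-z)."""
    p = back_project(u, v, depth_map[v][u], fx, fy, cx, cy)
    p_right = back_project(u + 1, v, depth_map[v][u + 1], fx, fy, cx, cy)
    p_down = back_project(u, v + 1, depth_map[v + 1][u], fx, fy, cx, cy)
    a = tuple(r - q for r, q in zip(p_right, p))   # edge along the image row
    b = tuple(d - q for d, q in zip(p_down, p))    # edge along the image column
    # Cross product b x a; this order makes a camera-facing wall give (0, 0, -1).
    n = (b[1] * a[2] - b[2] * a[1],
         b[2] * a[0] - b[0] * a[2],
         b[0] * a[1] - b[1] * a[0])
    length = math.sqrt(n[0] ** 2 + n[1] ** 2 + n[2] ** 2)
    return (n[0] / length, n[1] / length, n[2] / length)
```

For a flat wall facing the camera, every pixel has the same depth and the normal comes out pointing straight back along the viewing axis. Real depth data is noisy, so a practical system would smooth the depth map or average over a larger neighbourhood before taking differences.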

What is clear from the video is that the model works well enough to allow it to interact with particle systems and textured rendering. The key to the speed of the process is the use of the computational capabilities of the GPU. Without the video you might think that a real-time model was a solution in search of a problem, but as you can see it already has lots of exciting applications. The demonstration of drawing on any surface wouldn't be possible without a model of where those surfaces are, and the same is true of the multi-touch interface, the augmented reality and the real-time physics in the particle systems. Which of these applications you find most impressive depends on your interests, but I was particularly intrigued by the ability to throw virtual goo over someone. Why, I'm not sure... Watch the original video and dream of what you could do with a real-time 3D model...

Further reading:

- Getting started with Microsoft Kinect SDK
- Kinect goes self aware - a warning: we think it's funny and something every Kinect programmer should view before proceeding to create something they regret!
- Avatar Kinect - a holodeck replacement
- Kinect augmented reality x-ray (video)


Last Updated (Friday, 30 September 2011)