Take Stanford's Natural Language Understanding For Free

Written by Nikos Vaggalis

Friday, 04 March 2022

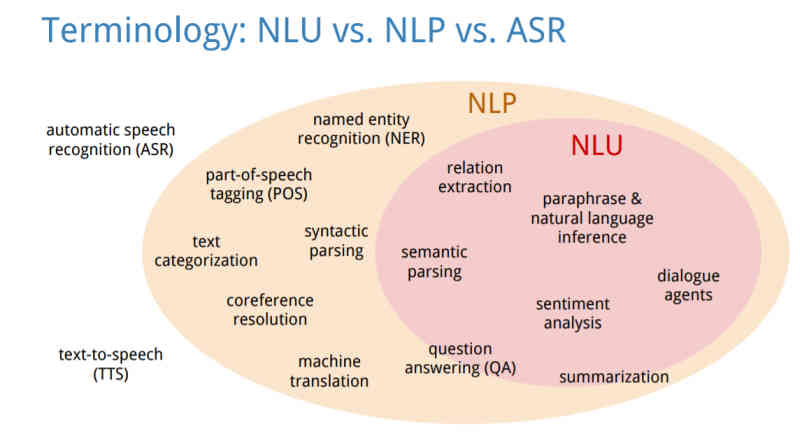

The content of Stanford University's CS224u Natural Language Understanding has been made available to anyone, for free, in a self-paced version. A few months ago I covered its sister course, CS224n Natural Language Processing with Deep Learning. What is the difference between CS224n and CS224u, you ask? The answer lies in the following diagram:

As you can see, NLU is a subset of NLP, and it might be considered a prerequisite before tackling NLP. As per Wikipedia's definition:

"Natural-language understanding (NLU) or natural-language interpretation is a subtopic of natural-language processing in artificial intelligence that deals with machine reading comprehension. There is considerable commercial interest in the field because of its application to automated reasoning, machine translation, question answering, news-gathering, text categorization, voice-activation, archiving, and large-scale content analysis."

Specifically, NLU is used today in products like

This includes Amazon Alexa, Google Home Assistant, Cortana and Siri, to name just a few implementations. In this course, students will learn how to develop systems and algorithms for the robust machine understanding of human language, and specifically:

The online version is adapted from the Spring 2021 course, which was taught on campus by an all-star teaching team spearheaded by Professor Christopher Potts. Besides the lectures, it is heavy on projects and homework. The course begins by covering a wide range of models for distributed word representation and then moves on to topics like relation extraction, natural language inference, and grounded language understanding; important topics which highlight many of the central concepts in NLU.

NLU has its roots in the 1960s, when it was primarily utilized by the ELIZA program. If you don't know about ELIZA, see this account of "her" development and conversational output. Subsequent decades have witnessed ups and downs in the field, climaxing in 2011 with IBM Watson, a state-of-the-art NLU question-answering system, winning Jeopardy. Watson drew on vast amounts of data and did all sorts of clever things, like parsing and distributional analysis of data, to understand the question in a human way. The computing power necessary to pull something like that off has decreased with time, going from a supercomputer to a mediocre laptop, something that opened the way to the huge advancements that are happening right now; see GPT-3.

Dr. Potts explains that when you do a Google search, various parts come into play:

"When you do a search into Google, you're not just finding the most relevant documents, but rather the most relevant documents as interpreted with your query in the context of things you search for where they take your query and do their best to understand what the intent behind the query is."

The magic words here are context and intention. The computer should understand both of them in order to return an acceptable result. And this is what you will learn in taking CS224u. As such, the complete syllabus:

Overall, it's a very intriguing course that begins with the history of NLU, showcases the crossover with NLP, teaches its practical applications in areas like business intelligence, speech recognition or text summarization, from which both businesses and consumers benefit, and looks at the advancements that the future will hold. The full course material, including notes, can be found on the official course website, while the recorded lectures can also be found as a complete YouTube playlist.

More Information

CS224U: Natural Language Understanding

Related Articles

Take Stanford's Natural Language Processing with Deep Learning For Free
