| Taking The Shake Out Of GoPro |

| Written by Mike James |

| Tuesday, 12 August 2014 |


Microsoft Research has outlined a method for taking the shake out of first-person videos and condensing them so that they are actually interesting.

SIGGRAPH is currently taking place and lots of amazing graphics and imaging techniques are on display. Microsoft has one that takes the shake out of the sort of video captured by point-of-view cameras such as the GoPro or even Google Glass, after it has been taken. You don't need a Steadicam or any sort of stabilization in the camera; the whole process happens as a post-processing stage.

The problem is that first-person videos tend to be long and nothing much happens for most of the time. You could get a summary of the action by speeding up the playback, but this amplifies the effect of the camera shake and makes the whole thing unwatchable for a different reason.

What the Microsoft team has done is to find a way to speed up the video and remove the camera shake so as to produce a smooth, watchable video, called a hyperlapse. Take a look at the result:

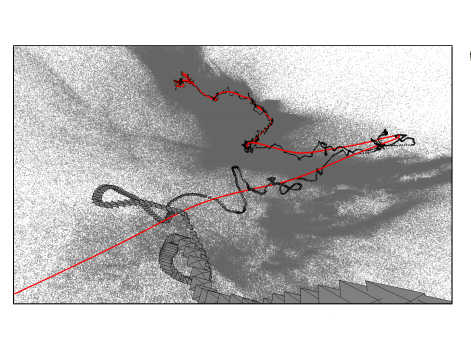
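To see why a naive speed-up makes the shake worse, here is a minimal sketch in Python. It is not taken from the Microsoft work: the sinusoidal "walking bob", its 15-frame period and the choice of keeping every 7th frame are all assumptions made purely for illustration. It compares the largest frame-to-frame jump in camera height before and after frames are dropped.

```python
import math


def bob(frame, period=15.0, amplitude=1.0):
    """Vertical camera offset for a hypothetical walking bob.

    One full up-and-down cycle takes `period` frames (an assumed value).
    """
    return amplitude * math.sin(2.0 * math.pi * frame / period)


def max_jump(offsets):
    """Largest change in offset between consecutive frames as played back."""
    return max(abs(b - a) for a, b in zip(offsets, offsets[1:]))


# Camera height for 300 frames of the original footage.
original = [bob(f) for f in range(300)]

# Naive speed-up: keep every 7th frame and throw the rest away.
sped_up = original[::7]

jump_original = max_jump(original)
jump_sped_up = max_jump(sped_up)

print(f"largest jump at normal speed: {jump_original:.2f}")
print(f"largest jump after speed-up:  {jump_sped_up:.2f}")
```

In the original footage the bob is spread over many frames, so each frame differs only slightly from the last. Once most frames are dropped, consecutive frames can land on opposite extremes of the bob, which is the jarring shake the article describes.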

So how is it done? The answer sounds like overkill. First the algorithm reconstructs the 3D path of the camera. It uses structure from motion to create a sparse 3D point cloud of the objects seen in each frame; identifying the same objects in different frames allows the camera position for each frame to be worked out. Because of the typically erratic movement of a first-person camera, the resulting path is usually very irregular. The next step is to find a smooth path that is as close as possible to the real path. The virtual camera not only has to move along a path that is close to the real path, it also has to point in a direction that is close to that of the real camera.
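The idea of finding a smooth path that stays close to the real one can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the optimization used in the paper: it handles camera position only (not viewing direction), it uses a simple quadratic trade-off between closeness and smoothness, and the names `smooth_path`, `roughness` and the `smoothness` weight are hypothetical.

```python
import math


def smooth_path(path, smoothness=25.0, sweeps=500):
    """Smooth a list of (x, y, z) camera positions.

    Minimises  sum |p_i - c_i|^2 + smoothness * sum |p_{i+1} - p_i|^2
    over the interior points, where c_i are the recovered positions.
    The two end points are held fixed (a simplification made here).
    """
    smoothed = [list(p) for p in path]
    for _ in range(sweeps):
        # Gauss-Seidel sweep: each interior point moves to the weighted
        # average of its recovered position and its current neighbours.
        for i in range(1, len(path) - 1):
            for axis in range(3):
                neighbours = smoothed[i - 1][axis] + smoothed[i + 1][axis]
                smoothed[i][axis] = (path[i][axis] + smoothness * neighbours) / (
                    1.0 + 2.0 * smoothness
                )
    return [tuple(p) for p in smoothed]


def roughness(path):
    """Sum of squared position changes between consecutive path points."""
    return sum(
        (b[axis] - a[axis]) ** 2
        for a, b in zip(path, path[1:])
        for axis in range(3)
    )


# Made-up shaky path: steady forward motion along z plus sway (x) and bob (y).
shaky = [
    (0.05 * math.sin(1.3 * i), 0.10 * math.sin(0.9 * i), 0.5 * i)
    for i in range(60)
]
smooth = smooth_path(shaky)

print(f"roughness before: {roughness(shaky):.3f}")
print(f"roughness after:  {roughness(smooth):.3f}")
```

Raising `smoothness` gives a steadier path that strays further from the recovered positions; lowering it does the opposite. The real system has to balance more than this, since the new viewpoints must also stay close enough to the input frames for them to be rendered convincingly.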

Each frame then has a proxy geometry computed, and this is used to render the frames from the new viewpoints on the optimized path. The final video is created by taking multiple input frames and stitching, blending and rendering them to the new viewpoint. The following video gives a good overview of the method and how it all fits together:

You can read the details in the full paper, and I admit that I have left out a lot of the subtle steps that make the final hyperlapse videos work so well. What is truly amazing is the amount of computation that goes into this process. It takes an hour of computation to perform the structure from motion for a batch of 1400 video frames. Even so, the team hope to optimize the computation and have plans for a Windows app. So if you have a GoPro, or a similar first-person camera, and want people to watch your otherwise boring videos, then you need to wait for this app. My guess is that some of them will still turn out to be boring after they have been hyperlapsed!

More Information

First-person Hyper-lapse Videos (pdf)

Related Articles

Google - We Have Ways Of Making You Smile

Blink If You Don't Want To Miss it

Almost Still Photos - Free Cliplet App From MS Research

New Algorithm Takes Spoilers Out of Pics

