Better Than Floating - New Number Format Avoids Imprecision

Written by Mike James

Friday, 06 May 2016


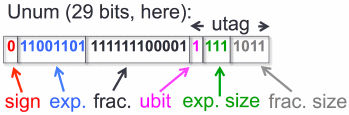

It is well known that the way computers do arithmetic isn't the same way we do arithmetic, but if you thought that IEEE 754 floating point was the last word then you need to think again. A new format called unum saves bits in all senses of the word and promises better precision.

There was a time when this was a real problem. Knowing how to do arithmetic on a computer was a tough call: integer, fixed point, BCD or floating point. Slowly but surely floating point proved the best option for general and scientific number crunching. What is more, the official IEEE 754 standard not only made floating point the format we all use, it also fixed one specific set of format choices as the one we use. Standardisation also resulted in floating point hardware becoming the norm rather than an optional extra. Only small IoT-style processors lack a floating point unit these days.

However, floating point has some problems. We all know that if you take the difference between two numbers that are numerically close, the result can be no better than noise. For example, 1.000 007 - 1.000 006 gives 1.000 000×10⁻⁶. This looks fine, but notice that we now have a number that seems to claim accuracy down to 10⁻¹², when in fact all of the zeros are generated by digits that were not in the original pair of numbers. The zeros are really "don't know" values, but they are presented as full-precision values.

The unum format, explained by John Gustafson in a recent interview in ACM Ubiquity magazine, aims to solve this sort of problem by keeping track of, and varying, the number of bits of precision used. The name stands for universal number. It is still a floating point number, but it has some additional fields: the ubit, an exponent-size field (exp.size) and a fraction-size field (frac.size).
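The spurious zeros in the subtraction example can be reproduced with Python's standard library. This is only an illustration of the problem, not part of unum: it uses the `decimal` module, which keeps the exponent of its operands and so reports how many digits the inputs actually support, and then formats the same value the way a fixed-width floating point display would.

```python
from decimal import Decimal

a = Decimal("1.000007")
b = Decimal("1.000006")

# Decimal subtraction keeps the operands' exponent (-6), so the result
# carries only the digits the inputs can actually justify.
diff = a - b
print(diff)                            # 0.000001
print(len(diff.as_tuple().digits))     # 1 significant digit

# A fixed 7-significant-digit display pads the same value with zeros
# that no input digit ever supported.
print(f"{float(diff):.6e}")            # 1.000000e-06

# Binary doubles cannot store 1.000007 or 1.000006 exactly, so the plain
# float difference is not exactly 1e-6 either.
float_diff = 1.000007 - 1.000006
print(float_diff == 1e-6)              # False
```

Nothing in the printed `1.000000e-06` tells the reader that only its first digit means anything, which is exactly the information the ubit is meant to carry.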

The exp.size and frac.size fields give the number of bits dedicated to the exponent and the fraction, so the size of a unum can vary from value to value. The really important field, however, is the ubit, which records whether the number is exact. In Gustafson's design it is 0 if the number is exact and 1 if it is inexact, meaning that the true value lies somewhere between this number and the next representable one.

Consider the subtraction example given earlier. If both numbers are marked as exact, then the zeros that are generated are real and deserve to be used in further calculations. If the numbers are inexact, the extra precision isn't warranted: the result should show only 1 significant digit, and the fraction bits can be reduced.

Similarly, unums are designed to avoid overflow and underflow. Numbers are represented with enough precision and enough dynamic range, and the number of exponent bits is adjusted to keep the result of arithmetic representable. Of course, the system can still run out of space to store the number, but in that case the result is the largest representable number marked as inexact, meaning "bigger than this", rather than a special marker such as infinity.

The use of variable exponent and fraction sizes isn't as revolutionary as the idea of marking values as exact or inexact. This single feature means that one unum can cover a range of values simply by stating that it is inexact. For example, the exact unum 0 is just the point 0 on the number line, but the inexact 0 represents all values strictly between 0 and the next representable number, for example all values between 0 and 1. In other words, unums include interval arithmetic.

There is a lot more detail in the interview, but it is claimed that unums get right all of the difficult problems that floats get wrong. Gustafson also claims that so far he has been unable to find a problem that breaks unum math. This is a strong claim - can you find one? When asked when unums would find their way into computer languages, his reply was: "The Python port is already done, and several groups are working on a C port. Because the Julia language is particularly good at adding new data types without sacrificing speed, several people jumped on making a port to Julia, including the MIT founders of that language. 
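The exact/inexact idea can be sketched with exact rational bounds instead of bits. The Python below is a simplified toy, not Gustafson's unum encoding and not the API of any of the ports mentioned: the class `UValue`, its `point` and `between` constructors and the `MAXREAL` limit are all hypothetical. An exact value is a single point; an inexact value is the open interval between a number and the next representable one, which is how setting the ubit turns a single number into a range.

```python
from dataclasses import dataclass
from fractions import Fraction
import math

# Hypothetical largest finite value of this toy format (an assumption,
# chosen only to make overflow easy to trigger).
MAXREAL = Fraction(10**6)


@dataclass(frozen=True)
class UValue:
    """Toy model of the ubit idea, not Gustafson's bit-level encoding.

    exact=True  -> the single point lo (lo == hi), like ubit = 0
    exact=False -> the open interval (lo, hi), like ubit = 1
    """
    lo: object   # a Fraction, or MAXREAL after overflow
    hi: object   # a Fraction, or math.inf after overflow
    exact: bool

    @staticmethod
    def point(x):
        x = Fraction(x)
        return UValue(x, x, True)

    @staticmethod
    def between(x, ulp):
        # Inexact value: somewhere strictly between x and the next
        # representable number x + ulp.
        x = Fraction(x)
        return UValue(x, x + Fraction(ulp), False)

    def __add__(self, other):
        return _clamp(self.lo + other.lo, self.hi + other.hi,
                      self.exact and other.exact)

    def __sub__(self, other):
        # Interval subtraction: the lowest result subtracts the highest operand.
        return _clamp(self.lo - other.hi, self.hi - other.lo,
                      self.exact and other.exact)

    def __str__(self):
        if self.exact:
            return f"exactly {self.lo}"
        return f"somewhere in ({self.lo}, {self.hi})"


def _clamp(lo, hi, exact):
    # Instead of rounding to infinity, a result past MAXREAL becomes the
    # inexact interval (MAXREAL, inf): "bigger than anything representable".
    # Negative overflow is omitted to keep the sketch short.
    if hi > MAXREAL:
        return UValue(min(lo, MAXREAL), math.inf, False)
    return UValue(lo, hi, exact)


# Exact inputs: the zeros in the result are genuine.
exact_diff = UValue.point("1.000007") - UValue.point("1.000006")
# Inputs known only to the sixth decimal place: the result is a range.
fuzzy_diff = (UValue.between("1.000007", "0.000001")
              - UValue.between("1.000006", "0.000001"))
# The inexact zero: everything strictly between 0 and 1.
inexact_zero = UValue.between(0, 1)
# Overflow yields "more than MAXREAL", not infinity.
overflow = UValue.point(900_000) + UValue.point(200_000)

for v in (exact_diff, fuzzy_diff, inexact_zero, overflow):
    print(v)
```

With exact inputs the difference is the genuine 1/1000000; with inexact inputs it could be anything between 0 and 2×10⁻⁶, so none of the trailing zeros can be trusted. A real unum implementation would also shrink the exponent and fraction fields to match the information that is left, which this sketch does not attempt.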
I learned of an effort to put unum math into Java and into D, the proposed successor to C++. Depending on the language, unums can be a new data type with overloaded operators, or handled with library calls. And of course, Mathematica is a programming language and the prototype environment in Mathematica is free for the downloading from the CRC Press web site. I'd really like to see a version for Matlab." Gustafson's opinion of the approach is glowing: "I really think that once people try this approach out, they will not want to go back to using just floats. Unums are to floats what floats are to integers. They are the next step in the evolution of computer arithmetic."

More Information

The end of (numeric) error: an interview with John L. Gustafson

Unum Computing: An Energy Efficient and Massively Parallel Approach to Valid Numerics

Related Articles

Let HERBIE Make Your Floating Point Better

CheckCell Detects Bugs In Spreadsheets

MathJS A Math Library For JavaScript

Free Sage Math Cloud - Python And Symbolic Math

Last Updated (Friday, 06 May 2016)