Page Size Matters

Written by Janet Swift

Sunday, 08 January 2012

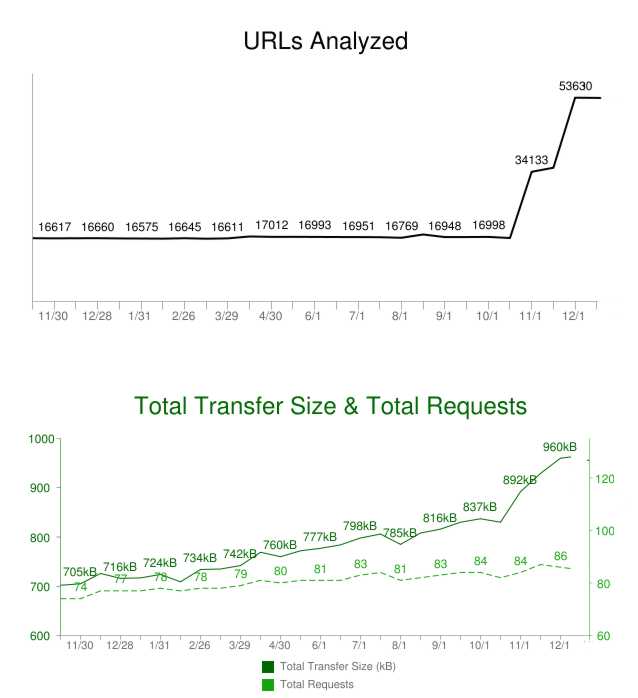

Recent research results have been interpreted as showing web page size suddenly ramping up, which is a cause for concern. Is this the real deal?

The data that led to the headline "Web pages show end of year bulge" on BBC News, a site not known for sensationalism, comes from HTTP Archive, a site that monitors web content and performance. The BBC reported that "average webpage sizes were trending steadily upward throughout 2011 and jumped sharply in October".

Another report on the trends from HTTP Archive concluded that "the average size of a single web page is now 965 kilobytes up 30% from last year's average of 720 KB", pointing out that this presents a problem for mobile surfers who are subject to a data cap.

However, while there is some truth in both these reports, they miss the fact that in November 2011 HTTP Archive changed the basis on which it collects its data. Prior to this date it monitored 18,026 URLs from a variety of sources including Alexa, but it then switched to data from Alexa's top 1,000,000 sites.
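As a quick sanity check on the quoted averages, the year-on-year change can be worked out directly. The sketch below is purely illustrative: the helper name `percent_change` is our own, and the only inputs are the 720 KB and 965 KB figures from the report. Taken at face value, they give a rise closer to 34% than the rounded 30%.

```python
def percent_change(old, new):
    """Relative change from old to new, expressed as a percentage."""
    return (new - old) / old * 100

# Averages quoted in the report (KB); nothing else is assumed.
increase = percent_change(720, 965)
print(f"{increase:.1f}%")  # prints 34.0%
```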


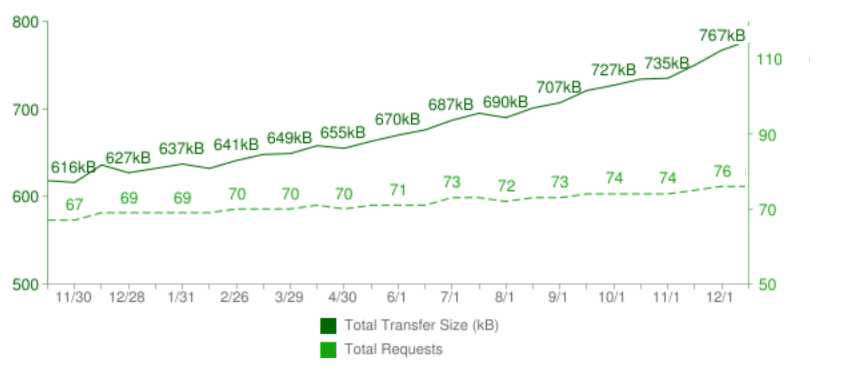

From this pair of charts, the jump in total transfer size at the end of 2011 appears to be an artifact of a much larger number of sites being included in the analysis. To investigate this, look at the trend when we select only the smaller subset of 12,706 sites for which data was collected in every time period: while it shows a steady increase, there is no sharp jump.
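The effect of restricting the analysis to sites present in every period can be illustrated with a small sketch. This is not HTTP Archive's actual method or data: the `crawls` dictionary, the example URLs and the sizes are all made up, and the function names are our own. It only shows how widening the sample can create a jump in the average that disappears when the same sites are compared throughout.

```python
from statistics import mean

# Hypothetical crawl data: {period: {url: total transfer size in KB}}.
# In the second period the sample is widened to include heavier sites.
crawls = {
    "2011-10": {"a.example": 600, "b.example": 700},
    "2011-11": {"a.example": 610, "b.example": 720,
                "c.example": 1500, "d.example": 1400},
}

def average_all(crawls):
    """Average size per period over every site crawled in that period."""
    return {period: mean(sizes.values()) for period, sizes in crawls.items()}

def average_consistent(crawls):
    """Average size per period over only the sites crawled in every period."""
    common = set.intersection(*(set(sizes) for sizes in crawls.values()))
    return {period: mean(sizes[url] for url in common)
            for period, sizes in crawls.items()}

print(average_all(crawls))         # averages 650 then 1057.5: an apparent jump
print(average_consistent(crawls))  # averages 650 then 665: a modest rise
```

With the made-up numbers above, the all-sites average leaps by more than 60% between periods, while the consistent subset grows by only about 2%. The difference comes entirely from the change in sample.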


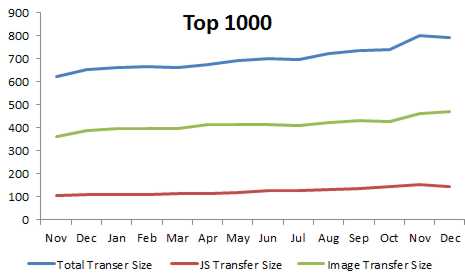

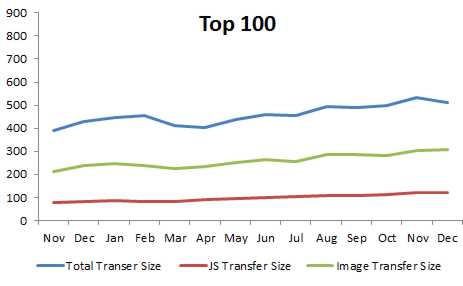

So what accounts for the increase, which works out at around 20% over the year? Looking at the data for JavaScript Transfer Size and Image Transfer Size for the most popular web sites, both increase, and at the same rate as Total Transfer Size. The increase in transfer size is primarily due to larger graphics files.

What is also very noticeable when comparing these two charts is that the Top 100 sites manage to keep total transfer size well below the average page size. So why is there a jump when more websites are included? Well, partly it's chicken and egg. Faster websites are more popular, so when you relax the restriction and widen the net, slower websites are included. Top websites can afford to optimize their performance (or perhaps it is that they can't afford to lose popularity by becoming slower) and so employ teams of web developers to maintain speed and efficiency. Put simply, if you increase the range of your survey to include the less special websites, you have to expect that the technical quality will fall.



Last Updated (Friday, 03 May 2013)