Artificial Intelligence - Strong and Weak

Written by Alex Armstrong

Monday, 04 May 2015

The search for intelligent machines started long before the computer was invented, and AI has many different strands. So many, in fact, that it can be difficult to see what it is trying to do or what it is for. We already have an easy way to create intelligent beings from scratch, so why do we need another one? Perhaps the simplest answer is that we don't understand how biology actually does it, and we do so like to understand. AI is Artificial Intelligence, but most people have difficulty with the idea of “Intelligence”, let alone the idea of trying to create it artificially. There is also the philosophical question of whether it is a good idea even to try, and the economic question of why bother at all.

Weak v Strong AI

The search for intelligent machines started long before the computer was invented, and it has many different strands that can make it difficult to see how it all fits together.

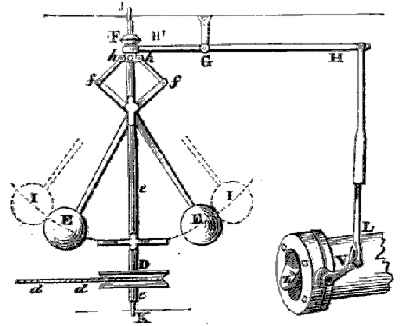

The Watt governor – intelligent control?

Originally machines were devices that simply did work, but even in the days of the steam engine some devices did more than simple work. Consider, for example, a speed regulator, the Watt governor. This consists of two heavy balls on the ends of spinning arms. As the speed increases the balls rise and, via a system of links, turn down the steam supply, so maintaining a constant speed. Clearly this isn’t an intelligent device - it doesn’t think - but without it you need to employ a human to watch the speed and control the steam. Without the governor, a human has a job that needs some intelligence; with it, the solution is just a mechanism. This replacement of intelligence by something that doesn't seem to be quite intelligent is a recurring theme in the history of AI. So in a sense the Watt governor does do a job that would otherwise need intelligence – it is an example of an early “servo” mechanism and the simplest example of what you might call “weak AI”.

You will discover that there are two very general reasons why people get involved in AI. Some take the engineering approach and simply want to create mechanisms that do what humans do – play chess, read addresses, drive cars, recognise speech and so on. This is “weak” AI, and it contrasts with the intent of the “strong” AI enthusiasts, who aim to do nothing less than create a thinking, conscious machine that is the equal of, or better than, a human. At its most extreme, strong AI aims to create an artificial consciousness: something that can say, and really mean in the way that humans do, that it knows it exists and wants to continue to exist. This is, of course, the premise of many a good sci-fi movie. As you can see, the span from simple mechanisms like the Watt governor to a thinking, conscious machine gives AI a huge range, and this is partly why it is so difficult to get to grips with.

The Curse Of Strong AI

One of the biggest problems with AI is that it tends to invent itself out of existence.
For example, if you were back in the days of the early industrial revolution, a steam engine would have seemed amazing, and one that regulated its own speed would have seemed close to miraculous. Of course, once you know how it works the magic simply evaporates. Innocent users did regard steam engines as mechanical beasts, only to be disappointed to discover that they were lifeless mechanisms that did what they were designed to do and no more.

So it is with most areas of AI. When you first hear about some program or machine that does something only humans can do, it seems impressive, intelligent even. For example, when computers play chess they sometimes give their human opponents the feeling that they are thinking about the game. Opponents begin to see patterns of play that reveal an approach to the game, suggesting that there is "someone" rather than something making the moves. From a position of ignorance, computer chess players might be taken as evidence that we have succeeded in making an intelligent thinking machine, but…

When you know how chess-playing programs actually work you KNOW for a fact, a certainty, that they don’t think. You also know that they are about as clever as the Watt governor! This is the curse of strong AI. Whenever you make something work, you know how it works and it no longer seems intelligent. Of course, if your aim in life is weak AI this is no problem, because a chess program still beats the grandmaster even if you know it isn’t doing anything intelligent in any general sense.

It is as if pinning down the butterfly destroys it at once. Intelligence, by its very nature, is something that cannot be understood, not because understanding it is impossible, but because understanding it destroys our perception of it as intelligence.

Logic, Symbols And Searching

There are really two general approaches to the quest for AI. The first is what you might call the “engineering” approach.
This is a state of mind that says that humans do intelligent things by essentially simple methods that can be translated into programs. For example, what happens when you play a simple game such as noughts and crosses? In many cases the most difficult part of playing a game is learning the rules and recognising the pieces! Once you have mastered this, most game play involves evaluating each possible next move. You can make a computer do this very same task simply by writing a program that represents the game positions as symbols and has the rules built in. Then you need a scoring system that tells the machine how good a position is. You might give 5 points for occupying the centre square, 10 points for two symbols in a row and so on. The scoring system is usually called an “evaluation function” but, no matter what you call it, all it does is give you a number that is related to the quality of the game position. To pick a good move, all the program does is try each possible move and rate the resulting position using the evaluation function – and naturally it picks the best one. It may look impressive, it may even be able to beat you, but is it intelligent?
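To make the evaluation-function idea concrete, here is a minimal Python sketch for noughts and crosses. The 5-point centre and 10-point two-in-a-row values come from the example above; the board representation (a list of nine cells), the function names `evaluate` and `best_move`, and the 100-point bonus for a completed line are hypothetical choices made for illustration, not taken from any particular program.

```python
# Hypothetical sketch: a noughts-and-crosses evaluation function plus
# "try every move and keep the best" move selection.
# Board: a list of 9 cells, each "X", "O" or " ", indexed 0..8 row by row.

LINES = [
    (0, 1, 2), (3, 4, 5), (6, 7, 8),  # rows
    (0, 3, 6), (1, 4, 7), (2, 5, 8),  # columns
    (0, 4, 8), (2, 4, 6),             # diagonals
]


def evaluate(board, player):
    """Score a position from `player`'s point of view (assumed weights)."""
    score = 0
    if board[4] == player:
        score += 5  # occupying the centre square
    for line in LINES:
        cells = [board[i] for i in line]
        mine = cells.count(player)
        empty = cells.count(" ")
        if mine == 3:
            score += 100  # assumed bonus for a completed line (a win)
        elif mine == 2 and empty == 1:
            score += 10  # two in a row with the third square still open
    return score


def legal_moves(board):
    """Indices of the empty squares."""
    return [i for i, cell in enumerate(board) if cell == " "]


def best_move(board, player):
    """Try each legal move, rate the result and return the highest-scoring one."""
    best, best_score = None, float("-inf")
    for move in legal_moves(board):
        trial = board.copy()
        trial[move] = player
        score = evaluate(trial, player)
        if score > best_score:  # ties go to the first move found
            best, best_score = move, score
    return best
```

On an empty board `best_move([" "] * 9, "X")` returns 4, the centre, simply because no other single move scores anything yet. Because it looks only at its own next move, this version ignores any threat the opponent is building.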

Of course, good game players consider not only their next move but also their opponent’s best response, and so on. The same trick can be built into a game-playing program: the same evaluation function can be used to rate the opponent’s responses, only in this case a high score for the opponent is bad news for the player! The opponent is clearly going to pick the response with the largest score, so what you want to do is pick the move whose largest opponent score is as small as possible. This may sound complicated, but if you think about it all you are doing is limiting the worst damage the opponent can do. This is called the MiniMax principle, and it is widely applied in AI situations where there is conflict between players, or “agents”. You can extend the look-ahead in such games as many moves as you like, and the surprising news is that as you look further and further ahead your performance tends to get better, even with a poor evaluation function. In other words, you don’t even have to know very much about the game to play well by looking ahead. A poor evaluation function coupled with a long look-ahead nearly always works well. This is all game-playing programs do, and given sufficient computer power they tend to win. In practice, game-playing programs also use additional techniques, such as a list of opening “book” moves, but look-ahead MiniMax search is the main reason they win!

So is a game-playing program an example of AI? Well, it has to be an example of “weak” AI, but there is no way you can imagine that it thinks, or even plays in the same way as a human. The results may be the same, but the methods are certainly very different. Human players even claim that they can tell the difference between a human player and a machine, even if this is sometimes put down to the machine having a strange personality. This leads us on to the idea of the Turing Test, which has played such a prominent part in AI.
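The look-ahead MiniMax search described above can be sketched in a few lines of Python. This is a hypothetical, self-contained example assuming the same nine-cell noughts-and-crosses board; the heuristic (my evaluation minus the opponent's), the ±1000 scores for a win or loss, and the names `minimax` and `choose_move` are illustrative choices rather than a standard implementation. Finished games are scored exactly, and the heuristic is used only when the look-ahead runs out.

```python
import math

# Hypothetical sketch of depth-limited MiniMax for noughts and crosses.
# Board: a list of 9 cells, each "X", "O" or " ", indexed 0..8 row by row.

LINES = [
    (0, 1, 2), (3, 4, 5), (6, 7, 8),
    (0, 3, 6), (1, 4, 7), (2, 5, 8),
    (0, 4, 8), (2, 4, 6),
]


def winner(board):
    """Return "X" or "O" if that player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


def evaluate(board, player):
    """Assumed heuristic: centre worth 5, each open two-in-a-row worth 10."""
    score = 5 if board[4] == player else 0
    for line in LINES:
        cells = [board[i] for i in line]
        if cells.count(player) == 2 and cells.count(" ") == 1:
            score += 10
    return score


def other(player):
    return "O" if player == "X" else "X"


def minimax(board, depth, to_move, me):
    """Score `board` for `me`, looking `depth` further moves ahead."""
    won = winner(board)
    if won == me:
        return 1000 + depth   # more depth left means a quicker win
    if won is not None:
        return -1000 - depth  # a loss found sooner scores even lower
    moves = [i for i, c in enumerate(board) if c == " "]
    if not moves:
        return 0              # full board with no winner: a draw
    if depth == 0:
        return evaluate(board, me) - evaluate(board, other(me))
    scores = []
    for m in moves:
        board[m] = to_move
        scores.append(minimax(board, depth - 1, other(to_move), me))
        board[m] = " "        # undo the trial move
    # On my turn take the largest score; on the opponent's turn assume
    # they pick the reply that is worst for me, i.e. the smallest score.
    return max(scores) if to_move == me else min(scores)


def choose_move(board, me, depth):
    """Pick the move whose worst-case (MiniMax) score is highest."""
    best, best_score = None, -math.inf
    for m in [i for i, c in enumerate(board) if c == " "]:
        board[m] = me
        score = minimax(board, depth - 1, other(me), me)
        board[m] = " "
        if score > best_score:
            best, best_score = m, score
    return best
```

With O holding squares 0 and 1 and X on the centre, `choose_move(board, "X", 2)` blocks at square 2, because every other move allows O a winning reply that the search scores as -1000. Raising `depth` extends the look-ahead at the cost of searching many more positions.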


Last Updated (Tuesday, 05 May 2015)