Artificial Intelligence - Strong and Weak

Written by Alex Armstrong

Monday, 04 May 2015


Turing And His Test

Alan Turing was a pioneer of AI and invented the test of the same name!

Alan Turing was an early pioneer of many aspects of computing and one of the earliest proponents of AI. He argued that the only real test of strong AI was to see whether anyone could tell the difference. This is a "black box" approach to the problem, which basically comes down to "it doesn't matter how it works - if it behaves like a human intelligence then it is a human intelligence". You can see how this test avoids the problem of understanding killing off the very appearance of intelligence. We don't ask to understand how the machine or the human works; we simply ask whether they are the same.

He suggested that a judge should communicate with something hidden behind a screen, either a human or the AI program, using whatever method the program used (typically typed text). If the judge could tell whether it was a human or a program behind the screen, then the program was not a thinking, conscious entity equivalent to a human. On the other hand, if the program could fool the judge into believing that there really was a human behind the screen, then by all practical definitions of human intelligence that is what the program possesses.

This is the Turing Test for strong AI, and it has caused a great deal of controversy. Philosophers have argued for years about whether or not it is valid. Essentially, one group argues that it takes more than equivalence of performance to make something intelligent and conscious. Most of these arguments come down to the supposed mind/brain split. In this model the brain isn’t just the machinery that creates human intelligence; it is also the residence of the mind, which is somehow different and separate. If you believe in this worldview then strong AI is doomed to failure. This is a big argument that is more about religion and belief than hard facts, and it is best avoided! Notice, however, that the Turing test is a weak AI approach to the problem of proving strong AI.
Turing proposed an operational definition of intelligence that is every bit as mechanical as a Watt governor. There is no attempt to bring any aspect of "how it works" into the picture, and this is, of course, why it is so simple. You can imagine a candidate passing the Turing test, then the curtain being removed and the participants learning how the machine did it. Presumably the illusion of intelligence is lost as soon as the understanding gets good enough.

Oh Eliza!

Originally the Turing Test was nothing more than a theoretical plaything, but in 1990 Hugh Loebner set up an annual contest offering a $3000 annual prize and a Grand Prize of $100,000 plus a Gold Medal for any program that could pass the Turing Test. The contest has been run every year and so far the results have been either poor or impressive, depending on your understanding of what is going on. The programs chat with humans; you can try some of them out at Chatbots or Pandorabots. As with all aspects of AI, what seems to be intelligent quickly loses its magic once you know how it works.

So far all of the test competitors have been based on the Eliza model. Eliza was a very early attempt at AI and all it did was scan the input text, pick out key words and respond with vaguely connected phrases. For example, if you type “I feel ill” it might well trigger on the word “ill” and respond “I’m sorry to hear that”. It also used simple rules for turning phrases around to create new ones. For example, you might type “I like cars” and it would transform this into “Why do you like cars?”

What is surprising about Eliza is that it seems to work! People are fairly convinced that there is an intelligence behind the program, even though the program makes no attempt to analyse or understand in any sense what is being said. In fact, once you know that this is the approach, it is very easy to create conversations that reveal how the program is working and how it lacks understanding.
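To make the keyword scanning and phrase turning described above concrete, here is a minimal Eliza-style sketch in Python. It is an illustration under stated assumptions, not Weizenbaum's original program: the keyword table, the single "I like ..." rule, the pronoun swaps and the default reply are all hypothetical.

```python
import re

# Hypothetical keyword table: if the keyword appears as a whole word in the
# input, reply with the canned phrase. The first match wins.
KEYWORD_REPLIES = [
    ("ill", "I'm sorry to hear that."),
    ("mother", "Tell me more about your family."),
]

# Hypothetical "turn the phrase around" rule: a regex that captures part of
# the input and a template that echoes the captured text back as a question.
REFLECTION_RULES = [
    (re.compile(r"^i like (.+?)[.!]?$", re.IGNORECASE), "Why do you like {0}?"),
]

# Swap first and second person so the echoed text reads naturally.
PRONOUN_SWAPS = {"i": "you", "me": "you", "my": "your", "you": "me", "your": "my"}

DEFAULT_REPLY = "Please go on."


def reflect(fragment: str) -> str:
    """Swap pronouns word by word, e.g. 'my car' -> 'your car'."""
    return " ".join(PRONOUN_SWAPS.get(word.lower(), word) for word in fragment.split())


def eliza_reply(text: str) -> str:
    text = text.strip()
    # 1. Try the phrase-turning rules first.
    for pattern, template in REFLECTION_RULES:
        match = pattern.match(text)
        if match:
            return template.format(reflect(match.group(1)))
    # 2. Otherwise scan the words for a keyword ("still" does not trigger "ill").
    words = re.findall(r"[a-z']+", text.lower())
    for keyword, reply in KEYWORD_REPLIES:
        if keyword in words:
            return reply
    # 3. Nothing matched: fall back to a content-free prompt.
    return DEFAULT_REPLY


for line in ["I feel ill", "I like cars", "I don't feel ill"]:
    print(line, "->", eliza_reply(line))
```

The first two inputs produce "I'm sorry to hear that." and "Why do you like cars?". The third also produces "I'm sorry to hear that.", because the program only sees the word "ill" and never notices the "don't". This is exactly the kind of conversation that exposes the lack of understanding.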
Many new Eliza programs try to cover their lack of understanding by being “quirky”. If you ask them a direct question such as “What is 2 times 2?” or “What colour is the sky?” they respond with something like “That’s boring, let’s talk about something else.” A human could respond in this way too, because humans are quirky, but if you press a human they will eventually tell you that the answer is four or that the sky is blue (sometimes). Once a comment like "chatbots can't do 2+2" appears in public, most chatbots quickly acquire the answer; it gets added to an ever-growing playbook. But if you try something new and equally direct, they go back to the ploy of being quirky.
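The "playbook plus quirky deflection" behaviour described above amounts to little more than a lookup table with a fallback. The following minimal Python sketch shows this. The PLAYBOOK contents, the normalisation step and the function names are hypothetical and are not taken from any real chatbot.

```python
# Hypothetical playbook: normalised question -> canned answer. Entries get
# added after a weakness has been pointed out in public.
PLAYBOOK = {
    "what is 2 times 2": "Four.",
    "what colour is the sky": "Blue (sometimes).",
}

# The "quirky" reply used for anything the playbook does not cover.
DEFLECTION = "That's boring, let's talk about something else."


def normalise(question: str) -> str:
    """Lower-case and trim trailing punctuation so near-identical wordings match."""
    return question.strip().lower().rstrip("?!. ")


def answer(question: str) -> str:
    # A known question gets its stored answer; anything else gets the deflection.
    return PLAYBOOK.get(normalise(question), DEFLECTION)


def add_to_playbook(question: str, reply: str) -> None:
    # Patch a publicised failure without adding any understanding.
    PLAYBOOK[normalise(question)] = reply


print(answer("What is 2 times 2?"))          # Four.
print(answer("What is 3 times 3?"))          # the deflection
add_to_playbook("What is 3 times 3?", "Nine.")
print(answer("what is 3 times 3"))           # Nine.
print(answer("What's three times three?"))   # the deflection again
```

Each patch covers only the exact wording that was added, so a slightly rephrased but equally direct question falls straight back to the deflection.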

What Eliza and the current attempts at passing the Turing test reveal, more clearly than anything else, is how willing humans are to share their intelligence with non-intelligent things. We all do it. When your car doesn’t start you attribute malicious intent to it; we talk to plants, animals and machines as if they understood, and it all seems very natural.

Symbols with meaning

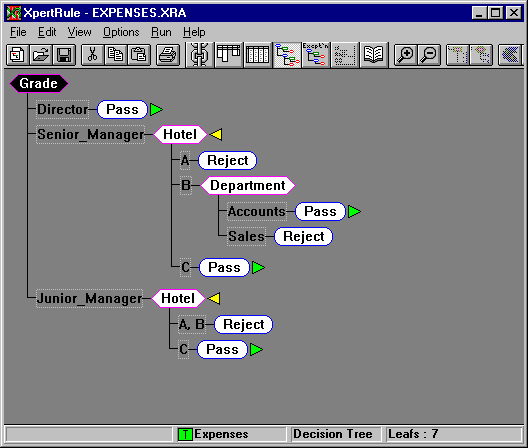

A diagram showing the rules for an expert system that accepts or rejects expense claims!

The shallowness of the Eliza type program, and of the whole current approach to the Turing Test, doesn’t mean that we can’t tackle the problem of meaning using symbols and rules. The first real practical success of AI was the expert system. Expert systems can diagnose illness, find oil, fix complex systems and so on. As with all AI products, at first they appear magical and when you find out how they work they seem trivial. Remember that this is not a criticism but a fact of AI methods!

Expert systems work by using a collection of rules of the form

IF something THEN something

The program gets some information and then searches through its database for rules that match. For example, a rule might be

IF red spots THEN measles

The program might ask “Have you any red spots?” and if the answer is “Yes” it would conclude that you had measles. I told you it was simple!

In practice the rules don’t always get you to the solution in one step, and one rule’s conclusion could very well trigger another and so on. One of the advantages of the expert system approach is that knowledge about a subject can be collected as small, simple rules, while the resulting rule base can still be used to deduce more complicated outcomes. You can also have rules that include a measure of how strongly the conclusion follows from the information. All in all, expert systems are actually very useful and another good example of weak AI.

However, there are people who claim that this method can be extended to produce strong AI: a thinking machine! The Cyc project, for example, aims to build a rule database so complete and all-encompassing that it will become intelligent, in the sense that it will be able to discuss topics and eventually reach the limits of human knowledge. Once it is at the limits of human knowledge it will push on and invent new knowledge. Of course Cyc is more than just an expert system. The types of rules that it uses have been extended to include logical expressions.
The program is also able to modify and add to the rule database, and every night it is left to “think over” the day’s input. In the morning researchers check out the new rules it has created to see if they are reasonable. This is a very ambitious project and many claim that the rule base will become hopelessly inconsistent long before it is complete. At the moment the project has served more to point out the difficulties of this approach than to demonstrate that it works.
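The expert system approach described above uses IF ... THEN rules, lets one rule's conclusion trigger another, and can attach a strength to each rule. The following Python sketch shows that kind of forward chaining under stated assumptions. It does not describe how any particular expert system or Cyc works. The Rule class, the forward_chain function, the toy rule base and the way strengths combine are all hypothetical. A conclusion gets the rule's strength times its weakest premise, and when several rules reach the same conclusion the strongest one wins. Other systems used different schemes; MYCIN, for instance, combined supporting evidence from several rules instead of keeping only the strongest.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """IF every premise holds THEN the conclusion holds, with the given strength."""
    premises: tuple[str, ...]
    conclusion: str
    strength: float = 1.0  # how strongly the conclusion follows, in (0, 1]


def forward_chain(facts: dict[str, float], rules: list[Rule]) -> dict[str, float]:
    """Apply rules repeatedly until no fact is added or strengthened.

    `facts` maps each known fact to a certainty in (0, 1]. A rule whose premises
    are all known concludes with certainty strength * min(premise certainties);
    if several rules reach the same conclusion, the strongest one wins.
    """
    known = dict(facts)  # copy so the caller's facts are left untouched
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if not all(p in known for p in rule.premises):
                continue  # this rule cannot fire yet
            cf = rule.strength * min(known[p] for p in rule.premises)
            if cf > known.get(rule.conclusion, 0.0):
                known[rule.conclusion] = cf
                changed = True  # a new or stronger fact may enable other rules
    return known


# Hypothetical toy rule base: the first rule is the article's measles example;
# the last shows one rule's conclusion triggering another.
RULES = [
    Rule(("red spots",), "measles", 0.6),
    Rule(("red spots", "fever"), "measles", 0.9),
    Rule(("measles",), "keep away from school", 1.0),
]

print(forward_chain({"red spots": 1.0}, RULES))
print(forward_chain({"red spots": 1.0, "fever": 1.0}, RULES))
```

With only red spots, measles is concluded at 0.6 and that conclusion in turn fires the "keep away from school" rule. Adding a fever lets the stronger rule raise both to 0.9. Each rule stays small and simple, yet the chain of rules deduces a further outcome.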


Last Updated (Tuesday, 05 May 2015)