| Hashing - The Greatest Idea In Programming |

| Written by Mike James | ||||||

| Thursday, 03 September 2020 | ||||||

Page 1 of 3 Although it is a matter of opinion, you can't help but admire the idea of the hash function. It not only solves one of the basic problems of computing - finding something that you have stored somewhere - but it helps with detecting file tampering, password security and more.

Killer Algorithms

Contents

* First Draft

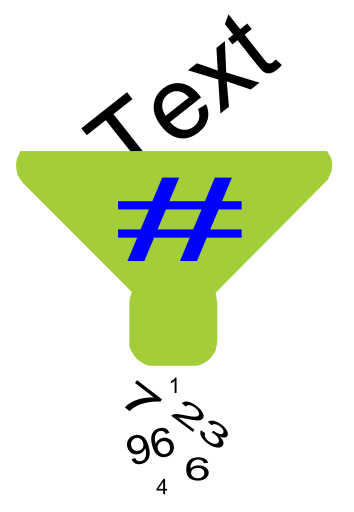

One of the basic problems of computing is finding something that you have stored somewhere. For example, if you are keeping a table of names and telephone numbers how can you organise the list so that you can find the phone number given the name? The most obvious solution is to keep the list sorted into alphabetical order and use some sort of search, usually binary, to locate the name. This is efficient but only as long as the list is kept in strict order. If you only have to add names and number infrequently then you could use an overflow table that isn't sorted into order. Then to find a name you would use a binary search on the sorted table and if you didn't find the name there a linear search on the overflow table would either find the name or confirm that the name wasn't known. Using an overflow table in this way works as long as the overflow table is keep short. If you are adding a lot of names then the time to perform the linear search in the overflow table will quickly become too much. In this case it is time to think of hashing. Hashing is one of the great ideas of computing and every programmer should know something about it. The basic idea is remarkably simple, in fact it is so simple you can spend time worrying about where the magic comes from. Suppose you have a function,

that will compute a number in the range 1 to N depending in some way on the value of name then why not store the name, and phone number at hash(name) in the table. Notice that the hash function is a bit strange. It has to be completely deterministic in the sense that you give it a name and it always gives you the same number. However it also has to provide you with a different number for each name and as we will see this isn't always possible. Using this scheme finding a name in the table is just a matter of computing hash(name) and looking at this location in the table to see if name is stored there! Yes, that's all there is to it. Well there are a few practical details but using hash(name) to give the location where name is stored is the key idea. An ExampleTo make sure that there can be no confusion about how simple hashing really is let's look at a small example. If the hashing function is the sum of the character codes of the first two letters of the name minus 128 i.e.

where ASC returns the character code, then

would be stored at

or, in plain English, at location 11 in the table. If you want to know if you have a phone number for JAMES you simply compute hash("JAMES") and look in location 11 to see if it's there. No sorting and no searching required. When you compute the hash function you know where to store the data and you know where to find the data. The key idea is that a hash function takes in text or any sort of data and outputs a set of numbers based on that data.

This, or something similar, is the way most computer languages implement advanced data structures such as dictionaries are implemented using hashing. For example in Python a dictionary object is implemented using a hash table. So even if you don't explicitly build a hash table you could well be using one. |

||||||

| Last Updated ( Thursday, 03 September 2020 ) |