Introduction To Kinect

Written by Harry Fairhead


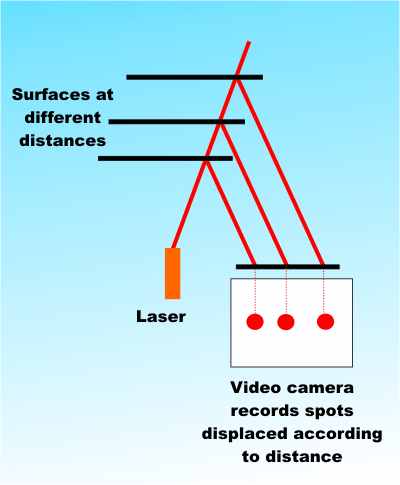

The depth map

So how does the Kinect create a depth map? It has been suggested that the Kinect works as a sort of laser radar, or LIDAR: the laser fires a pulse and then times how long the pulse takes to reflect off a surface and return. This is most definitely not how the system works. The accuracy and speed needed to implement so-called "time of flight" distance measurement are too much for a low-cost device like the Kinect. Instead, the Kinect uses a much simpler method, called "structured light", that is equally effective over the distances we are concerned with. The idea is simple. If a light source is offset from a detector by a small distance, then a projected spot of light appears shifted by an amount that depends on the distance of the surface it is reflected from.

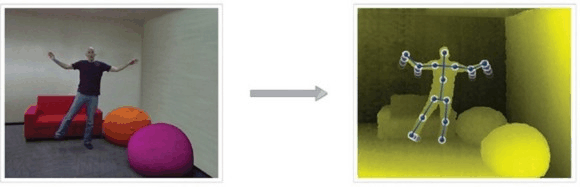
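
The geometry behind structured light is ordinary triangulation. Here is a minimal Python sketch of the idealized relationship. It assumes a projector and camera that are parallel and separated by a baseline B, and a camera with focal length F in pixels. Under those assumptions, a dot that appears shifted by d pixels lies at distance Z = F·B/d. The constants `FOCAL_LENGTH_PX` and `BASELINE_M` are hypothetical round numbers of the order usually quoted for the original Kinect, not values from this article. The real device's calibration is more involved than this bare formula.

```python
"""Idealized structured-light triangulation (pinhole model).

Assumptions (not taken from the article): projector and camera are
parallel and separated by a baseline B, the camera has focal length F in
pixels, and "disparity" is how far a dot appears shifted, in pixels,
relative to where it would appear if the surface were infinitely far away.
"""

# Hypothetical calibration values, roughly in the range often quoted for
# the original Kinect; a real device has to be calibrated.
FOCAL_LENGTH_PX = 580.0   # camera focal length in pixels (assumed)
BASELINE_M = 0.075        # projector-to-camera offset in metres (assumed)


def depth_from_disparity(disparity_px: float,
                         focal_px: float = FOCAL_LENGTH_PX,
                         baseline_m: float = BASELINE_M) -> float:
    """Return the distance (metres) of a dot that appears shifted by disparity_px."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means infinitely far)")
    # Similar triangles: Z / B = F / d, so Z = F * B / d
    return focal_px * baseline_m / disparity_px


def disparity_from_depth(depth_m: float,
                         focal_px: float = FOCAL_LENGTH_PX,
                         baseline_m: float = BASELINE_M) -> float:
    """Inverse mapping: the pixel shift expected for a surface at depth_m metres."""
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    return focal_px * baseline_m / depth_m


if __name__ == "__main__":
    for z in (0.8, 1.5, 3.0, 4.0):
        d = disparity_from_depth(z)
        # dZ/dd = -F*B/d**2 = -Z**2/(F*B): depth change per pixel of shift
        dz = z * z / (FOCAL_LENGTH_PX * BASELINE_M)
        print(f"{z:.1f} m -> shift {d:5.1f} px, ~{dz * 100:.1f} cm per pixel of shift")
```

Because Z is inversely proportional to d, a one-pixel error in the measured shift costs roughly Z²/(F·B) in depth. Depth resolution therefore gets worse quadratically with distance, which helps explain why this kind of sensor is suited to room-scale distances.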

So by projecting a fixed grid of dots onto a scene and measuring how much each one has shifted when viewed with a video camera, you can work out how far away each dot was reflected from. The actual details are more complicated than this, because the IR laser in the Kinect uses a hologram to project a random speckle pattern onto the scene. The Kinect then measures the offset of each point to generate an 11-bit depth map. A custom chip does the computation involved in converting the dot map into a depth map, so that the data can be processed at the standard frame rate. The exact details can be found in patent US 2010/0118123 A1, registered by PrimeSense. Once you know the trick it is tempting to think that it is easy, but accurate measurement needs precise optics, and the engineering of the Kinect goes to great lengths to keep the whole thing cool. There is even a Peltier cooling device on the back of the IR projector to stop heat from expanding the holographic interference filter.

Why depth?

At this point you might be wondering why a depth map is so important. The answer is that many visual recognition tasks are much easier with depth information. If you try to process a flat 2D image, then pixels with similar colors that are near to each other might not belong to the same object. If you have 3D information, then pixels that correspond to locations physically near to each other tend to belong to the same object, irrespective of their color! It has often been said that pattern recognition has been made artificially difficult because most systems rely on 2D data. There is also the fact that a depth image is much more redundant, in the information theory sense, than a color image. What this means is that if you look at two adjacent pixels in a depth image, they are very likely to have similar depth values.
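
The idea that physically adjacent pixels tend to belong to the same object translates directly into a very simple segmentation algorithm. The Python sketch below uses basic region growing (a flood fill): neighboring pixels join the same region whenever their depths differ by no more than a tolerance, and color is ignored entirely. It is an illustration only, not how the Kinect or its SDK does it. The function name `segment_by_depth`, the `tolerance` parameter, millimetre units and the convention that 0 means "no reading" are all assumptions.

```python
from collections import deque
from typing import List

Grid = List[List[int]]


def segment_by_depth(depth: Grid, tolerance: int) -> Grid:
    """Label 4-connected regions of a depth image (hypothetical helper).

    Two neighboring pixels join the same region when their depths differ
    by at most `tolerance`. A depth of 0 is treated as "no reading" (an
    assumption) and gets label 0; regions are numbered 1, 2, 3, ... in
    scan order.
    """
    rows = len(depth)
    cols = len(depth[0]) if rows else 0
    labels = [[0] * cols for _ in range(rows)]
    next_label = 0

    for r0 in range(rows):
        for c0 in range(cols):
            # Skip pixels already labeled or with no depth reading
            if labels[r0][c0] or depth[r0][c0] == 0:
                continue
            next_label += 1
            labels[r0][c0] = next_label
            queue = deque([(r0, c0)])
            # Breadth-first flood fill: grow the region while depth is continuous
            while queue:
                r, c = queue.popleft()
                for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                    if (0 <= nr < rows and 0 <= nc < cols
                            and labels[nr][nc] == 0
                            and depth[nr][nc] != 0
                            and abs(depth[nr][nc] - depth[r][c]) <= tolerance):
                        labels[nr][nc] = next_label
                        queue.append((nr, nc))
    return labels


if __name__ == "__main__":
    # Hypothetical 4x5 scene in millimetres: a wall at 3 m with a nearer
    # object at about 1.5 m in front of it, and one pixel with no reading.
    scene = [
        [3000, 3000, 3000, 3000, 3000],
        [3000, 1500, 1510, 3000, 3000],
        [3000, 1505, 1520, 3000, 3000],
        [3000, 3000, 3000, 3000,    0],
    ]
    for row in segment_by_depth(scene, tolerance=50):
        print(row)
```

In the example scene the wall comes out as region 1 and the nearer object as region 2, whatever colors they might have. Because the tolerance is applied step by step, a gradually receding surface such as a floor stays a single region. A practical version would probably scale the tolerance with distance, since depth readings get noisier farther from the sensor.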
The only places where depth changes rapidly are at the edges of objects, and edges are relatively uncommon compared to the total area of the image. In a color image, however, adjacent pixels often have very different colors even when they are part of the same object. This gives rise to false edges and all sorts of artifacts when you try to process the image. Another consequence of this difference is that a depth image can be compressed to much higher compression ratios than a color image, which can be useful if you plan to send a depth image to a remote location.

The conclusion is that once you have a depth field, many seemingly difficult tasks become much easier. You can use fairly simple algorithms to recognize objects. You can even use the data to reconstruct 3D models, or use it to guide robots with collision avoidance and so on. There is no doubt that the depth map is essential to the Kinect's working, and it will be some time before the same tricks are possible using standard video cameras.

The software

The depth sensing hardware is the first feature that makes the Kinect special, but without software it is just so much clever optics and processing. When Microsoft first released the Kinect it was very much for Xbox and Microsoft applications only. It didn't take long for the USB connection to be decoded, and open source USB drivers appeared on the web. With these you could connect the Kinect to a Linux or Windows machine and access the raw data. Only a few weeks later, developing for the Kinect became easier thanks to an open source initiative set up by PrimeSense, the original designers of the Kinect hardware. All of the necessary APIs are available as a project called OpenNI, with drivers for Windows and Ubuntu. The only problem is that the drivers they supply are for their own reference hardware.
However, changing the driver is a matter of modifying configuration files. This has been done for the Kinect, as explained in the article Getting Started with PC Kinect. This book, however, is about using the Microsoft SDK, and so we move on...
