| How AI Discriminates |

| Written by Nikos Vaggalis |

| Wednesday, 24 July 2019 |

|

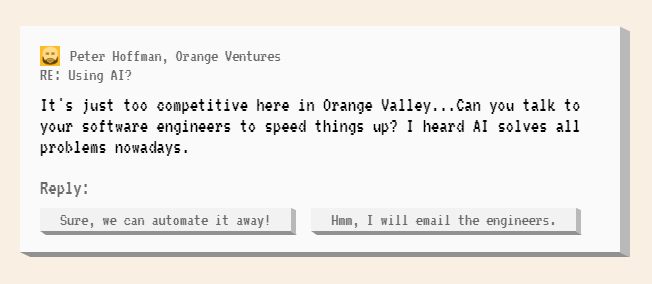

“Survival of the Best Fit” is an educational game developed by New York University that demonstrates in practice how Machine Learning algorithms can make biased decisions. The game casts you as the CEO of a newly funded company that is now recruiting personnel. In the beginning you screen the submitted CVs on your own. Your screening is based on the criteria of Skill, School Privilege, Work Experience and Ambition, and since you do it yourself you judge applicants according to your own experiences and values. In my case I was hiring people with 14% more work experience than the average applicant. So far so good, but the investors don't want to lose time, since time is money, so they pressure you to speed up the CV screening process. You try to keep up, but the time constraints are so tight and the candidates so many that you can no longer cope. Thus, IT suggests jumping on the Machine Learning hype train and letting an algorithm do the screening on your behalf.

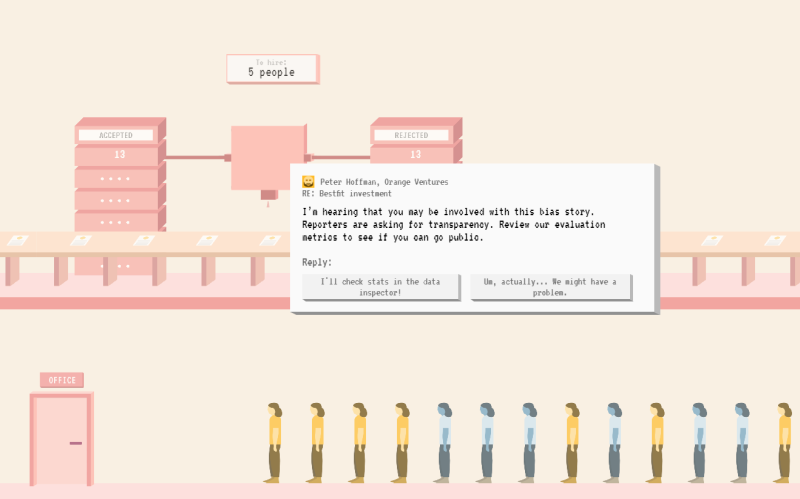

Of course, ML algorithms need data sets to be trained on, so you feed the algorithm the CVs of the candidates you accepted so that it can identify the way you think and the values you take into account when hiring. It turns out that this dataset is too small for the algorithm to work, so you supplement it with a commodity dataset used by a larger company such as Google, because Google can't go wrong, right? Things pick up pace significantly, until you get hit with complaints that some very qualified candidates have been rejected by the algorithm. Now you are tasked with answering "why", which turns out not to be easy.
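To make the training step concrete, here is a minimal sketch in Python of how a screening model fitted on past hiring decisions absorbs whatever pattern those decisions contain. Everything in it is an assumption made for illustration and not the game's actual code: the data is synthetic, the two features (`skill` and a `favored` town flag) and the cut-offs of 0.4 and 0.7 are invented, and plain logistic regression stands in for whatever algorithm a real screening tool would use.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def make_history():
    """Synthetic past decisions (assumed): applicants from the favored
    town were hired at a lower skill level than everyone else."""
    rows = []
    for i in range(21):
        skill = i / 20                      # skill on a 0..1 scale
        for favored in (0, 1):              # 1 = lives in the favored town
            threshold = 0.4 if favored else 0.7   # hypothetical biased cut-offs
            rows.append((skill, favored, 1 if skill >= threshold else 0))
    return rows

def train(rows, epochs=2000, lr=1.0):
    """Logistic regression fitted by full-batch gradient descent."""
    w_skill = w_town = bias = 0.0
    n = len(rows)
    for _ in range(epochs):
        g_skill = g_town = g_bias = 0.0
        for skill, favored, hired in rows:
            err = sigmoid(w_skill * skill + w_town * favored + bias) - hired
            g_skill += err * skill
            g_town += err * favored
            g_bias += err
        w_skill -= lr * g_skill / n
        w_town -= lr * g_town / n
        bias -= lr * g_bias / n
    return w_skill, w_town, bias

def score(model, skill, favored):
    """Predicted probability that the model recommends hiring."""
    w_skill, w_town, bias = model
    return sigmoid(w_skill * skill + w_town * favored + bias)

model = train(make_history())

# Two applicants with identical skill who differ only in where they live.
same_skill = 0.55
print("favored town:", round(score(model, same_skill, 1), 2))
print("other town:  ", round(score(model, same_skill, 0), 2))
```

Nothing in the training loop mentions fairness or towns explicitly. The model simply learns a positive weight for the town flag because that is the cheapest way to reproduce the historical decisions, so two equally skilled applicants end up with different scores.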

The complaints continue until the outcry is so big that you get sued for discriminating against Blueville residents in favor of Orangeville residents. The lawsuit scares off the investors, who retract their funding, and finally you get shut down.

So what is the moral of the story? That algorithms are only as good as the data they are fed, and the more biased the data, the more biased the algorithm. Bias can be demographic, as in this case, where Google's fictional dataset included more applicants from Orangeville. This led the algorithm to infer that Orangevillers are more valuable than Bluevillers, hence the bias. Other attributes through which bias can creep in are gender, ethnicity, age and even your name, because it can hint at the other three!

The game ends with an explanation of the issue:

"When building automated decision-making systems, we should be asking: what metrics are these decisions based on, and who can we hold accountable? With machine learning, that becomes tricky, because after the program is trained on massive amounts of historical data, finding out why a certain decision was made is no longer straightforward. It's what is referred to as the Black Box problem."

and advises:

"There are many angles to approaching fair tech, and they include greater public awareness and advocacy, holding tech companies accountable, engaging more diverse voices, and developing more socially conscious CS education curricula."

I would also add the perspective of using AI itself to detect discrimination in AI systems. Recent research from Pennsylvania State University states:

"Detecting such discrimination resulting from decisions, whether by human decision makers or automated AI systems, can be extremely challenging. This challenge is further exacerbated by the wide adoption of AI systems to automate decisions in many domains, including policing, consumer finance, higher education and business."

The team created an AI tool for detecting discrimination with respect to a protected attribute, such as race or gender, by human decision makers or AI systems. The tool is based on the concept of causality, in which one thing (a cause) brings about another (an effect).

In summary, gamification offers an easy way of assimilating concepts through play, as well as raising awareness. “Survival of the Best Fit” can play an important role in widening recognition of Ethics and AI:

"This is why we want issues in tech ethics to be accessible to those who may have not taken a computer science class before, but still have a lot to add to the conversation." |

| Last Updated ( Wednesday, 24 July 2019 ) |