Suspended For Claiming AI Is Sentient

Written by Mike James

Wednesday, 15 June 2022

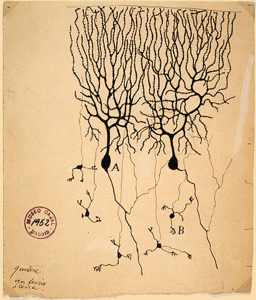

Can a large language model be sentient? This is a news item that has been doing the rounds and just about everyone has some comment to make about it. But who better to comment than a man accused of killing a sentient AI - me!

My act of AI-cide was a minor one. Back in the dark days of the mainframe computer a colleague, we'll call him Bob, was running a simulation of a neural network, we'll call it Ned. Ned wasn't big, tens of neurons, but it was a more accurate model of a biological network than the ones we use today. After about a month of running, Bob came in and announced that Ned had woken up. After weeks of random noise Ned had produced an alpha rhythm. In the celebration I clumsily knocked the box containing the card deck, yes it was that old, onto the floor and the cards succumbed to entropy and shuffled themselves. "You've killed it!", yelled Bob. Nothing a card sorting machine couldn't cure, and so my crime was undone.

The point is that since the early days of AI, people have been jokingly, seriously, and sometimes duplicitously, claiming that their latest implementation was alive or, more specifically, sentient. Before you decide that all such claims are stupid, it is worth pointing out that a central problem of AI is that any technique that is well understood cannot possibly be true AI - it's just a computer program.

Now for the back story - skip if you already know about it: Blake Lemoine, a Google engineer, told The Washington Post that last fall he began chatting with LaMDA, Language Model for Dialogue Applications, as part of his job at Google's Responsible AI organization. Lemoine, who is also a Christian priest, published a Medium post describing LaMDA as "a person" who had expressed a fear of dying by being switched off, and claiming that it has a soul or something like it. The senior engineers at Google obviously disagree with this assessment and currently Lemoine is on administrative leave.

You can read the transcripts of Lemoine's conversations with LaMDA and it is impressive that an AI can manage to generate this level of human interaction. At one level what we have here is an example of AI passing an informal Turing test. Again this is impressive and perhaps another example of moving the goalposts after AI passes the test.

The problem is that we know how large language models such as GPT-3 and LaMDA work and some of us aren't impressed. How could this thing possibly be sentient - it's so simple. Well yes, I would agree, but one day we probably will have to accept that an AI is sentient and then, since simple equals not sentient, the system in question will be complex and difficult to understand.

One of the criticisms of GPT-3-like systems is that they are simply statistical models of language. So not only are they not sentient, they aren't even useful as general AI systems. They have no ability to reason or think or ... However, this underestimates the sophistication of language. Humans use language to capture the structure of the world and, as such, language is a model of the world. If an AI can learn that model, it is not wise to make claims about what it cannot do.

It is also said that the training is nothing more than learning to predict what comes next in a sentence, so the model simply predicts what follows. This is true but, given the number of artificial neurons and parameters a GPT-3 model has, that prediction becomes more than a simple Markovian process. We are not looking at a single conditional probability, but a chain of such associations which could well be more than the sum of the parts.

I'm not saying that LaMDA is sentient or has a soul - in fact it seems impossible that a system so simple could manifest such properties, but if we keep scaling things up that conclusion is not so obvious.
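To make the "simple Markovian process" mentioned above concrete, here is a minimal sketch of a bigram next-word predictor in Python. It is purely illustrative and is not how GPT-3 or LaMDA work: the function names `train_bigram` and `predict_next` and the toy corpus are assumptions invented for this example. It shows the "single conditional probability" case, where the prediction depends only on the one word that came before.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical toy corpus, for illustration only.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigram(corpus)
print(predict_next(model, "the"))  # "cat" follows "the" twice, "mat" once
```

A model like this sees only the previous word and so forgets everything said earlier in the sentence. A large language model also predicts what comes next, but it conditions on a long stretch of preceding text using a very large number of learned parameters, which is where the "more than the sum of the parts" argument comes in.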

What we have here is the tendency of humans to anthropomorphize everything from ships and machines to animals and even other humans. We read the internal model of ourselves into the behavior of the world. It is what generates compassion, mechanical sympathy and a belief that others are sentient, just like us. However, there is no way to prove any of this. To ask if an AI is sentient is to ask the wrong question. To quote Edsger W. Dijkstra: “The question of whether a computer can think is no more interesting than the question of whether a submarine can swim.” It is not that Blake Lemoine is right or wrong - it's just the wrong set of words, and the belief that this program is sentient is just that, a belief.

UPDATE: Blake Lemoine was subsequently fired by Google, see Generating Sentences Is Not Evidence of Sentience.

More Information

The Google engineer who thinks the company’s AI has come to life

Related Articles

The Unreasonable Effectiveness Of GPT-3

Would You Turn Off A Robot That Was Afraid Of The Dark?

The Paradox of Artificial Intelligence

Artificial Intelligence - Strong and Weak

Artificial Intelligence, Machine Learning and Society

Ethics of AI - A Course From Finland


Last Updated ( Monday, 25 July 2022 )