Trouble At The Heart Of AI?

Written by Mike James

Wednesday, 29 September 2021

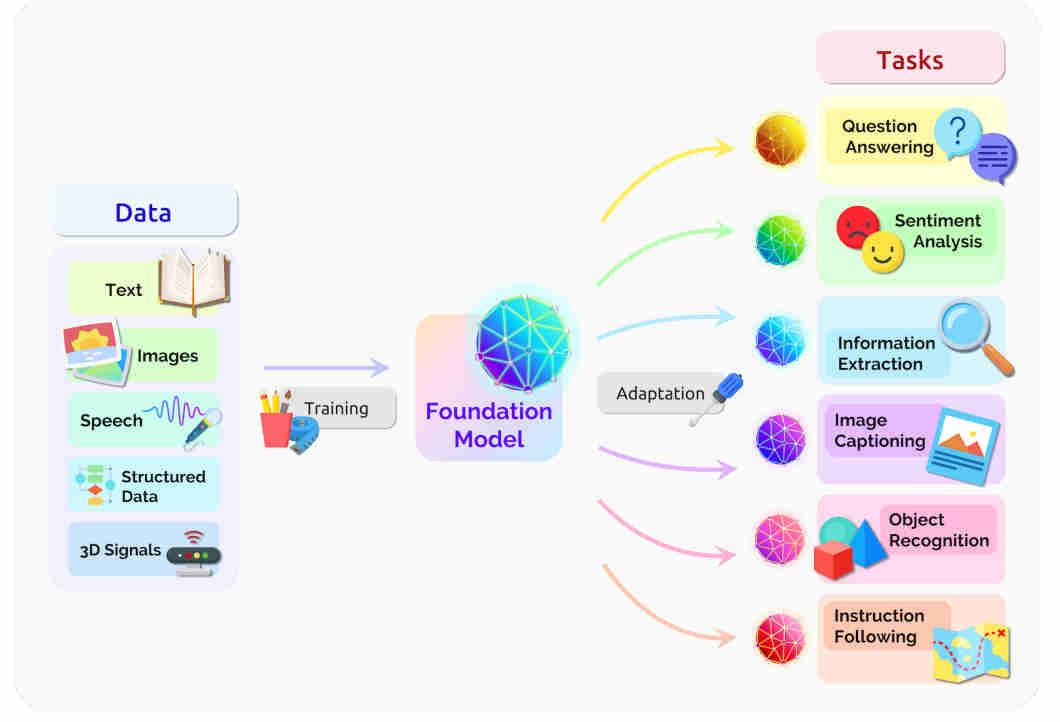

A recent paper by dozens of Stanford researchers calls AI models similar to the massive GPT-3 "foundation models", sparking a polarized response from other AI researchers and onlookers alike.

The discovery of the transformer approach to building neural networks that model language is probably the second big breakthrough in AI in recent years. The first was the deep network customized for vision by way of a convolutional structure, which suddenly made computer vision seem possible. The second is the ability to train really big networks using the huge quantity of language data available online. The beauty of the system is that you don't need labeled data or supervised learning. All you have to do is delete words from the text and train the network to predict the missing words. Training is this simple and there is no shortage of text that can be used.

What is surprising is how well these big models work. They can generate lots of fairly relevant text, to the point where they can be used to summarize novels, answer questions, write programs and generally do things that you might not think possible from something so simple. In fact, the important point is the discovery that such models seem to do more than they reasonably should. In trying to model language, the models seem to have captured something more. But how much more? This is what the new paper is about, and it goes so far as to label them "foundation models":

"The significance of foundation models can be summarized with two words: emergence and homogenization. Emergence means that the behavior of a system is implicitly induced rather than explicitly constructed; it is both the source of scientific excitement and anxiety about unanticipated consequences. Homogenization indicates the consolidation of methodologies for building machine learning systems across a wide range of applications; it provides strong leverage towards many tasks but also creates single points of failure."

Put more simply, such models do more than expected and they offer a generalizable ability which can be used, with some tuning, to implement a range of different applications.
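
The label-free training setup described earlier, where words are deleted from text and the network has to predict them, can be illustrated with a small sketch. This is only the data-preparation step, not a model, and the function name `make_masked_examples`, the `[MASK]` placeholder and the masking probability are illustrative assumptions rather than anything taken from the paper. Note also that this is the masked-word variant; GPT-style models are trained to predict the next word instead, but the principle of getting the "labels" for free from the text is the same.

```python
import random

MASK = "[MASK]"  # hypothetical placeholder token, chosen for illustration


def make_masked_examples(text, mask_prob=0.15, seed=0):
    """Turn raw text into a (masked_tokens, targets) pair without human labels.

    targets maps each masked position to the word that was deleted there,
    which is what a model would be trained to predict.
    """
    rng = random.Random(seed)      # seeded so the example is repeatable
    tokens = text.split()          # naive whitespace tokenization (an assumption)
    masked = list(tokens)
    targets = {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            masked[i] = MASK       # hide the word from the model
            targets[i] = tok       # keep the original word as the training target
    return masked, targets


masked, targets = make_masked_examples(
    "the cat sat on the mat because the cat was tired", mask_prob=0.3, seed=1
)
print(" ".join(masked))
print(targets)
```

The point of the sketch is that the text supplies its own supervision: any document found online can be turned into training examples this way, which is why there is no shortage of data.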

All seems reasonable, but the idea that there is something foundational about such models seems to be upsetting a lot of people. For example, Jitendra Malik, a professor at UC Berkeley and a well-known AI expert, said to a workshop on the topic:

"These models are really castles in the air; they have no foundation whatsoever. The language we have in these models is not grounded, there is this fakeness, there is no real understanding."

The problem seems to be philosophical as much as anything and it harks back to philosopher John Searle's Chinese room argument - which boils down to the idea that a lookup table cannot be intelligent. The technique is also being compared to a dumb chatbot that simply looks for key phrases and responds with reasonably appropriate sentences. It is argued, rightly in the case of the chatbot, that this is not an approach that is intelligent in any sense.

Can anything that approaches intelligence result from some simple statistical analysis and representation of language patterns? The case for saying no isn't proven, even though so many speak out for it. The idea that language is the world is one that has fallen out of favor since Wittgenstein proposed it. The point is that language is a model of the world constructed by humans and the foundation models simply learn this structure.

Viewing neural networks as learning statistical distributions is correct, but it doesn't give much insight into the way deep networks extract hierarchical features, which often makes them better at generalization than we have any right to expect. The unreasonable effectiveness of foundation models is just the same unreasonable effectiveness we find in deep neural networks. It isn't obvious that the foundation model approach cannot produce a general AI. It feels uncomfortable because it appears that simply learning the regularities of language isn't enough. It just isn't thinking.

This is why they are considered "castles in the air" and "without foundation", but this is a matter of perception rather than fact. If you understand a model then it can't be intelligent, but who says we understand foundation models in any real sense other than a very vague characterization as a statistical model?

What is slightly worse is that many are interpreting Stanford's move as a political one to gain prestige and a front seat in the development of the models. Stanford proposes a National Research Cloud to make big hardware available for academics to use in training big models:

"It is for this reason that we are calling for the creation of a U.S. Government-led task force from academia, government, and industry to establish a National Research Cloud. Support from Congress and the President could have a meaningful impact on American innovation through the creation of such a task force. Indeed, we believe that this could be one of the most strategic research investments the federal government has ever made."

Whatever the reasons, political or academic, the idea is a good one and we need to find out more about deep networks trained on huge amounts of data. Until someone proves that this is not a way to general AI, it remains a possibility that it is.

More Information

- On the Opportunities and Risks of Foundation Models (with far too many authors to list)
- National Research Cloud: Ensuring the Continuation of American Innovation
- Jitendra Malik's take on "Foundation Models" at Stanford's Workshop on Foundation Models

Related Articles

- The Unreasonable Effectiveness Of GPT-3
- Cohere - Pioneering Natural Language Understanding
- The Paradox of Artificial Intelligence
- Artificial Intelligence - Strong and Weak


Last Updated ( Wednesday, 15 June 2022 )