PhotoGuard - Protecting Images From Misuse By Generative AI

Written by Sue Gee

Sunday, 30 July 2023

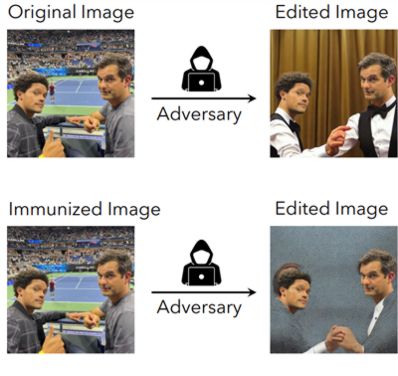

Researchers at MIT have come up with a technique to prevent images being altered by AI-powered photo editing. Manipulating an image protected by PhotoGuard produces a result that is immediately revealed to be fake.

While there's a lot of fun to be had with tools such as DALL-E and Stable Diffusion, which let you manipulate images simply by providing a text prompt or asking for one image to be changed into another, there is also the obvious danger of any photos posted online being used maliciously. Hadi Salman, a PhD researcher at MIT who contributed to the PhotoGuard app and was lead author of the paper presented at this month's International Conference on Machine Learning, outlines the potential threat: "anyone can take our image, modify it however they want, put us in very bad-looking situations, and blackmail us".

To combat such malicious use of images the MIT researchers have developed a tool to "immunize" images against manipulation via AI-powered image editing. Called PhotoGuard, it adds imperceptible perturbations to images to prevent the AI model from performing realistic edits.

In response to the prompt "Two men ballroom dancing", using the original image below, the Stable Diffusion model on Hugging Face would produce the top-right image. While the immunized image appears to be exactly the same as the original, the added perturbations cause the AI to blur the edited image, producing the result in the bottom right.

The MIT team used two alternative techniques. In the first, called an encoder attack, PhotoGuard adds imperceptible signals to the image so that the AI model interprets it as something else, for example, causing the AI to treat the subject of an image as a block of pure gray. The second, more effective technique, called a diffusion attack, disrupts the way the AI models generate images, essentially by encoding them with secret signals that alter how they’re processed by the model. Again the result is obviously not what was intended.

Almost a decade ago, when neural networks were just starting to make progress in recognizing objects, adversarial perturbations were thought to be a big problem, see The Flaw Lurking In Every Deep Neural Net. If the network correctly recognized an object in a photograph then, by adjusting the pixels by an imperceptible amount, the network could be made to classify it as something else. This led to the often ludicrous situation of a neural network claiming that a picture of a cat was, say, a banana, while the human looking on could see only a cat. This was the flaw in every neural network and it is still present in today's generative networks. Only, instead of making fun of misclassification, we now seem to have found a use for it.

Is PhotoGuard a lasting fix to the problem? Almost certainly not. As the team point out in a blog post, by re-training a diffusion model malicious perpetrators could get around the app's adaptations. There is also a lot of earlier work on defeating adversarial perturbations. What they hope will happen is that companies like Stability.ai and OpenAI, which provide the models used by generative AI, can provide an API to safeguard users' images against editing.
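To make the encoder attack described above more concrete, here is a minimal sketch in Python. It is not the PhotoGuard code: the "encoder" is a small random linear map standing in for a real image encoder, and the names `encoder_attack`, `latent_distance`, `eps`, `step` and `iters` are all hypothetical choices made for this illustration. It assumes the attack can be framed as projected gradient descent, nudging each pixel by at most `eps` so that the encoded image moves toward the encoding of a pure gray image.

```python
import numpy as np


def encoder_attack(image, encoder, target_latent, eps=8 / 255, step=1 / 255, iters=200):
    """Projected gradient descent against a toy linear encoder (illustrative only).

    Searches for a perturbation delta with max |delta| <= eps that pulls
    encoder @ (image + delta) toward target_latent.
    """
    delta = np.zeros_like(image)
    for _ in range(iters):
        residual = encoder @ (image + delta) - target_latent
        grad = 2.0 * encoder.T @ residual            # gradient of ||residual||^2
        delta = delta - step * np.sign(grad)         # signed gradient step
        delta = np.clip(delta, -eps, eps)            # stay within the pixel budget
        delta = np.clip(image + delta, 0.0, 1.0) - image  # keep pixel values valid
    return np.clip(image + delta, 0.0, 1.0)


def latent_distance(image, encoder, target_latent):
    """Euclidean distance between the encoded image and the target latent."""
    return float(np.linalg.norm(encoder @ image - target_latent))


rng = np.random.default_rng(0)
image = rng.uniform(0.0, 1.0, size=64)               # flattened 8x8 stand-in "photo"
encoder = rng.normal(size=(16, 64)) / 8.0            # hypothetical stand-in encoder
gray_latent = encoder @ np.full(64, 0.5)             # encoding of a pure gray image

immunized = encoder_attack(image, encoder, gray_latent)
print("largest pixel change:", np.max(np.abs(immunized - image)))
print("distance to gray latent before:", latent_distance(image, encoder, gray_latent))
print("distance to gray latent after: ", latent_distance(immunized, encoder, gray_latent))
```

With a real generative model the gradient would come from automatic differentiation through the model's own image encoder rather than from a closed-form expression, but the loop would have the same shape: take a step, then project back into the small perturbation budget so the change stays imperceptible.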
More Information

- Raising the Cost of Malicious AI-Powered Image Editing
- Interactive Demo on Hugging Face

Related Articles

- Neural Networks Have A Universal Flaw
- DALL-E Images API In Public Beta
- Photo Upscaling With Diffusion Models
- Stable Diffusion Animation SDK For Python


Last Updated (Sunday, 30 July 2023)

There's an online interactive demo of PhotoGuard that lets you try it for yourself - or you can just watch the video of the demo:
