Hand Tracking With Nothing But A Webcam

Written by Harry Fairhead

Saturday, 09 June 2018


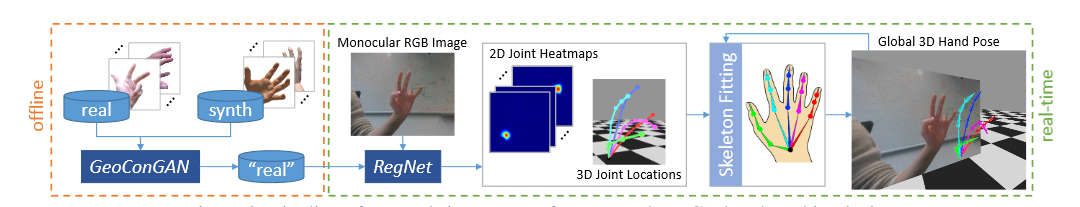

Hand trackers have been around for a while, but they haven't made much of an impression. A hand tracker that needs nothing but a webcam could be the breakthrough: webcams are everywhere, so the software could add hand tracking to almost any app at almost no cost.

There have been dedicated hand trackers before; the Leap Motion controller leaps to mind. But we have the usual chicken-and-egg situation. Who is going to take the trouble to include hand tracking in their code when there are so few devices out there, and who is going to buy a specialist device when there is so little software? Approaches that don't need specialist devices have still required the user to have an RGB-D depth camera or, at best, two RGB webcams.

The latest research seems to have solved the problem using just a single RGB webcam. This breaks the chicken-and-egg cycle because most laptops, tablets, phones and desktop machines already have a webcam. Even those that don't can be fitted with one for very little, and it is useful for other tasks too.

The team from the Max Planck Institute for Informatics, Stanford University and King Juan Carlos University are presenting their work at Cebit, which is a little unusual for an academic project.

You might guess that the method is based on a Convolutional Neural Network (CNN), but it isn't quite as straightforward as specifying a network and training it. In addition to the network there is a kinematic 3D hand model. This means the system always generates plausible hand poses, i.e. none with fingers in positions that look like a broken hand, and that its predictions are temporally smooth: the model moves smoothly from one plausible hand pose to the next.
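The article doesn't say exactly how the kinematic model is combined with the CNN, so the following is only a toy illustration of the two properties it describes (plausible poses and temporal smoothness), not the researchers' method. It assumes a single finger modelled as a planar chain of three bones, with hypothetical joint-angle limits and bone lengths. Raw per-frame angle estimates, standing in for what a network might output, are clamped to an anatomically plausible range, blended with the previous frame's estimate, and then converted to joint positions by forward kinematics.

```python
import math

# Hypothetical flexion limits (radians) for one finger's three joints.
JOINT_LIMITS = [(0.0, math.radians(90)), (0.0, math.radians(110)), (0.0, math.radians(80))]
# Hypothetical bone lengths, arbitrary units.
BONE_LENGTHS = [4.0, 2.5, 2.0]


def clamp_angles(angles):
    """Project raw angle estimates onto the anatomically plausible range."""
    return [min(max(a, lo), hi) for a, (lo, hi) in zip(angles, JOINT_LIMITS)]


def smooth(prev, current, alpha=0.5):
    """Exponentially blend the current estimate with the previous frame's.

    Blending two in-range poses keeps the result in range, so smoothing
    never undoes the plausibility constraint.
    """
    if prev is None:
        return list(current)
    return [alpha * c + (1 - alpha) * p for p, c in zip(prev, current)]


def forward_kinematics(angles):
    """Planar 3-bone chain: return (x, y) of the knuckle and each joint after it."""
    x, y, theta = 0.0, 0.0, 0.0
    points = [(x, y)]
    for length, a in zip(BONE_LENGTHS, angles):
        theta += a                     # each joint's angle accumulates along the chain
        x += length * math.cos(theta)
        y -= length * math.sin(theta)  # positive flexion bends the finger downward
        points.append((x, y))
    return points


def track(raw_frames, alpha=0.5):
    """Turn a sequence of raw per-frame angle estimates into smoothed joint positions."""
    prev = None
    poses = []
    for raw in raw_frames:
        angles = smooth(prev, clamp_angles(raw), alpha)
        prev = angles
        poses.append(forward_kinematics(angles))
    return poses
```

For example, `track([[0.1, 0.2, 0.1], [-0.5, 3.0, 0.2]])` clamps the implausible second frame (a knuckle bent backwards and an over-flexed middle joint) before smoothing it against the first. Clamping plus exponential smoothing is used here only because it is short; a real tracker would more likely fit the hand model to the network's output by optimization.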

The problem of acquiring labeled training data was solved by using a generative procedure that created synthetic images of hands in known poses. Starting from a database of 28,903 real hand images, a neural network, a generative adversarial network (GAN) as the "GANerated" in the project's title suggests, was used in a fairly complex way to generate additional synthetic images, which were then enhanced using the real images so that they look as good as real ones. Take a look at it in action; it looks fast and accurate:

The only problem is that there is no source code to download for the implementation. A note on the project website says that the "CNN Model+weights" and the generated dataset are "coming soon". With the model and weights it would be relatively easy to implement the tracker. There is no indication of how the software or the model will be licensed, but the fact that it is being demoed at Cebit suggests that there are some commercial aspirations.

More Information

GANerated Hands for Real-Time 3D Hand Tracking from Monocular RGB

Related Articles

Turn Any Webcam Into A Microsoft Kinect

Easy 3D Display With Leap Control

Leap 3D Sensor - Too Good To Be True?

Intel Ships New RealSense Cameras

Is This The Kinect Replacement We Have Been Looking For?


Last Updated (Saturday, 09 June 2018)