Landoop Lenses Adds Protobuf Support

Written by Kay Ewbank

Wednesday, 15 August 2018

The Lenses SQL streaming engine for Apache Kafka can now handle any type of serialization format, including Google’s Protobuf.

Lenses is a streaming data management platform that works on top of Apache Kafka. It supports the core elements of Kafka with a web user interface alongside features for querying real-time data and creating and monitoring Kafka topologies. It can be integrated with other systems including Kubernetes, and there's a free edition called Lenses Box that provides the entire Apache Kafka ecosystem in one Docker command. It has JDBC connectivity and clients for Python, Golang and Redux.

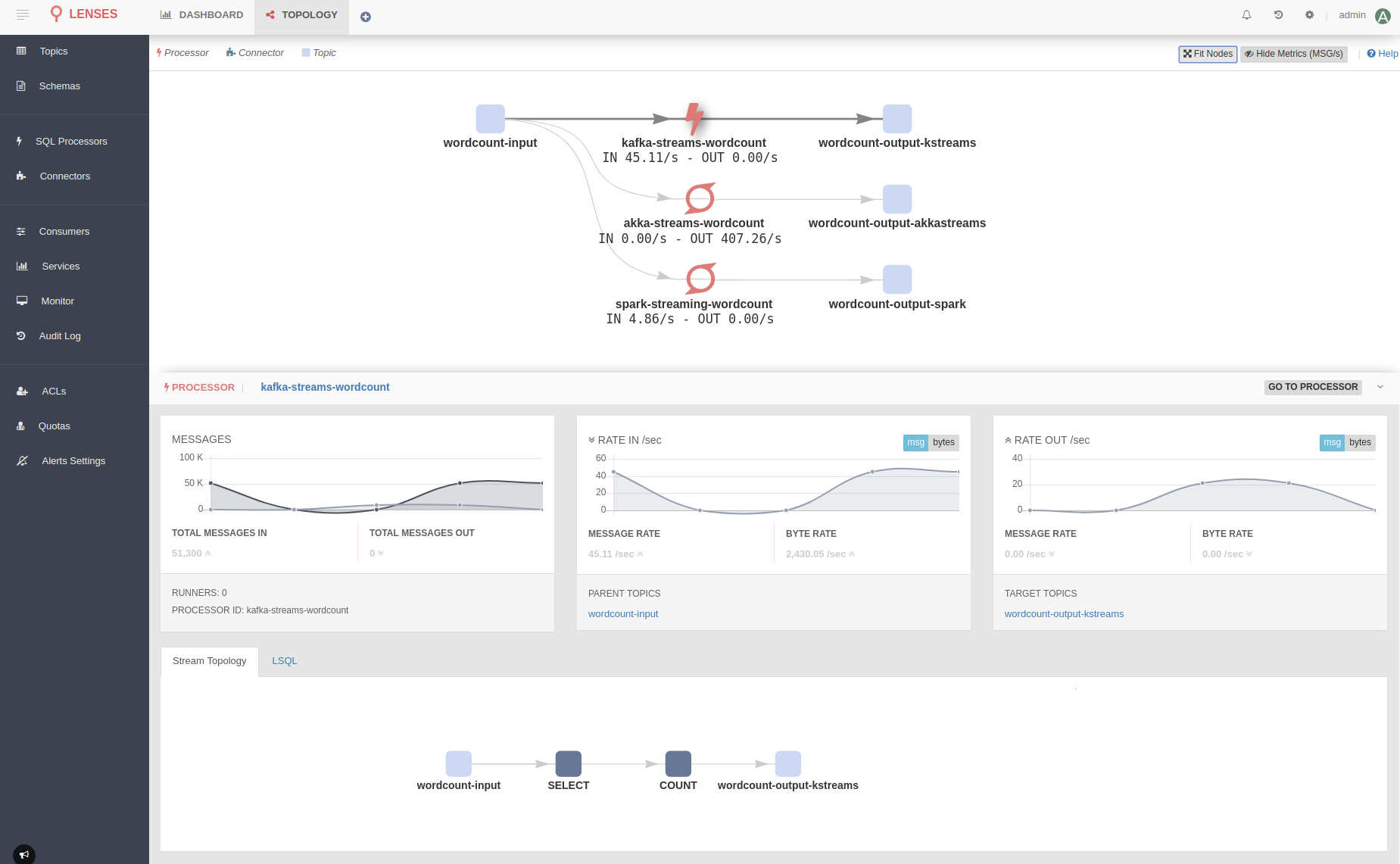

The updated version has support for streaming XML in addition to SQL. The XML decoder is used just as though the data were in SQL format, except that XML doesn't infer field types, so all fields are Strings and you have to use the Cast function to convert any fields that should really be numeric.

The support for Google's Protobuf is another change in this release. Protobuf is short for Protocol Buffers, Google's language-neutral, platform-neutral, extensible mechanism for serializing structured data. Landoop now supports Protobuf, along with any other custom formats, meaning companies can use their own serializers. The support for any serialization format covers both bound and unbound streaming SQL in Lenses, so joining and aggregating Protobuf data is as simple as working with JSON and Avro.

Array support has also been added to Lenses, and you can now address arrays and their elements using standard array syntax.

Another addition to the new version is a topology graph. This provides a high-level view of how data moves in and out of Kafka, and is intended to answer questions about those data flows.
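The point above about XML fields arriving as strings can be illustrated outside Lenses. The following is a minimal Python sketch, not Lenses code: it uses the standard library's XML parser, and the payload, the element names and the `decode` and `cast_fields` helpers are all made up for illustration. It shows why an explicit cast step is needed before numeric fields can be treated as numbers.

```python
import xml.etree.ElementTree as ET

# Hypothetical record; element names are invented for this example.
PAYLOAD = (
    "<reading>"
    "<sensor>s-17</sensor>"
    "<temperature>21.5</temperature>"
    "<count>3</count>"
    "</reading>"
)


def decode(xml_text):
    """Decode a flat XML record into a dict. Every value is a str,
    because XML itself carries no type information."""
    root = ET.fromstring(xml_text)
    return {child.tag: child.text for child in root}


def cast_fields(record, types):
    """Return a copy of the record with the named fields converted.
    Fields not listed in `types` stay as strings."""
    return {key: types.get(key, str)(value) for key, value in record.items()}


raw = decode(PAYLOAD)
typed = cast_fields(raw, {"temperature": float, "count": int})

print(raw)    # all values are strings
print(typed)  # temperature is a float, count is an int
```

In this sketch `raw["temperature"] + 1` would raise a `TypeError`, whereas `typed["temperature"] + 1` works, which is the same reason a cast is required when querying XML data.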

The developers say:

"Until now, Lenses has been answering the question on where your data is originating from, where it is moving to as well as who is processing and how covering both Connectors and Kafka SQL processors. With this new release Lenses fully supports all your micro-services and data processors."

A micro-service can be a simple Kafka consumer or producer, or even have a higher level of complexity using Kafka Streams, Akka Streams or even Apache Spark Streaming to handle real-time stream processing.

The final changes of note are that Lenses SQL is now context aware, so you no longer need to explicitly define the payload; there's a new SQL management page that provides access to topics, schemas and fields to build queries; and the CLI and Python library have been revamped.
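As a rough illustration of the kind of question a topology view answers (where data originates and where it moves to), a data flow can be modeled as a directed graph. This Python sketch is an assumption-laden toy, not how Lenses implements its topology graph: the component and topic names, and the `build` and `reachable` helpers, are hypothetical.

```python
from collections import defaultdict

# Hypothetical data flow: (source, destination) pairs mixing
# producers, topics, processors and connectors.
EDGES = [
    ("orders-producer", "orders"),
    ("orders", "enrich-sql-processor"),
    ("enrich-sql-processor", "orders-enriched"),
    ("orders-enriched", "elastic-sink-connector"),
]


def build(edges):
    """Build downstream and upstream adjacency maps from edge pairs."""
    downstream = defaultdict(set)
    upstream = defaultdict(set)
    for src, dst in edges:
        downstream[src].add(dst)
        upstream[dst].add(src)
    return downstream, upstream


def reachable(graph, start):
    """Return every node reachable from `start` by following the graph."""
    seen = set()
    stack = [start]
    while stack:
        node = stack.pop()
        for nxt in graph.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return seen


downstream, upstream = build(EDGES)

# Where does the data in "orders-enriched" originate?
origins = reachable(upstream, "orders-enriched")
# Where does data written to "orders" end up?
consumers = reachable(downstream, "orders")

print(sorted(origins))
print(sorted(consumers))
```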

Related Articles

Kafka Graphs Framework Extends Kafka Streams

Apache Kafka Adds New Streams API


Last Updated ( Tuesday, 01 August 2023 )