DARPA Funds Big Code Database Project

Written by Sue Gee

Wednesday, 12 November 2014

DARPA has awarded $11 million to a project initiated at Rice University that aims to "autocomplete" and "autocorrect" code using a database of "all the available code in the world". Is this a folly?

The funds, which extend over a period of four years, are for the PLINY project, which will involve more than two dozen computer scientists from Rice, the University of Texas-Austin, the University of Wisconsin-Madison and the company GrammaTech as part of DARPA's Mining and Understanding Software Enclaves (MUSE) program.

MUSE, which was announced at the beginning of this year:

"seeks to make significant advances in the way software is built, debugged, verified, maintained and understood. Central to its approach is the creation of a community infrastructure built around a large, diverse and evolving corpus of software drawn from the hundreds of billions of lines of open source code available today."

The PLINY project takes its name from Pliny the Elder, author in the first century of the common era of one of the world's first encyclopedias. Its aim is to create and use a massive repository of open source code so that a programmer can write the first few lines of their own code and have the rest automatically appear, "much like the software to complete search queries and correct spelling on today's Web browsers and smartphones."

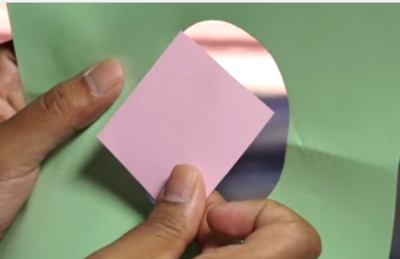

Giving details of PLINY in this video, Vivek Sarkar, who is Rice's Chair of the Department of Computer Science and the project's principal investigator, uses a stack of files and bits of colored paper to illustrate how it will work. He explains that it's not just a matter of putting the text of all the available code in the world into the database; instead, the researchers want to extract interesting features like functions, variables and dependencies. With the terabytes of data thus amassed in a "big code database", Sarkar goes on, "the question is how it can be used to help programmers to write new code or to fix old code."

This is answered by Swarat Chaudhuri, assistant professor of Computer Science at Rice, who, with the aid of both paper and scissors, explains how PLINY will take a piece of incomplete code (a piece of green paper with a large hole in it) and generate a query to search the large corpus of code snippets (scraps of pink paper) to find the best fit. Having identified something that isn't a perfect match, PLINY will use sophisticated analysis techniques (picks up scissors and trims pink shape), give it back to the programmer and we have a result that fits what the programmer already wrote.
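To make the paper-and-scissors demonstration a little more concrete, here is a minimal sketch of the two ideas described: extracting features (functions, variables, dependencies) from code, and ranking corpus snippets against an incomplete query. It is not PLINY's method, which the video does not specify. The names `extract_features`, `jaccard` and `best_fit`, the tiny `CORPUS`, and the choice of Jaccard overlap as the "best fit" measure are all assumptions made purely for illustration.

```python
import ast


def extract_features(source: str) -> dict:
    """Collect function names, assigned variables and imported modules."""
    functions, variables, dependencies = set(), set(), set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            functions.add(node.name)
        elif isinstance(node, ast.Name) and isinstance(node.ctx, ast.Store):
            variables.add(node.id)
        elif isinstance(node, ast.Import):
            dependencies.update(alias.name for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module:
            dependencies.add(node.module)
    return {
        "functions": sorted(functions),
        "variables": sorted(variables),
        "dependencies": sorted(dependencies),
    }


def jaccard(a: dict, b: dict) -> float:
    """Overlap of two feature dicts, treated as sets of (kind, name) pairs."""
    sa = {(kind, name) for kind, names in a.items() for name in names}
    sb = {(kind, name) for kind, names in b.items() for name in names}
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0


def best_fit(query: str, corpus: dict) -> str:
    """Return the key of the corpus snippet whose features best match the query."""
    q = extract_features(query)
    return max(corpus, key=lambda k: jaccard(q, extract_features(corpus[k])))


# Hypothetical two-snippet "corpus" (the pink scraps of paper).
CORPUS = {
    "circle": "import math\n\ndef area(r):\n    result = math.pi * r * r\n    return result\n",
    "greet": "def greet(name):\n    message = 'hi ' + name\n    return message\n",
}

# Incomplete code with a "hole" (the green paper): the body is still missing.
QUERY = "import math\n\ndef area(r):\n    pass\n"

features = extract_features(CORPUS["circle"])
best = best_fit(QUERY, CORPUS)
print(features)
print(best)
```

A real system would also need the "trimming" step, adapting the retrieved snippet to the programmer's context, which this sketch does not attempt.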

Sarkar concludes, saying that Rice has been historically strong in programming languages and now has a growing strength in big data, and that the exciting part about this project is that the two are really going to come together: "[We have] a dream team that is going to crack a really important problem for the future of computer software."

Why am I not convinced? After all, the pitch has raised $11m from DARPA. Loads of reasons, and I'm sure you'll be able to come up with more. To list just three:

Even in natural languages there is the problem of syntactic rules giving way to semantic freedom as the length of the text increases. For short pieces of text the syntax constrains what can come next to a small set of possibilities. If you try to predict further and further ahead then the syntax becomes less constraining and you can choose what comes next according to the meaning. The task of code completion on large chunks of code is like trying to predict the text of a novel from the first sentence. On a small scale the syntax constrains. On a larger scale the semantic possibilities multiply. This is what makes language meaningful.

Then there is an even deeper reason: computer languages are not at all like natural languages. This makes analogies like auto-correct inappropriate in the first place. You could argue that computer languages have a strict syntax and so they should be subject to even more statistical regularities.

The big problem is that computer languages are always critically unstable. Take a human language and delete a word or even swap a word and, while it might seem wrong or odd, the meaning will persist. Now try that with a program and the result will usually be something that cannot run at all, and if it does run the result may be unrelated to what it did before. Code that is close in text can be very different in behaviour. Computer languages have a high sensitivity to small changes, and working programs are language systems on the edge of chaos. This makes statistical methods more than challenging.
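The sensitivity point can be illustrated with a small sketch. The three source strings below are made up for this example: the second differs from the first by one deleted character, and the third by one deleted word. Text similarity is measured with the standard library's `difflib.SequenceMatcher`, chosen here only as a convenient stand-in for "closeness in text".

```python
import difflib

# Original: sums a list.
SRC_A = "def total(xs):\n    s = 0\n    for x in xs:\n        s += x\n    return s\n"
# One character deleted ("+="" becomes "="): still runs, but returns the last item.
SRC_B = "def total(xs):\n    s = 0\n    for x in xs:\n        s = x\n    return s\n"
# One word deleted ("in"): no longer valid Python at all.
SRC_C = "def total(xs):\n    s = 0\n    for x xs:\n        s += x\n    return s\n"


def runs(src: str) -> bool:
    """Return True if the source at least compiles."""
    try:
        compile(src, "<snippet>", "exec")
        return True
    except SyntaxError:
        return False


def load(src: str):
    """Execute the source and return the `total` function it defines."""
    namespace = {}
    exec(src, namespace)
    return namespace["total"]


similarity = difflib.SequenceMatcher(None, SRC_A, SRC_B).ratio()
data = [1, 2, 3, 4]
result_a = load(SRC_A)(data)
result_b = load(SRC_B)(data)

print(f"text similarity A vs B: {similarity:.3f}")
print("A returns", result_a, "and B returns", result_b)
print("C compiles:", runs(SRC_C))
```

The two runnable versions are almost identical as text yet compute different things, and the third does not run at all, which is the behaviour a purely textual or statistical notion of "nearby code" fails to capture.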
