JavaScript Canvas - WebGL 3D

Written by Ian Elliot

Monday, 06 December 2021


Vertex Data

We have spent a lot of time setting up the transformation matrices, but there is still the matter of providing the 3D vertex data that defines the geometry of the model to be rendered. To do this we have to make another connection between data in our JavaScript program and the vertex shader. In this case, however, the connection is between an array of vertex data in the JavaScript and an attribute in the shader.

As in most first examples, we are going to pass the shader an array of 3D coordinates that gives the position of each vertex, but it is important to know that you can pass data other than position. You can even pass multiple arrays to different attributes. Each attribute is read one element at a time when the vertex shader is run. Think of a for loop processing two or more arrays in parallel.

Making the connection between a JavaScript object and an attribute follows a standard set of steps, which the rest of this section works through in order.
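The parallel for loop mentioned above can be pictured in plain JavaScript. This is only a mental model, not WebGL code: the colors array, the vertexShader stand-in and runShaderOverAttributes are hypothetical names introduced for illustration, and the real work is done by the GPU.

```javascript
// Plain-JavaScript picture of how attributes are consumed; this is not WebGL code.
// The colors array is a hypothetical second attribute added for illustration.
const positions = [
  [-0.5, 0.5, 4],
  [0.5, 0.5, 4],
  [0.5, -0.5, 4],
];
const colors = [
  [1, 0, 0],
  [0, 1, 0],
  [0, 0, 1],
];

// Stand-in for the vertex shader: it sees one element of each attribute per call.
function vertexShader(position, color) {
  return { position, color };
}

// The shader is effectively run once per vertex, stepping through
// every attribute array in parallel.
function runShaderOverAttributes(positionData, colorData) {
  const results = [];
  for (let i = 0; i < positionData.length; i++) {
    results.push(vertexShader(positionData[i], colorData[i]));
  }
  return results;
}

const shaded = runShaderOverAttributes(positions, colors);
console.log(shaded.length); // one result per vertex
```

The point of the sketch is that the shader never sees a whole array, only the current element of each attribute.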

Connecting an attribute is more complicated than setting a uniform because we are creating and using an array of data. In this simple case the only vertex attribute that the shader processes is its position. The shader has a variable declared as:

attribute vec3 vertexPosition;

and we have to make the connection between this and the vertex position data that we are going to specify in the JavaScript. First we need to get a reference to vertexPosition:

var vertexPos = gl.getAttribLocation( program,

For it to behave like an array attribute we have to associate it with a buffer in the GPU. To do this we first have to create a buffer object, a completely general buffer without any particular structure, and bind it to the WebGL object's ARRAY_BUFFER target:

var vertexBuffer = gl.createBuffer();
gl.bindBuffer(gl.ARRAY_BUFFER, vertexBuffer);

After this we have a buffer in the GPU, but it isn't associated with any attribute in the vertex shader. To associate the structureless, nameless buffer we have just created with the attribute in the vertex shader we need to use:

gl.vertexAttribPointer(vertexPos,
                       3.0,
                       gl.FLOAT,
                       false,
                       0, 0);

This says that the vertexPos attribute is a three-component entity of type gl.FLOAT, and they should be un-normalized. The final two parameters are rarely used. The first specifies the stride of the data, i.e. the amount of storage allocated to each element, and the second specifies the offset, i.e. where the data starts. For standard JavaScript arrays both are set to 0 to indicate tight packing and no offset. The buffer that we have just created is an internal buffer in the sense that WebGL uses it to store and display whatever vertex data you have transferred to it. At this point we have a buffer set up and associated with an attribute and we can transfer data from the JavaScript program and then expect it to be used by the shader when the model is rendered. Let’s draw a single triangle to get started: var z = 4;

var vertices = new Float32Array(

[-0.5, 0.5, z,

0.5, 0.5, z,

0.5, -0.5, z]);

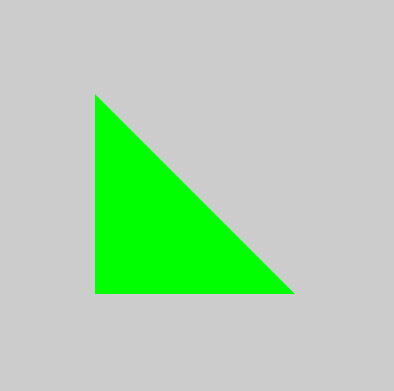

gl.bufferData(gl.ARRAY_BUFFER, vertices,
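The stride and offset parameters of vertexAttribPointer can be modeled in plain JavaScript. The following is a sketch, not part of the WebGL API: fetchAttribute is a hypothetical helper that mimics how an element is located in a buffer of floats, and the interleaved position-plus-color layout is an assumed example that does not appear in the program above.

```javascript
// Plain-JavaScript model of how stride and offset select an attribute element.
// fetchAttribute is a hypothetical helper, not part of the WebGL API.
function fetchAttribute(data, index, size, stride, offset) {
  const bytesPerFloat = Float32Array.BYTES_PER_ELEMENT; // 4 bytes
  // A stride of 0 means tightly packed: each element follows the previous one.
  const effectiveStride = stride === 0 ? size * bytesPerFloat : stride;
  const start = (offset + index * effectiveStride) / bytesPerFloat;
  return Array.from(data.subarray(start, start + size));
}

const z = 4;
const vertices = new Float32Array([
  -0.5, 0.5, z,
  0.5, 0.5, z,
  0.5, -0.5, z,
]);

// Stride 0 and offset 0, as in the triangle example: vertex 2 is the last triple.
console.log(fetchAttribute(vertices, 2, 3, 0, 0)); // the values 0.5, -0.5, 4

// Assumed interleaved layout: x, y, z, r, g, b per vertex, i.e. 24 bytes each.
const interleaved = new Float32Array([
  -0.5, 0.5, z, 1, 0, 0,
  0.5, 0.5, z, 0, 1, 0,
]);
// The color of vertex 1 starts 12 bytes into its 24-byte record.
console.log(fetchAttribute(interleaved, 1, 3, 24, 12)); // the values 0, 1, 0
```

With a single attribute per buffer, as in this chapter's triangle, the zero defaults are all that is needed; non-zero values only matter when several attributes share one buffer.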

You can vary z in the vertex data to see the effect of the perspective transformation. The bufferData method transfers the vertex data in the JavaScript object to the WebGL object's buffer, i.e. the typed array vertices is loaded into the buffer that is currently bound to ARRAY_BUFFER. The STATIC_DRAW parameter states that we are only going to write to this buffer very infrequently and the system can optimize for this situation. It doesn't stop us from writing to the buffer again, but it might not be as efficient. You can repeat this entire procedure to define additional attributes, each with its own buffer.

Finally, at some point before you draw the data in the buffer you need to enable the attribute array:

gl.enableVertexAttribArray(vertexPos);

If you enable it, WebGL will use its data whenever you ask for a draw or re-draw of the scene. If you don't enable it then the attribute behaves like a uniform, taking the same constant value for every vertex, which you can set using the gl.vertexAttrib methods.

Final Setup and Drawing

Now we are almost ready to render the model, but there are still some very simple initialization steps we need to take. The first is to set the color that the color buffer will be cleared to:

gl.clearColor(0.0, 0.0, 0.0, 1.0);

This doesn't clear the buffer, it just sets the color that it will be cleared to. Next we set the way that hidden pixels are removed, i.e. depth testing:

gl.enable(gl.DEPTH_TEST);
gl.depthFunc(gl.LEQUAL);

These two methods set up the system to discard pixels that are behind other pixels in the 2D rendered scene. Without them you would be able to see distant objects mixed in with closer objects. Now we can clear the buffers using the values we just set:

gl.clear(gl.COLOR_BUFFER_BIT | gl.DEPTH_BUFFER_BIT);

and finally we can ask the system to draw the triangle, or in general whatever is in the buffer:

gl.drawArrays(gl.TRIANGLES, 0, vertices.length / 3.0);
gl.flush();

Notice that we have to specify the number of vertices to draw as the last parameter, which here is the length of the array divided by three because each vertex has three components. The result is a fairly unimpressive green triangle.
However, it is a 3D green triangle. For example, if you change the z value the triangle changes its size. If you change the z value for different vertices then the triangle changes its appearance as it becomes skewed. Don't expect any lighting effects or shadows because we haven't used a shader that creates them.
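As a recap, the buffer, attribute and drawing steps from this section can be gathered into one function. This is a minimal sketch rather than the book's listing: uploadAndDraw is a hypothetical name, and it assumes that gl is a WebGL rendering context with a compiled program already in use, and that vertexPos is the attribute location obtained earlier.

```javascript
// Sketch only: collects the steps from this section into one function.
// uploadAndDraw is a hypothetical helper; gl is assumed to be a WebGL
// rendering context and vertexPos the attribute location obtained earlier.
function uploadAndDraw(gl, vertexPos, vertices) {
  // Create a buffer and make it the current ARRAY_BUFFER.
  const vertexBuffer = gl.createBuffer();
  gl.bindBuffer(gl.ARRAY_BUFFER, vertexBuffer);

  // Describe the layout: three floats per vertex, tightly packed, no offset.
  gl.vertexAttribPointer(vertexPos, 3, gl.FLOAT, false, 0, 0);

  // Copy the vertex data to the GPU; STATIC_DRAW hints at infrequent updates.
  gl.bufferData(gl.ARRAY_BUFFER, vertices, gl.STATIC_DRAW);

  // Without this the attribute would use a single constant value.
  gl.enableVertexAttribArray(vertexPos);

  // Set the clear color and depth test, then clear and draw.
  gl.clearColor(0.0, 0.0, 0.0, 1.0);
  gl.enable(gl.DEPTH_TEST);
  gl.depthFunc(gl.LEQUAL);
  gl.clear(gl.COLOR_BUFFER_BIT | gl.DEPTH_BUFFER_BIT);

  // Each vertex has three components, so the count is length / 3.
  const vertexCount = vertices.length / 3;
  gl.drawArrays(gl.TRIANGLES, 0, vertexCount);
  return vertexCount;
}
```

In a browser it would be called as uploadAndDraw(gl, vertexPos, vertices) after the shaders and uniforms have been set up. Passing gl in as a parameter keeps the function free of globals, which also makes it easy to exercise with a stand-in object outside the browser.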

From here you need to create more sophisticated shaders, use matrix operations to set up the view and position, define more complex models with color and texture attributes, and experiment with lighting and animation. You can see a complete listing of this program at www.iopress.info.


Last Updated ( Monday, 06 December 2021 )