Processor Design - RISC, CISC & ROPs

Written by Harry Fairhead

Friday, 23 March 2018


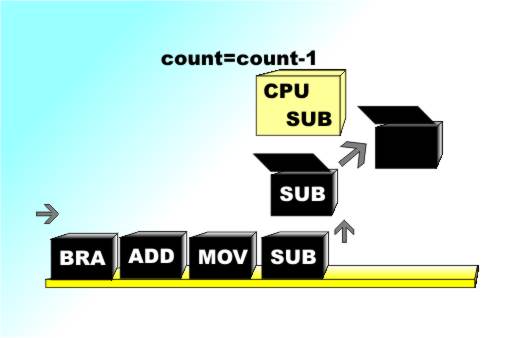

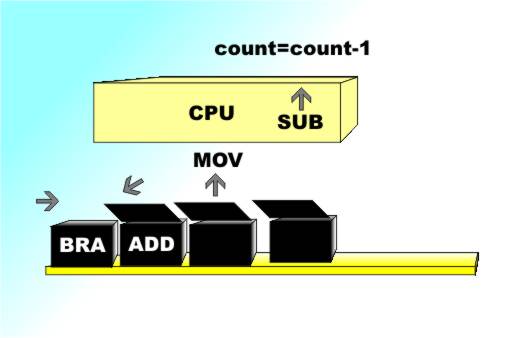

ROPs?

Why do we seem to prefer CISC processors? The reason is mainly the need to be backward compatible with the early microprocessors and the way that processors evolve by accumulating features.

Over time the x86 family of processors has evolved beyond its initial CISC design. What has happened is that RISC design principles have been incorporated into the core of the processors, starting with the Pentium line and leading up to today's multicore x86 chips, without changing their overall nature. This was a technique first used by Intel's competitors to produce chips that outperformed the early x86 family processors. NexGen was the first and was so successful that it was bought by AMD, but it didn't take long for Intel to use the same ideas to make faster Pentiums.

The way that this works is that the processor core executes a set of reduced instructions called ROPs (RISC operations). The standard complex instructions of the Pentium instruction set are first broken down into a sequence of ROPs and then obeyed. Believe it or not this strange two-stage execution system is actually faster! The programmer doesn't have to know anything about the deep architectural changes in the chip because it translates the old instruction set using yet more hardware.

This is very similar to the use of a Just In Time (JIT) compiler for a high level language that converts complex instructions into a set of simpler machine code or intermediate code instructions just before they are executed.

There is also a move towards Very Long Instruction Word (VLIW) computers, which looks like a rejection of the RISC principle - but it isn't really. Once you have a reduced instruction set there isn't much scope for making each instruction faster. One way forward is to increase the size of each instruction so that it specifies several simple operations and once again more gets done per clock cycle. This may look like a return to CISC but again there are only a few, highly optimised, VLIW instructions.

The big split in design technologies, RISC v CISC, is just part of the story.
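To make the ROP idea described earlier in this section more concrete, here is a minimal sketch in Python of a decoder that breaks a complex instruction into simpler operations before a tiny core executes them. The instruction names (`ADDM`, `ADD`, `LOAD`, `STORE`), the `tmp` register and the dictionary-based machine state are all invented for illustration and do not correspond to any real x86 encoding or to how any particular chip is built.

```python
from typing import Dict, List, Tuple

Instruction = Tuple[str, str, str]
MicroOp = Tuple[str, str, str]


def decode(instruction: Instruction) -> List[MicroOp]:
    """Translate one 'complex' instruction into a list of simple ROPs (toy model)."""
    op, dst, src = instruction
    if op == "ADDM":
        # Hypothetical CISC-style read-modify-write: memory[dst] += register[src]
        return [("LOAD", "tmp", dst), ("ADD", "tmp", src), ("STORE", dst, "tmp")]
    if op == "ADD":
        # Already a simple register-to-register operation: one ROP
        return [("ADD", dst, src)]
    raise ValueError(f"unknown instruction: {op}")


def execute(rops: List[MicroOp], regs: Dict[str, int], mem: Dict[str, int]) -> None:
    """The 'RISC core': it only understands LOAD, ADD and STORE."""
    for kind, a, b in rops:
        if kind == "LOAD":
            regs[a] = mem[b]
        elif kind == "ADD":
            regs[a] += regs[b]
        elif kind == "STORE":
            mem[a] = regs[b]
        else:
            raise ValueError(f"unknown ROP: {kind}")


def run(program: List[Instruction], regs: Dict[str, int], mem: Dict[str, int]) -> int:
    """Decode and execute each instruction; return the number of ROPs executed."""
    total = 0
    for instruction in program:
        rops = decode(instruction)
        execute(rops, regs, mem)
        total += len(rops)
    return total
```

Running `run([("ADDM", "x", "r1")], {"r1": 5, "tmp": 0}, {"x": 10})` leaves `mem["x"]` at 15 after three ROPs. The program only ever sees the single `ADDM` instruction, which is the point made above about the programmer not needing to know what the core does internally.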
Things get even more interesting when you start looking at what can be done to tweak the basic design of a processor.

Pipelining

If software can be multi-tasking so can hardware. The early processor designs carried out one part of a single instruction at every clock cycle. First the instruction had to be fetched from memory, then it had to be decoded, then perhaps data had to be fetched, the operation was then carried out and so on. The exact steps vary according to the processor but there are always a number of steps involved in completing an instruction.

A non-pipeline processor has to do everything before moving on to the next instruction.

Modern processors can speed things up by overlapping the execution of commands. In other words, by starting a new instruction before the current one is completed, the number of instructions per clock cycle can be increased. This idea is called “pipelining” and you can think of it as bringing the ideas of a production line to executing instructions.

A pipeline processor on the other hand can be working on more than one instruction at a time – just like a production line.
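The difference between the two approaches can be sketched with a simple cycle count. The following Python is an idealised model, assuming every stage takes exactly one clock cycle and nothing ever stalls. The four stage names are illustrative and are not taken from any specific processor.

```python
from typing import List

STAGES = ["fetch", "decode", "read", "execute"]  # illustrative stage names


def cycles_unpipelined(n_instructions: int, n_stages: int) -> int:
    """Each instruction passes through every stage before the next one starts."""
    return n_instructions * n_stages


def cycles_pipelined(n_instructions: int, n_stages: int) -> int:
    """Once the pipeline has filled, one instruction completes every cycle."""
    if n_instructions == 0:
        return 0
    return n_stages + n_instructions - 1


def pipeline_timeline(n_instructions: int, stages: List[str]) -> List[str]:
    """Describe which instruction occupies which stage on each clock cycle."""
    rows = []
    for cycle in range(cycles_pipelined(n_instructions, len(stages))):
        busy = []
        for position, stage in enumerate(stages):
            # Instruction i reaches the stage at index `position` on cycle i + position
            instruction = cycle - position
            if 0 <= instruction < n_instructions:
                busy.append(f"{stage}=i{instruction}")
        rows.append(f"cycle {cycle}: " + " ".join(busy))
    return rows
```

With five instructions and four stages, `cycles_unpipelined(5, 4)` gives 20 cycles while `cycles_pipelined(5, 4)` gives 8. Printing the rows of `pipeline_timeline(5, STAGES)` shows the production line filling up, running full and then draining.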

The x86 Core architecture, for example, has up to 14 stages of pipelining. Longer pipelines have to be better?! Not necessarily. The big problem with pipelining is what happens if the instruction that you've just completed makes the partially completed instructions still in the pipeline invalid. In this case the pipeline has to be restarted and this costs clock cycles. This can be so expensive that most benchmarks showed that the Pentium 4 with a 20 stage pipeline was slower than a Pentium III with a 10 stage pipeline at the same clock speed.

Branch prediction

One way of avoiding the need to restart a pipeline is to always make sure that it is filled with the right instructions. When a processor reaches a branch instruction it has the choice of one of two possible sets of instructions that it could follow. Branch prediction aims to guess which set is the right set! It sounds like fortune telling but it works because picking the wrong branch costs about the same as stalling until the branch is resolved, while picking the right branch pays off. Basically it is a question of choosing the branch most likely to be taken and this is a matter of statistics.

SuperScalar And More

In physics a scalar is a single number - as opposed to a vector which is a lot of numbers. Most processors do arithmetic one data value at a time and these are called scalar processors. A superscalar processor can split its instruction stream into two or more pipelines and, in principle, get things done twice as quickly. In most cases this doesn't happen because one pipeline ends up having to wait for the other. The Pentium 4 "hyperthreading" architecture lets two threads of instructions share the core's parallel execution paths and it uses out-of-order execution to try to keep them busy. Out-of-order execution is a difficult thing to implement because in any sensible program the results of one instruction depend on the instructions that went before it. It goes hand in hand with "speculative execution", so called because at the end of the day you may well have to throw away the results!
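The statistical side of branch prediction can be illustrated with a two-bit saturating counter, a textbook scheme that is used here only as an example; the article does not say which scheme any particular processor uses. In this Python sketch the loop pattern and the flush penalties (one cycle per pipeline stage) are assumptions made for illustration.

```python
from typing import List


class TwoBitPredictor:
    """Saturating counter: states 0 and 1 predict 'not taken', 2 and 3 predict 'taken'."""

    def __init__(self, state: int = 2) -> None:
        self.state = state

    def predict(self) -> bool:
        return self.state >= 2

    def update(self, taken: bool) -> None:
        # Move one step towards the observed outcome, saturating at 0 and 3
        if taken:
            self.state = min(3, self.state + 1)
        else:
            self.state = max(0, self.state - 1)


def count_mispredictions(outcomes: List[bool], predictor: TwoBitPredictor) -> int:
    """Count how often the predictor's guess differs from what the branch did."""
    misses = 0
    for taken in outcomes:
        if predictor.predict() != taken:
            misses += 1
        predictor.update(taken)
    return misses


def count_static_mispredictions(outcomes: List[bool], guess: bool) -> int:
    """Baseline: always make the same guess regardless of history."""
    return sum(1 for taken in outcomes if taken != guess)


def flush_cost(mispredictions: int, pipeline_stages: int) -> int:
    """Assumed penalty: one wasted cycle per pipeline stage for each wrong guess."""
    return mispredictions * pipeline_stages


# A loop-closing branch: taken 9 times, then falls through once, repeated 10 times
loop_branch = ([True] * 9 + [False]) * 10
```

On this pattern `count_mispredictions(loop_branch, TwoBitPredictor())` reports 10 wrong guesses out of 100, whereas always guessing "not taken" is wrong 90 times. Under the assumed penalty, the same 10 wrong guesses waste 200 cycles on a 20 stage pipeline and 100 cycles on a 10 stage one, which is the trade-off behind the Pentium 4 versus Pentium III comparison above.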
You can see that this all relates to branch prediction and a general tendency for processors to do extra work just in case it proves to save them time! Branch prediction and its extension to speculative execution, i.e. carrying out instructions just in case they might be on the branch that is eventually taken, is one of the main tools in making processors go fast.

Unfortunately it has security problems. It turns out that you can get a modern processor to speculatively execute code that the program logic would ensure is never executed - like a read beyond the end of a buffer. In principle speculative execution has no side effects but modern processors are so complex that it's almost impossible to ensure this. In this case it turned out that speculative execution modifies the cache and this can be detected by another program. The problem resulted in the Meltdown and Spectre exploits, which have left the majority of modern processors open to hacking.

SIMD

Along with the superscalar idea there is also SIMD - Single Instruction Multiple Data. This is another way of getting the job done faster by doing the same operation on multiple data items. For example, if you want to add 10 numbers together why not bring them all into the processor in one fetch and add them using one huge adder. This is exactly what the MMX extensions to the Pentium and related processors allow programmers to do. The MMX instructions perform arithmetic on small arrays of integer values in one operation. The Pentium III extended the idea to 128-bit registers holding floating point values with SSE and the Pentium 4 took this another step further with SSE2 and double precision values. The Intel Core architecture extends this to SSE4.

The big problem with SIMD hardware is that it only makes a difference if you can make use of it. That is, if you only have one number to add then the ability to add ten in the same time is wasted. What this means is that SIMD improvements, like SSE, really only make a difference to multimedia, graphics, games and signal processing software.
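The point about SIMD only helping when there is enough data can be shown by counting operations. This Python sketch is a model rather than real SIMD code: it adds two lists element by element and simply counts how many "instructions" a scalar unit and a hypothetical four-lane vector unit would need. The lane count of four is an assumption chosen for illustration.

```python
from typing import List, Tuple

LANES = 4  # hypothetical number of values one vector instruction handles


def scalar_add(a: List[float], b: List[float]) -> Tuple[List[float], int]:
    """One add instruction per pair of values."""
    result = []
    instructions = 0
    for x, y in zip(a, b):
        result.append(x + y)
        instructions += 1
    return result, instructions


def simd_add(a: List[float], b: List[float], lanes: int = LANES) -> Tuple[List[float], int]:
    """One add instruction per group of up to `lanes` pairs of values."""
    result = []
    instructions = 0
    for start in range(0, min(len(a), len(b)), lanes):
        chunk_a = a[start:start + lanes]
        chunk_b = b[start:start + lanes]
        # Modelled as a single instruction that adds every lane at once
        result.extend(x + y for x, y in zip(chunk_a, chunk_b))
        instructions += 1
    return result, instructions
```

For two lists of ten values the scalar version needs 10 instructions and the four-lane version needs 3, with identical results. For lists holding a single value both need exactly 1, so the extra hardware buys nothing.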
If all you want to do is run Excel or Word then SIMD extensions have little impact. The best example of SIMD processors in use today are the many different types of GPU - Graphics Processing Units. A GPU is a SIMD processor optimized to work on the sort of data operations that are needed in graphics.

Back to clock speeds?

Sadly, for all our cleverness, increasing clock speeds is still the best way to make a processor go faster. The Pentium 4 was expected to reach 10GHz but it stalled at 3.8GHz. For years the highest speeds achievable seemed to be below 4GHz. It was not until June 2013 that a production processor, the AMD FX-9590, managed to reach 5GHz. People have achieved overclocked speeds of just short of 9GHz but only by taking extreme measures to cool the device.

The problem is that while transistors have been shrinking, allowing us to get more on a single chip, the law that smaller transistors use less power (known as Dennard scaling) ran out. The amount of power a processor uses goes up as the clock speed increases. Until about the mid 2000s the power went down with shrinking transistors and hence the clock rate could go up, but after this time things stopped being so convenient. The result is that you can run a processor much faster than 3 to 4GHz but only if you are prepared to watch it melt.

Fast logic is expensive logic and so one thing that processor designers have been doing for some time is to use split clock speeds. They run the external logic at much lower speeds than the processor and even run different parts of the processor at different speeds. This of course is another opportunity to make use of the cache principle to speed things up when systems run at different speeds.

Intel always planned to make faster versions of the Pentium architecture but now manufacturers have mostly withdrawn from the clock speed race. Instead the hope for the future is to put ever more processors or cores on the same chip.
Multi-core processors can be seen as the only thing you can do when all of the other ways to make a processor go faster have run out of steam. The big problem is that you may have multiple cores but, just like SIMD, if you don't have work for them to do they don't help. The big challenge for the future is making software that can take advantage of multi-processor configurations.

I wouldn't say that the days of the ever faster processor are over but the speed increases seem to have dried up for the moment. Today the hopes for future speed increases are in more cores in more heterogeneous systems. It isn't only the multicore CPU that does processing but also the GPU with its hundreds or even thousands of simple processing cores.

Related Articles

The Computer - What's The Big Idea?

Flash Memory - Changing Storage

Inside the Computer - Addressing

Risc Pioneers Gain Turing Award


Last Updated ( Friday, 23 March 2018 )