| Microsoft Goes All Out On Generative AI |

| Written by Nikos Vaggalis |

| Wednesday, 19 June 2024 |

|

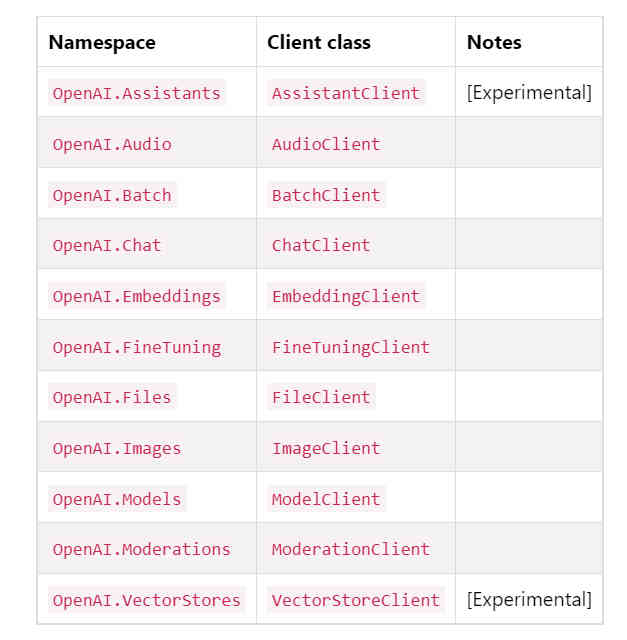

Over recent days, Microsoft has announced both the official OpenAI library for .NET and the AI Toolkit for Visual Studio Code.

From self-paced courses, as described in "Microsoft's Generative AI for Beginners", to extensions that turn PostgreSQL into a vector store or integrate it with Azure's OpenAI services, to partnerships with LLM makers like Mistral AI, Microsoft is upping its GenAI game. These two releases continue that trend.

The OpenAI .NET library expands the AI ecosystem for .NET developers, allowing them to integrate the OpenAI and Azure OpenAI services into their code through their respective REST APIs. The version in question is 2.0.0-beta and the new features it comes with are:

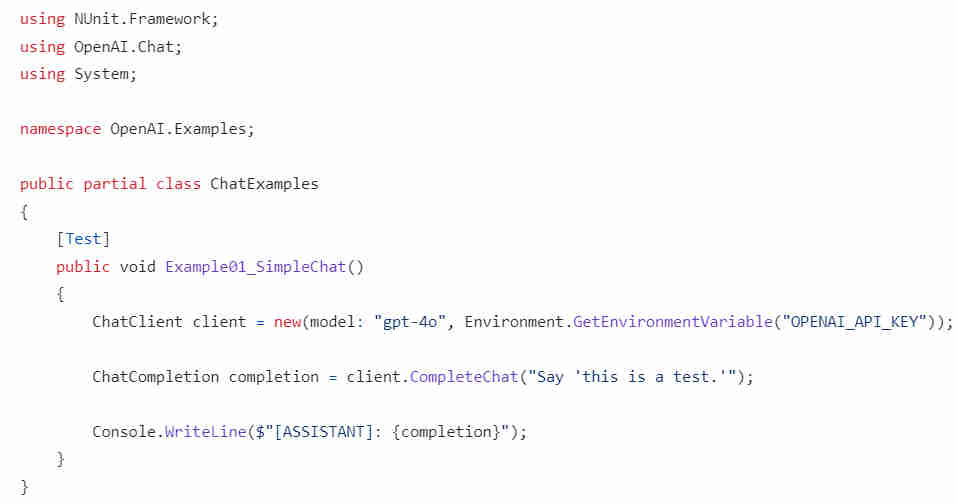

To call the OpenAI REST API, you will need an API key. To obtain one, first create a new OpenAI account or log in. Next, navigate to the API key page and select "Create new secret key", optionally naming the key.

Add the client library to your .NET project with NuGet, using your IDE or the dotnet CLI:

`dotnet add package OpenAI --prerelease`

To put it to use, the following snippet illustrates how to call the chat completions API:

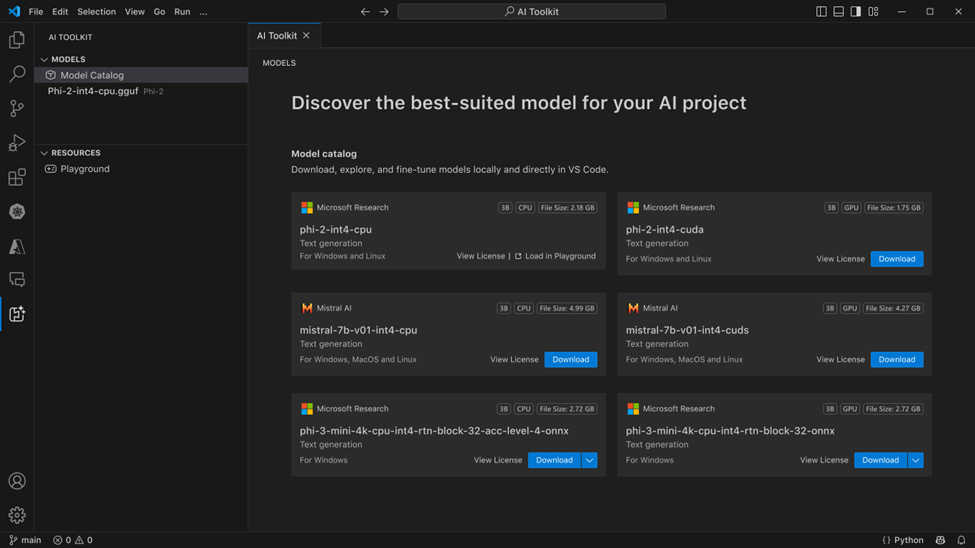

That said, it's interesting that this library is released in parallel with another SDK, Semantic Kernel, which serves a similar purpose in accessing LLMs from your code. The main difference is that with Semantic Kernel you get access to multiple models, not just OpenAI's, and you can choose between the C#, Java, Python and JavaScript versions. It's best to explore both options before rushing to write code. For an overview, make sure to check our "Access LLMs From Java code With Semantic Kernel".

Now let's turn our attention to the new VS Code extension called AI Toolkit. The AI Toolkit enables you to download, test, fine-tune, and deploy AI models from Azure AI Studio and HuggingFace, locally or in the cloud. To get access to the models, you invoke the extension's Model Catalogue discovery menu, which includes models that run on both Windows and Linux, on CPU and GPU.

The AI Toolkit comes with a local REST API web server that uses the OpenAI chat completions format. This enables you to test your application locally, without having to rely on a cloud AI model service, by using the endpoint:

http://127.0.0.1:5272/v1/chat/completions

Use this option if you intend to switch to a cloud endpoint in production. The toolkit also comes with the ONNX Runtime. Use that option if you intend to ship the model with your application, with inferencing on device.

In summary, the AI Toolkit makes it dead easy to discover and evaluate models from the comfort of your IDE. Note, however, that this is not a code assistant like Visual Studio IntelliCode, which provides help for writing code.

The gist is that big players like Amazon, IBM and Microsoft have all started incorporating LLMs in their products to give their customers the edge. When the differences between those products are too small, the deciding factor becomes the degree of convenience offered by the interface they provide to their users. And Microsoft looks to be winning this game.
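To make the AI Toolkit's local endpoint more concrete, here is a minimal Python sketch of a request in the OpenAI chat completions format. It uses only the standard library. The endpoint path and local address come from the article; the helper names, the model name you pass in, and the choice of `https://api.openai.com` as the cloud target are assumptions made for illustration, not part of the AI Toolkit or of any Microsoft SDK.

```python
import json
import urllib.request

# Local AI Toolkit server address quoted in the article.
LOCAL_BASE_URL = "http://127.0.0.1:5272"
# OpenAI's hosted API, used here only to illustrate switching targets.
CLOUD_BASE_URL = "https://api.openai.com"


def build_chat_request(base_url, model, prompt, api_key=None):
    """Build (but do not send) a chat completions POST request.

    Hypothetical helper: `model` must be whatever name the target
    server expects; no model name is assumed here.
    """
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Cloud services typically require a bearer token.
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def send_chat_request(request, timeout=60):
    """Send the request and return the first choice's message text.

    Assumes the server replies in the OpenAI chat completions format.
    """
    with urllib.request.urlopen(request, timeout=timeout) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]
```

With a model loaded in the AI Toolkit, something like `send_chat_request(build_chat_request(LOCAL_BASE_URL, "<your-local-model>", "Hello"))` should return the model's reply. Moving to a cloud endpoint then means changing only the base URL, the model name and the API key, which is the point of the shared request format.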

More Information

OpenAI .NET API library

Related Articles

Access LLMs From Java code With Semantic Kernel

Azure AI And Pgvector Run Generative AI Directly On Postgres

Azure Database Flexible Server for PostgreSQL Boosted By AI

Microsoft's Generative AI for Beginners

|

| Last Updated ( Wednesday, 01 January 2025 ) |