Can An LLM Hear And See?

Written by Mike James

Wednesday, 14 May 2025

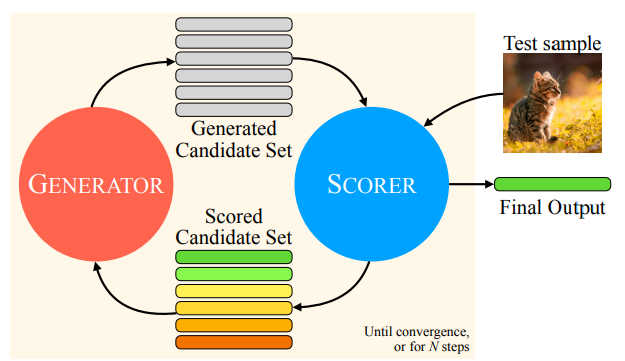

Large Language Models are fascinating and are probably practically important, but if you know how they work, what they manage to achieve is remarkable. Is it possible that a language model can both see and hear without further training? This is the claim made by a team of researchers affiliated with Meta AI, and I will tell you now that the answer isn't clear, but what has been done may well be important nevertheless.

The paper reporting the work is called "LLMs can see and hear without any training". For me, this is a hype too far. The experiments take an LLM and use it to implement a Multimodal Iterative LLM Solver, or MILS. The architecture is the well-known actor-critic, which in turn is just a feedback mechanism. The LLM is used as a generator of text that a scorer, or critic, then evaluates in terms of how well the text meets the requirements. The evaluations are fed back to the LLM and it tries to improve:

"The goal of the GENERATOR is to produce candidate outputs C, that solve a given task. It takes as input some text, T, which contains a description of the task, along with scores S (if any) from the SCORER for the previous optimization step. It leverages this signal to produce the next set of candidate generations. The GENERATOR is typically modeled using an LLM, given its ability to take text as input and reason over it."

Notice that this is learning, but it isn't gradient descent or anything simple. The LLM appears to be "reasoning" about how to improve.
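The generator-scorer loop can be sketched in a few lines of Python. This is a toy under stated assumptions, not the paper's code: `toy_generator` stands in for the LLM (it simply edits the best-scoring captions instead of reasoning about them), `toy_scorer` stands in for the pretrained multimodal model that would compare a caption with a real image, and the hidden `TARGET_CONCEPTS` set plays the role of that image. All of these names are hypothetical.

```python
import random
from typing import Callable, List, Tuple

Scored = List[Tuple[float, str]]  # (score, candidate) pairs fed back to the generator

# Hypothetical stand-in for the image being captioned.
TARGET_CONCEPTS = {"dog", "running", "beach", "sunset"}
VOCAB = ["dog", "cat", "running", "sitting", "beach", "park",
         "sunset", "night", "red", "ball"]


def toy_scorer(caption: str) -> float:
    """Hypothetical SCORER: fraction of target concepts mentioned,
    minus a small penalty for each distinct irrelevant word."""
    words = set(caption.split())
    hits = len(words & TARGET_CONCEPTS)
    return hits / len(TARGET_CONCEPTS) - 0.01 * len(words - TARGET_CONCEPTS)


def toy_generator(task: str, scored: Scored, n: int,
                  rng: random.Random) -> List[str]:
    """Hypothetical GENERATOR. A real one would prompt an LLM with the task
    text T and the scored candidates; this one just edits the best captions."""
    if not scored:  # first step: no scores yet, so guess at random
        return [" ".join(rng.sample(VOCAB, 2)) for _ in range(n)]
    best = [c for _, c in sorted(scored, reverse=True)[:3]]
    out = []
    for _ in range(n):
        words = rng.choice(best).split()
        if len(words) > 1 and rng.random() < 0.5:
            words.pop(rng.randrange(len(words)))  # drop a word
        else:
            words.append(rng.choice(VOCAB))       # add a word
        out.append(" ".join(words))
    return out


def optimize(task: str,
             generator: Callable[[str, Scored, int, random.Random], List[str]],
             scorer: Callable[[str], float],
             steps: int = 20, n: int = 8, seed: int = 0) -> Tuple[float, str]:
    """Generate, score, feed the scores back, repeat. No weights are updated."""
    rng = random.Random(seed)
    scored: Scored = []
    for _ in range(steps):
        candidates = generator(task, scored, n, rng)
        scored += [(scorer(c), c) for c in candidates]
    return max(scored)


best_score, best_caption = optimize("Write a short caption for the image.",
                                    toy_generator, toy_scorer)
print(f"{best_score:.2f}  {best_caption}")
```

The point to notice is that nothing is trained: the only thing that changes from one step to the next is the list of scored candidates handed back to the generator.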

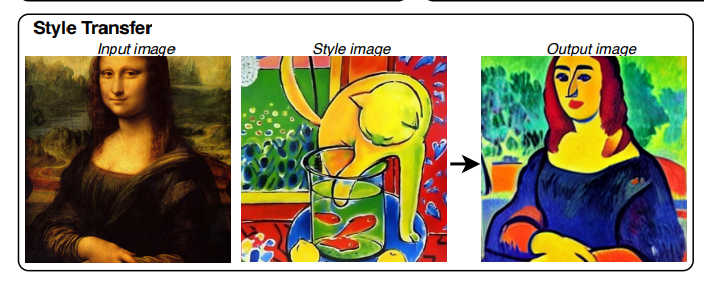

The experiments weren't limited to text outputs, however, as it is very easy to feed a text description of a scene into a generative AI and let it create an image, which the scorer can then evaluate. The experiments demonstrate that MILS can effectively caption photos and video clips. The text can be converted into audio using a text-to-speech system, and then we also have audio captioning. The same idea can be used to implement image generation: the LLM describes a photo, which is generated by another AI and then scored on how well it fits the specification. A later experiment extends this to style transfer. In this case the scorer evaluates how well the style has been transferred to the target image.
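For image generation the feedback loop itself can stay the same; what changes is the scorer. Instead of scoring the LLM's text directly, the text is used as a prompt, rendered into an image, and the image is scored against the specification. The sketch below assumes hypothetical `toy_render` and `toy_similarity` functions standing in for a real text-to-image model and an image-text similarity model (for example a CLIP-style model), and reduces an "image" to a set of concept words so it runs without any models.

```python
from typing import Callable, Set

# Hypothetical stand-in: an "image" is reduced to the set of concepts it depicts.
Image = Set[str]


def make_generation_scorer(render: Callable[[str], Image],
                           similarity: Callable[[Image, str], float],
                           spec: str) -> Callable[[str], float]:
    """Turn a text-to-image model plus an image-text similarity model into a
    SCORER for prompts: render the prompt, then compare the image with the spec."""
    def score(prompt: str) -> float:
        return similarity(render(prompt), spec)
    return score


def toy_render(prompt: str) -> Image:
    # Hypothetical text-to-image model: it can only "draw" a few known concepts.
    drawable = {"dog", "cat", "beach", "sunset", "ball"}
    return set(prompt.lower().split()) & drawable


def toy_similarity(image: Image, spec: str) -> float:
    # Hypothetical image-text score: Jaccard overlap of image concepts and spec words.
    wanted = set(spec.lower().split())
    union = image | wanted
    return len(image & wanted) / len(union) if union else 0.0


scorer = make_generation_scorer(toy_render, toy_similarity, "dog beach sunset")
print(scorer("a dog on a beach at sunset"))  # prints 1.0
print(scorer("a cat with a ball"))           # prints 0.0
```

Because the result is just another text-to-score function, it could be dropped into the same generate-and-score loop used for captioning. Style transfer would presumably follow the same pattern, with a scorer that measures style rather than content.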

You can see that these experiments prove that an LLM can reason about audio and video, but I don't think they demonstrate that an LLM can see or hear. If you think that an LLM is just a sophisticated auto-complete, then perhaps these results leave your outlook unchanged. But... if an LLM captures the statistical structure of language, then perhaps it also captures the statistical structure of the world. Language is special because it is an abstract model of the world, and the fact that LLMs can improve their outputs based on guidance provided by a critic suggests that they can "reason" about real-world things within their model of language. This is what the title of the paper is intended to suggest.

More Information

LLMs can see and hear without any training


Last Updated (Wednesday, 14 May 2025)