Style Transfer Makes A Movie

Written by David Conrad

Sunday, 22 January 2017

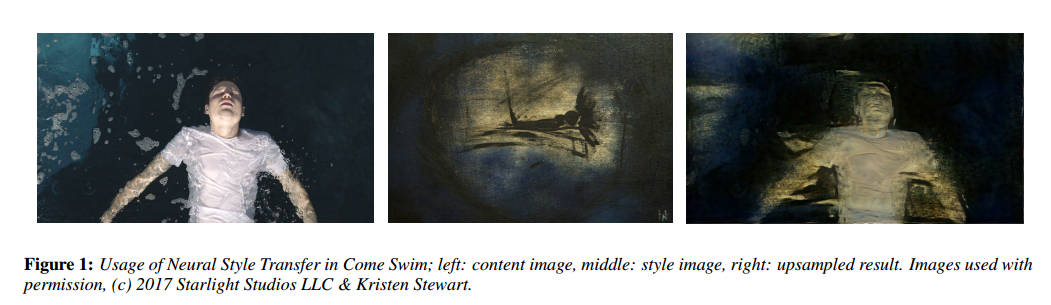

Only a few months ago, neural-network-based style transfer was the latest thing. Now there is a short, serious film that contains sections generated by style transfer. The director is actress Kristen Stewart, who is also a co-author of the accompanying paper. It is highly probable that most of the heavy lifting was done by Adobe software engineer Bhautik Joshi, but the credits for Stewart and producer David Shapiro are probably justified on creative grounds, and simply by their being able to understand and use a cutting-edge technique well enough to realize their artistic intent. The film, Come Swim, was written and directed by Stewart and uses one of her paintings to establish the style. You can see a short clip from the film in the video, although it includes none of the style-transferred portions.

In the usual approach, style transfer uses a neural network trained for image recognition to extract the features that characterize the style of a particular painting. These can then be applied to a new image to transform it into the same style. In this case the processing pipeline ran on many GPU instances provided by Amazon EC2. Even then the output was only 1024 pixels wide, and it had to be upscaled to 2048 pixels to be incorporated into the rest of the movie. Processing took about 40 minutes per frame on each instance.
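To make the idea of "features that characterize the style" a little more concrete, here is a minimal sketch of the Gram-matrix formulation commonly used in neural style transfer. It is an assumption that this is close to what the film's pipeline optimized, as the article gives no formulas. In a real system the feature maps would be activations from a convolutional network; here they are tiny hand-written lists, and `style_weight` is a hypothetical knob for illustration, not the paper's "unrealness" measure.

```python
from typing import List

# A feature map: one row per feature channel, one column per spatial position.
Matrix = List[List[float]]


def gram_matrix(features: Matrix) -> Matrix:
    """Correlations between feature channels, averaged over spatial positions."""
    n_positions = len(features[0])
    return [
        [sum(a * b for a, b in zip(row_i, row_j)) / n_positions for row_j in features]
        for row_i in features
    ]


def style_loss(generated: Matrix, style: Matrix) -> float:
    """Mean squared difference between the Gram matrices of two feature maps."""
    g_gen, g_sty = gram_matrix(generated), gram_matrix(style)
    n = len(g_gen)
    return sum(
        (g_gen[i][j] - g_sty[i][j]) ** 2 for i in range(n) for j in range(n)
    ) / (n * n)


def content_loss(generated: Matrix, content: Matrix) -> float:
    """Mean squared difference between the feature maps themselves."""
    diffs = [
        (g - c) ** 2
        for row_g, row_c in zip(generated, content)
        for g, c in zip(row_g, row_c)
    ]
    return sum(diffs) / len(diffs)


def total_loss(generated: Matrix, content: Matrix, style: Matrix,
               style_weight: float) -> float:
    """Objective to minimize: stay close to the content, match the style statistics."""
    return content_loss(generated, content) + style_weight * style_loss(generated, style)


if __name__ == "__main__":
    style = [[1.0, 2.0], [3.0, 4.0]]
    shuffled = [[2.0, 1.0], [4.0, 3.0]]  # same values, spatial positions swapped
    print(gram_matrix(style))
    print(style_loss(shuffled, style), content_loss(shuffled, style))
```

Because the Gram matrix averages over positions, swapping the columns of the toy feature map leaves the style loss at zero while the content loss changes. That is the sense in which these statistics capture texture and brushwork rather than layout.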

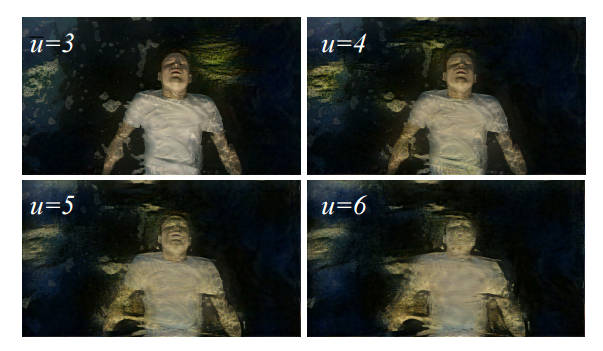

The problem to be solved is how to give the director creative control. Adjusting the technical parameters of the network directly doesn't make it easy to achieve a desired result. The key contribution of this work is a measure of "unrealness", u, which allows the strength of the style transfer to be controlled.

The content of the paper doesn't rate a "breakthrough" headline and, to be honest, most of the attention it has received has more to do with the inclusion of Kristen Stewart (wow, an actress authors an AI paper), but it is an indication of how fast advanced AI is making inroads into what we usually think of as the creative arts.

For a long while, installation art and other more esoteric "happenings" have needed their creators to know about languages such as Processing and hardware such as the Arduino or the Raspberry Pi. Now you can add neural networks to the list. Ever since Google's Inception network started to dream up surrealistic images, AI has been a creative force in its own right.

More Information

Bringing Impressionism to Life with Neural Style Transfer in Come Swim

Related Articles

A Neural Net Creates Movies In The Style Of Any Artist

Automatic Colorizing Photos With A Neural Net

Removing Reflections And Obstructions From Photos

See Invisible Motion, Hear Silent Sounds Cool? Creepy?

Computational Camouflage Hides Things In Plain Sight

Google Has Software To Make Cameras Worse


Last Updated ( Sunday, 22 January 2017 )