NVIDIA CUDA Dive Using Python

Written by Nikos Vaggalis

Thursday, 15 May 2025

NVIDIA has added native Python support to CUDA, making it more accessible to developers at large.

CUDA is, of course, NVIDIA's toolkit and programming model, which provides a development environment for speeding up computing applications by harnessing the power of GPUs. It's not easy to conquer, since it requires code to be written in C++, and as C++ is neither user-friendly nor easy to master, these properties rub off on the toolkit itself.

Back in 2021, we looked at an alternative way of accessing CUDA using the most user-friendly language there is, Python. CuPy, the open-source array library for GPU-accelerated computing with Python, was another option.

But the time has come for NVIDIA to realize that having Python as a first-class citizen is very beneficial for the toolkit's adoption by both developers and other communities, such as scientists. Adoption is one thing; the other is that with the rise of AI, GPU programming is in demand and NVIDIA wants everybody working on its chips.

Hence the emergence of CUDA Python, a Pythonic way of accessing NVIDIA's CUDA platform from Python. While the project has existed for a couple of years, it is with version 12.9 that it becomes really usable. Native support means brand new APIs and components:

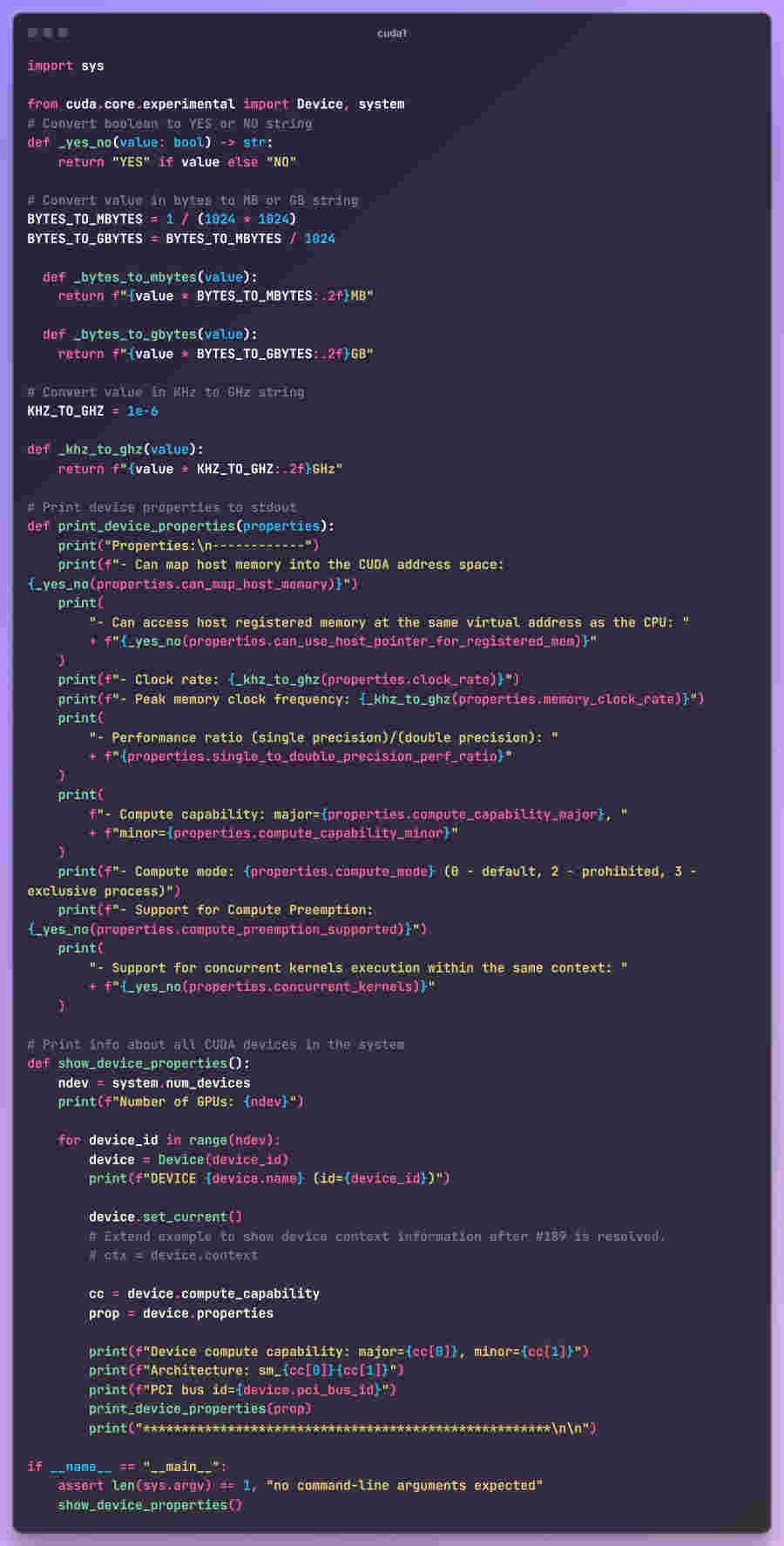

A simple example of the new core API in action, enumerating the device's properties in Python code, follows:
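A minimal sketch of such an enumeration, assuming the `cuda-core` package is installed and exposes `Device` and `system` under `cuda.core.experimental` with the attributes used here. Since the API is experimental, these names may change between releases. The helper `enumerate_devices` is hypothetical and not part of the library; it returns an empty list rather than failing when no CUDA stack is present.

```python
def enumerate_devices():
    """Return a list of dicts describing each visible CUDA device.

    Returns an empty list when cuda.core, the CUDA driver, or a GPU
    is not available on this machine.
    """
    try:
        # Assumption: cuda.core still lives in the experimental namespace.
        from cuda.core.experimental import Device, system
    except Exception:
        # Package missing, or its native dependencies failed to load.
        return []

    devices = []
    try:
        for ordinal in range(system.num_devices):
            dev = Device(ordinal)
            cc = dev.compute_capability  # assumed to expose .major and .minor
            devices.append(
                {
                    "id": dev.device_id,
                    "name": dev.name,
                    "compute_capability": (cc.major, cc.minor),
                }
            )
    except Exception:
        # Driver not installed or no usable GPU: treat as "no devices".
        return []
    return devices


if __name__ == "__main__":
    found = enumerate_devices()
    if not found:
        print("No CUDA devices found")
    for info in found:
        major, minor = info["compute_capability"]
        print(f"Device {info['id']}: {info['name']} (compute capability {major}.{minor})")
```

Returning plain dictionaries keeps the sketch independent of the library's own property objects, so the calling code does not need to change if those objects are renamed when the API leaves the experimental namespace.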

As you can see, the API is experimental, but upon stabilization it will be moved out of the experimental namespace.

cuda.core supports Python 3.9 to 3.13 on Linux (x86-64, arm64) and Windows (x86-64). Of course, to run CUDA Python you'll need the CUDA Toolkit installed on a system with a CUDA-capable GPU. If you don't have one, you can access one from cloud service providers such as Amazon AWS and Microsoft Azure.

With that said, just use Python for everything, even on the GPU.

Related Articles

Understanding GPU Architecture With Cornell

Program Deep Learning on the GPU with Triton
