Geoffrey Hinton's New Way To Train Neural Networks

Written by Mike James

Wednesday, 11 January 2023

All of the amazing things that have been achieved with AI over the past few years are down to the neural network and the way that it is trained using backpropagation. Now long-time AI researcher Geoffrey Hinton has a new way of doing the job, and it is interesting.

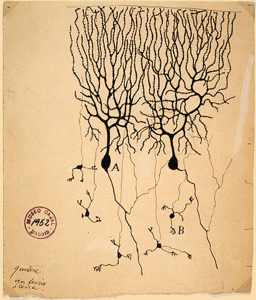

Neural networks have some claim to be based on a biological model, but not so the way they are trained. An artificial neuron has inputs and weights that correspond to dendrites and an output that corresponds to an axon. However, the actual behavior of the artificial neuron is nothing like that of a real neuron. A real neuron fires off pulses at a rate that depends on how much it is stimulated. An artificial neuron just sends out a steady level corresponding to how much it is stimulated. There are even studies which suggest that real neurons are much more complicated than we ever give them credit for. Even so, we still hold that artificial neural networks are biologically inspired, if not an accurate representation.

When it comes to training a neural network, things are very different. The principle of backpropagation is based in calculus. We apply an input and observe the output. We then calculate how far the output is from the desired output, i.e. we work out an error signal. Using this and a knowledge of the network's derivative, we work back through it, adjusting the connections to move the output in the direction of the correct output. All of this is very un-biological, even though people have tried to find ways that it might be implemented in a real brain.

This is a very general algorithm. Backprop is just a special case of differentiable programming. If you have a system and you know its derivative, then the derivative gives you the rule for how to change the parameters of the system to improve it. Going round a loop, moving the system a little each time in the direction the derivative suggests, slowly makes it as good as it can be. Of course, the problem is finding the magic derivative and applying it. This is not a likely candidate for biology.

Hinton's new idea is clever, and it avoids explicit derivatives and any need to backpropagate errors. All that happens is that each layer in the network has an evaluation function, which the paper calls its "goodness". The one suggested is simply the layer's total excitation, i.e. the sum of the squares of the activities of its neurons.

Instead of a forward pass followed by a backward pass, the new Forward-Forward (FF) algorithm works by implementing a forward pass where positive examples are presented to the network and each layer adjusts its weights to increase its evaluation function. Next, a second forward pass is made where the input is a negative example, and in this case the network attempts to reduce the evaluation function at each layer in turn. You keep doing this, forward positive followed by forward negative, and the network learns to give the output you want depending on the input.

There are a lot of claimed advantages for the method.
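To make the forward-positive, forward-negative loop concrete, here is a minimal sketch of a single layer trained this way, using only the Python standard library. It is not Hinton's code. It assumes, following the paper, that the evaluation function is taken per layer as the sum of squared activities and is compared with a threshold. The names `init_weights`, `relu_layer`, `goodness`, `ff_update`, `theta` and `lr` are all hypothetical, and so are the toy input patterns. The weight change is worked out from the layer's own goodness only, which is one way (assumed here) to realise "adjust the weights to increase the evaluation function". No error signal is passed back from any other layer.

```python
import math
import random

def init_weights(n_hidden, n_inputs):
    """Small positive weights so every unit starts out active (a toy choice)."""
    return [[random.uniform(0.1, 0.5) for _ in range(n_inputs)]
            for _ in range(n_hidden)]

def relu_layer(weights, x):
    """Activities of one layer: h_j = max(0, sum_i w_ji * x_i)."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]

def goodness(h):
    """Evaluation function of a layer: sum of its squared activities."""
    return sum(v * v for v in h)

def sigmoid(z):
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def ff_update(weights, x, positive, theta=2.0, lr=0.03):
    """One local update of a layer on a single example (modifies weights).

    p is the layer's own estimate that x is a positive example.
    Positive examples push the goodness up, negative ones push it down.
    Returns the goodness measured before the update.
    """
    h = relu_layer(weights, x)
    g = goodness(h)
    p = sigmoid(g - theta)
    scale = (1.0 - p) if positive else -p
    for row, hj in zip(weights, h):
        if hj > 0.0:  # inactive ReLU units contribute nothing to the goodness
            for i, xi in enumerate(x):
                # d(goodness)/d(w_ji) = 2 * h_j * x_i for an active unit
                row[i] += lr * scale * 2.0 * hj * xi
    return g

if __name__ == "__main__":
    random.seed(0)
    w = init_weights(n_hidden=4, n_inputs=4)
    pos = [1.0, 1.0, 0.0, 0.0]  # toy "positive" pattern (assumption)
    neg = [0.0, 0.0, 1.0, 1.0]  # toy "negative" pattern (assumption)
    for _ in range(200):
        ff_update(w, pos, positive=True)   # forward pass on positive data
        ff_update(w, neg, positive=False)  # forward pass on negative data
    print("goodness on positive:", goodness(relu_layer(w, pos)))
    print("goodness on negative:", goodness(relu_layer(w, neg)))
```

After training, the layer's goodness should sit above `theta` for the positive pattern and below it for the negative one. In a multi-layer network each layer would run the same kind of update on the output of the layer before it, which is why nothing has to flow backwards.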
FF seems to learn just as fast as backprop, but it doesn't need to cycle round the same examples. It can be fed realtime data of positive and negative examples. It also doesn't have to know the details of the network, i.e. no derivative needs to be worked out.

Hinton's paper, The Forward-Forward Algorithm: Some Preliminary Investigations, gives some examples of training using an FF algorithm, and it seems to work well given the limited size of the data and training.

You might be wondering where the negative examples come from. In training the network to recognize handwritten digits, the negative examples are constructed by putting together masked versions of digits - the negatives are clearly not nice neat digits like the positive examples.

It is clear that this is yet another example of Hebbian learning - one of the earliest learning principles, suggested by the psychologist Donald Hebb in 1949 and often summarized as "cells that fire together wire together". In this case we have a slightly different form, which could be expressed as "get active on positive examples and suppress activity on negative examples".

There is also the connection to adversarial examples. You can think of these as negative examples which are very close to positive examples - so close that a backprop-trained network will classify the negative as a positive. Presumably an FF-trained network would be more robust against adversarial negatives.

You can tell that there is a great deal of work to be done to find out about FF and to improve it. The paper lists eight open questions and it doesn't take much to think of others.

Is this an important breakthrough? We'll have to wait and see.

More Information

https://www.cs.toronto.edu/~hinton/


Last Updated ( Monday, 14 October 2024 )