Kolosal AI-Run LLMs Locally On Your Workstation Or Edge Devices

Written by Nikos Vaggalis

Thursday, 17 April 2025

Kolosal is a new player in the LLM ecosystem, billed as a lightweight alternative to LM Studio that requires fewer system resources while offering similar functionality. Packaged as a compiled binary under 20MB in size, it lets you chat with LLMs locally on your device, thus giving you complete privacy and control.

Supported devices encompass both workstations and edge devices, such as Raspberry Pi, smartphones, etc. The latter is pretty important since those kinds of devices are low on resources, and the ability to run LLMs that process data directly on the device where it is generated is a godsend for businesses, since they can make decisions more quickly based on the analysis of that data. That is, there's no longer any need to upload the data to the cloud for centralized processing.

Kolosal, true to this promise, sports the following key features:

Universal Hardware Support

Lightweight & Portable

Wide Model Compatibility

Easy Dataset Generation & Training

On-Premise & On-Edge Focus

And what do you have to pay for all that? Zero, since Kolosal is free, open source and can be compiled on any platform that has C++17 and CMake, along with a few other standard dependencies.

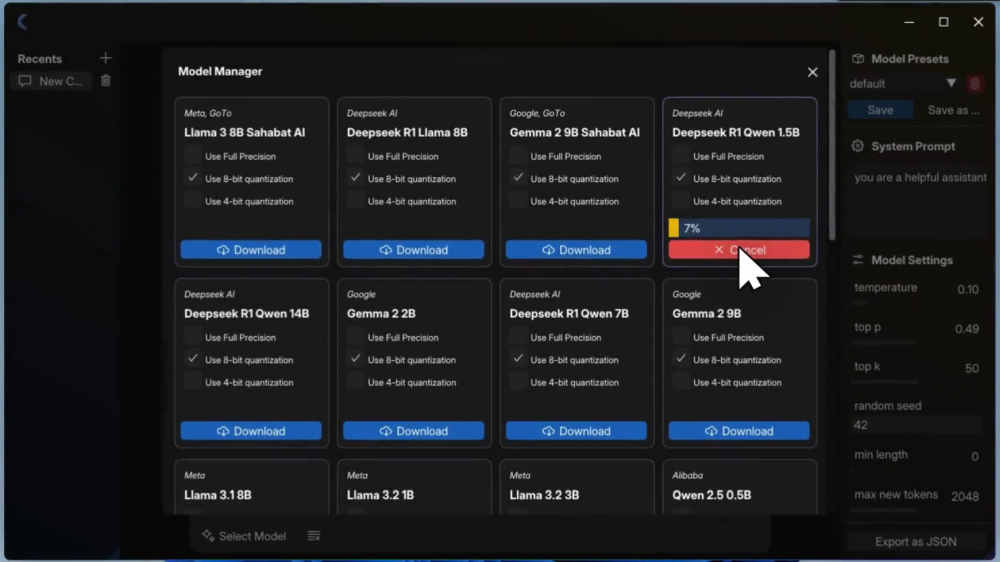

To install, you first have to download the repo and follow the instructions to build it. If you're on Windows, however, you're in luck, since Kolosal comes already packaged as a single binary. After you download and install it, you can choose which LLM foundation models to download and use.

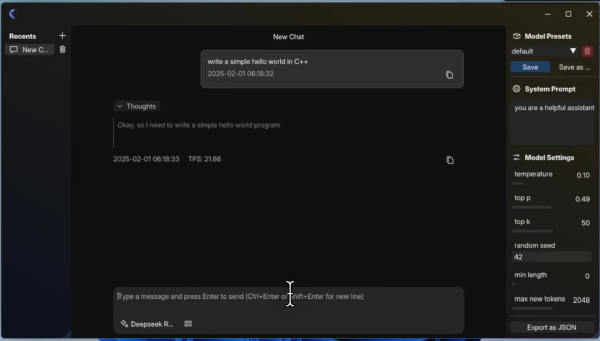

Lastly, at a date yet to be announced, there's going to be the ability to fine-tune local LLMs with your own data for your own specific needs or domain knowledge, including data synthesis capabilities and support for multiple LoRA adapters. Right now, however, you can already fine-tune your local LLM experience by adjusting key model settings like temperature and context length.

In conclusion, Kolosal appears to be a game changer, laying the foundation for the next era of AI by bringing intelligence directly to where the data resides.
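As a side note on the temperature setting mentioned above, here is a minimal sketch of what that knob generally does in an LLM sampler. It shows the standard softmax-with-temperature formula, not Kolosal's own code; the function name `softmax_with_temperature` and the example scores are hypothetical, chosen only for illustration.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw token scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw scores for three candidate next tokens
logits = [2.0, 1.0, 0.1]

for temperature in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, temperature)
    print(temperature, [round(p, 3) for p in probs])
```

A lower temperature concentrates probability on the highest-scoring token, which makes the output more predictable, while a higher temperature flattens the distribution and makes the output more varied.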


Last Updated (Thursday, 17 April 2025)