
Having seen the potential of AI, the European Commission has released a set of ongoing guidelines on how to build AIs that can be trusted by society. We present an annotated analysis.

It is refreshing to see the EU Commission keeping up with technological advancements and setting up pilot groups to understand how they can be used for its own prosperity. Examples are the EU Blockchain Observatory, which we looked into in the article "EU Blockchain Observatory and Forum Blockchain AMA", and the EU bug bounty initiative, which we looked into in "EU Bug Bounty - Software Security as a Civil Right".

The Commission's instrument in this case is the High-Level Expert Group on AI (AI HLEG), an independent expert group set up in June 2018. The aim of the HLEG is to draft two deliverables: AI Ethics Guidelines and Policy and Investment Recommendations. It's the former that we'll be focusing on here.

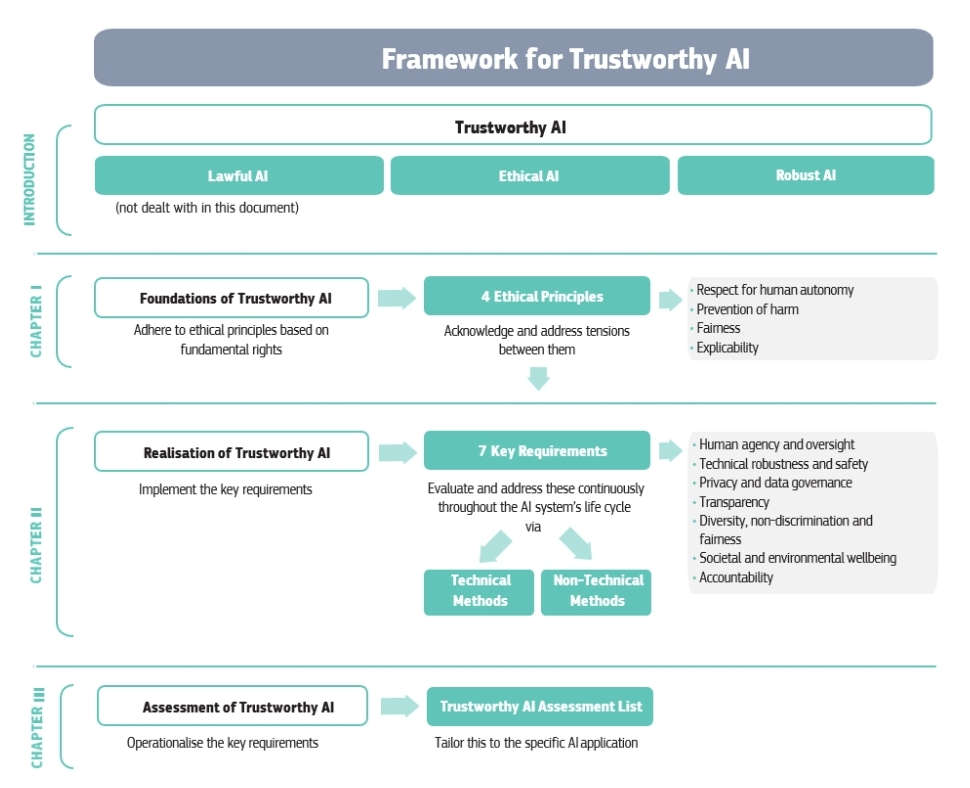

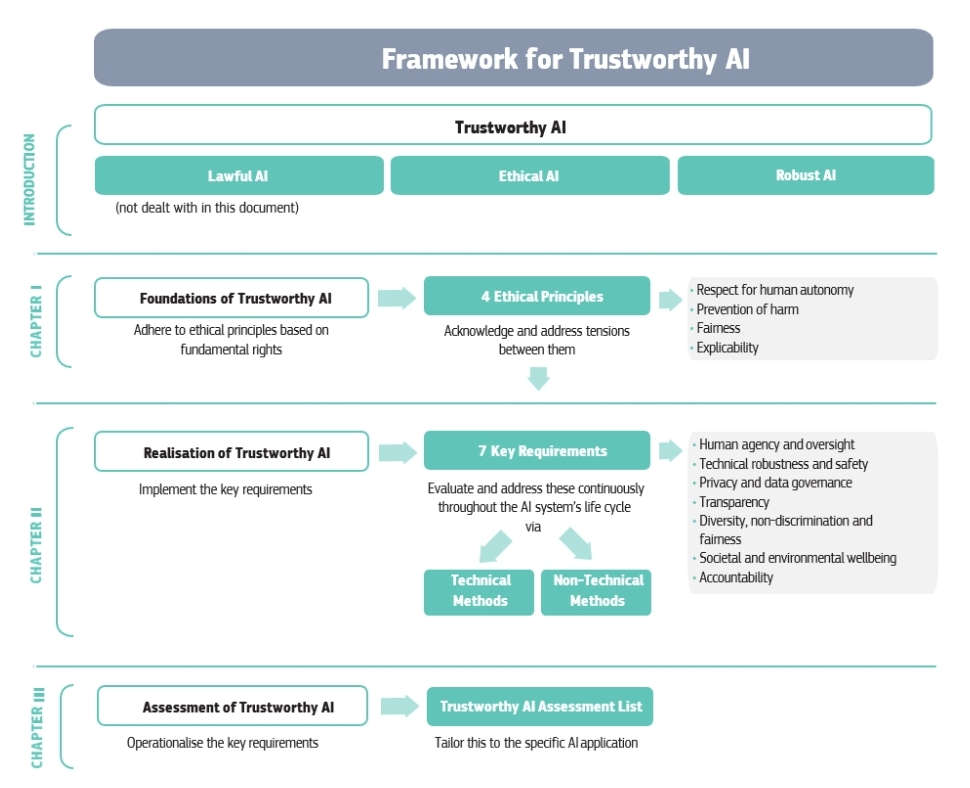

The aim of these guidelines is to promote so-called "Trustworthy AI", comprising the following three components:

- It should be lawful, complying with all applicable laws and regulations

- It should be ethical, ensuring adherence to ethical principles and values

- It should be robust, both from a technical and social perspective since, even with good intentions, AI systems can cause unintentional harm

The first component is not part of the group's mandate, but the second and third are.

Taken individually, these rules look difficult to follow but nevertheless achievable. What complicates matters considerably is:

Each of these three components is necessary but not sufficient in itself to achieve Trustworthy AI. Ideally, all three work in harmony and overlap in their operation. In practice, however, there may be tensions between these elements (e.g. at times the scope and content of existing law might be out of step with ethical norms).

Although these rules mostly concern "typical" AIs, the ones in our phones, the diagnostic ones in the doctor's office, the resume-sorting ones or the ones inside autonomous vehicles, they can also be applied to super-advanced AIs such as those in cybernetics and autonomous robots. In fact, the interaction of the three rules, together with the tensions between them, may be too complex for a machine to handle, instead requiring the human-machine intersection of cybernetics, something already explored by the sci-fi genre.

If that sounds too futuristic, keep in mind that brain implants and cyborgs are already here, though not in the sense of the movies. Their most outspoken representative is UK scientist "Captain Cyborg", aka Dr Kevin Warwick, a pioneer who leads research projects:

which investigate the use of machine learning and artificial intelligence techniques to suitably stimulate and translate patterns of electrical activity from living cultured neural networks to use the networks for the control of mobile robots. Hence a biological brain actually provided the behavior process for each robot.

He underwent surgery to implant a silicon chip transponder in his forearm with which he could "operate doors, lights, heaters and other computers without lifting a finger". That was in 1998 with Project Cyborg 1.0. With Project Cyborg 2.0, which began in 2002, he looked at how a new implant could send signals back and forth between Warwick’s nervous system and a computer:

Professor Warwick was able to control an electric wheelchair and an intelligent artificial hand, developed by Dr Peter Kyberd, using the neural interface. In addition to being able to measure the nerve signals transmitted along the nerve fibres in Professor Warwick’s left arm, the implant was also able to create artificial sensation by stimulating via individual electrodes within the array. This bi-directional functionality was demonstrated with the aid of Kevin’s wife Irena and a second, less complex implant connecting to her nervous system.

These human enhancement experiments already raise serious bioethical issues; imagine adding AI to the mix as well.

In the past we've looked at cases which demonstrate the power that AI technology has already achieved. One is "Atlas Robot - The Next Generation", which showcases the capabilities of the new generation of Atlas robots, and another is "Achieving Autonomous AI Is Closer Than We Think", where we looked into the USAF project of AI-powered software running on a Raspberry Pi and capable of beating an experienced pilot in simulated air combat.

There is another issue that AI ethics has to cope with: autonomous robot weaponry. Before you rush to declare it unethical by default, remember that even in war there are still rules and ethics that should be adhered to, such as the Geneva Conventions.

HLEG's consortium is not the first of its kind. The non-profit "Partnership on AI", formed by Amazon, DeepMind/Google, Facebook, IBM, and Microsoft, serves the same cause and beat HLEG to it by almost three years. But while the "Partnership" is a private sector initiative, HLEG is endorsed by the public sector, which goes to show that although the private sector was quicker off the mark, there is still forward thinking within the bureaucracy. More importantly, HLEG tries to fill the exploitable void left by the struggle of law and government to keep up with the latest technological advancements.

So what does HLEG try to address? In its own words:

We believe that AI has the potential to significantly transform society. AI is not an end in itself, but rather a promising means to increase human flourishing, thereby enhancing individual and societal well-being and the common good, as well as bringing progress and innovation.

In particular, AI systems can help to facilitate the achievement of the UN’s Sustainable Development Goals, such as promoting gender balance and tackling climate change, rationalizing our use of natural resources, enhancing our health, mobility and production processes, and supporting how we monitor progress against sustainability and social cohesion indicators.

To do this, AI systems need to be human-centric, resting on a commitment to their use in the service of humanity and the common good, with the goal of improving human welfare and freedom.

While offering great opportunities, AI systems also give rise to certain risks that must be handled appropriately and proportionately. We now have an important window of opportunity to shape their development. We want to ensure that we can trust the sociotechnical environments in which they are embedded.

In other words, as with every technology, AI can be turned to good or evil, and HLEG is trying to funnel this unstoppable river of evolution in the right, ethical direction. The notion is that human beings and communities will have confidence in AI only when a clear and comprehensive framework for achieving its trustworthiness is in place.

The discussion centers on the socio-economic issues that AI raises, which these guidelines try to address. For example:

- Who is responsible when a self-driving car crashes or an intelligent medical device fails?

- How can AI applications be prevented from promulgating racial discrimination or financial cheating?

- Who should reap the gains of efficiencies enabled by AI technologies and what protections should be afforded to people whose skills are rendered obsolete?

Because ultimately, as people integrate AI more broadly and deeply into industrial processes and consumer products, best practices need to be spread and regulatory regimes adapted.

From HLEG's perspective:

"the guidelines aim to provide guidance for stakeholders designing, developing, deploying, implementing, using or being affected by AI who voluntarily opt to use them as a method to operationalise their commitment".

The key word here is voluntarily; HLEG can't force anyone to live by those rules. What it could very well do in the near future, especially given that it acts as an instrument of the EU Commission and therefore of the public sector, is recommend that governments, as part of their procurement procedures, award contracts only to private-sector suppliers that abide by the guidelines. Compliance would then act as a certificate of ethical quality assurance.

The Guidelines themselves are split into three chapters:

Chapter I – Foundations of Trustworthy AI identifies and describes the ethical principles that must be adhered to in order to ensure ethical and robust AI.

Chapter II – Realising Trustworthy AI translates these ethical principles into seven key requirements that AI systems should implement and meet throughout their entire life cycle.

Chapter III – Assessing Trustworthy AI sets out a concrete and non-exhaustive Trustworthy AI assessment list to operationalise the requirements of Chapter II, offering AI practitioners practical guidance.

We'll consider each chapter in turn.
