The Memory Principle - Computer Memory and Pigeonholes

Written by Mike James

Thursday, 07 January 2021

We discover why computer memory can be likened to pigeonholes, and even include instructions for you to build your own memory device.

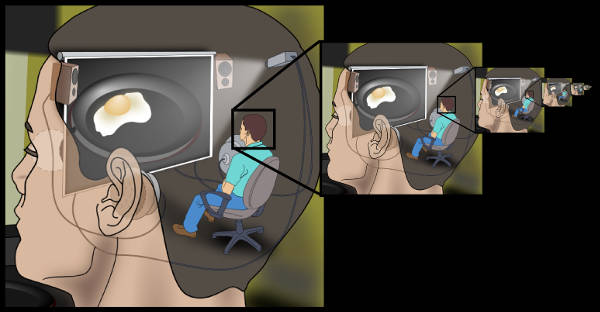

The idea of computer memory is basic to the whole idea of the computer. It shows how a computational mechanism actually does its computing more clearly than any other aspect of computation.

A memory stores data and a memory retrieves data - but so does my paper notebook, and that isn’t a computer. Exactly what is going on is subtle, but understanding it, and not all programmers do, is the key to really understanding the nature of computation. In this slightly whimsical account of the memory principle all will be revealed...

It is difficult to recapture the amazing moment when you finally understand how a computer works. I don’t mean understanding a particular computer, with its specific peculiarities, but the general understanding of how this wonderful trick works and what a simple trick it is.

Now the worrying part is that some readers will have passed this moment of truth many years ago and will have forgotten the pleasure it gave them. Some readers will think they have grasped it when really they haven’t. And finally, of course, there are the readers who know they don’t know what I am talking about at all!

This all makes for a big communication problem, made worse by the fact that, to give you the underlying theory, I have no choice but to talk about particular realisations of it. Let me give you a short example of this mismatch of world views.

The problem is very similar to thinking about the way a human might work. You can think of a human as a sort of machine in which a tiny human lives - presumably up in the head. The tiny human looks out through the eyes and probably pulls levers to make the real human do things. This is the homunculus theory of how humans work and it is clearly nonsense, because you simply end up with an infinite regress of ever smaller humans pulling ever smaller levers. However humans work, it isn’t by having another smaller human living inside.

"Infinite regress of homunculus" by Jennifer Garcia

The same sort of explanation tends to be used when it comes to computers, only in this case the error is far less obvious. People often describe how a computer works by imagining a small human inside the circuits looking to see what has to be done and doing it. Of course this isn’t how computers work and it isn’t even close. In fact, this sort of argument can completely hide the wonderful way that computers actually work and mystify the beginner. Let me give you an example.

A long, long time ago, in a country where they did things differently, in the days when computers had valves, kept people warm and lived in huge buildings (one computer per building), I went to a “Young Person’s Lecture”. It was all about the new (then) art and science of the computer. I knew as little about this subject as it is possible to know, despite having written my first six-line Fortran program only a few weeks earlier.

The lecture had lots of pleasing “lantern slides” (a sort of early LCD projection unit) and the man pointed to a blackboard (like a whiteboard but black) with a stick (like a laser pointer but made of wood). I found the whole thing really interesting, but I sat up most in the section where he promised to tell me how computer memory worked. I knew a tiny amount about memory (it was where my six-line Fortran program lived before the computer obeyed it), so this was my chance to find out how it worked.

The lecturer showed a slide of a large wooden construction, something like a bookcase but divided up into compartments, which he called “pigeonholes” and, yes, you could see that a family of pigeons might want to take shelter there. He then went on to describe how each pigeonhole had an “address”, and how this enabled someone to store a pigeon at a particular “location” and then “retrieve” it. “This is how a computer’s memory works” is the final sentence I remember.
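For readers who think in code, the lecturer's picture can be written down as a rough Python sketch. It is only an illustration of the analogy as described in the lecture, not a model of real memory hardware, and the names `PigeonholeMemory`, `store` and `retrieve` are invented here for the purpose.

```python
class PigeonholeMemory:
    """A toy model of the lecturer's pigeonholes: a row of numbered compartments.

    All names here are hypothetical and chosen only for illustration.
    """

    def __init__(self, size):
        # Every compartment starts out empty (represented by None).
        self.holes = [None] * size

    def _check(self, address):
        # An address is only meaningful if a compartment with that number exists.
        if not 0 <= address < len(self.holes):
            raise IndexError(f"no pigeonhole with address {address}")

    def store(self, address, item):
        # Put an item into the compartment at the given address,
        # replacing whatever was there before.
        self._check(address)
        self.holes[address] = item

    def retrieve(self, address):
        # Hand back whatever is currently in the compartment at the address.
        self._check(address)
        return self.holes[address]


memory = PigeonholeMemory(8)
memory.store(3, "pigeon")
print(memory.retrieve(3))  # prints: pigeon
```

Notice that the sketch relies on Python's list indexing to find the right compartment, so, like the slide, it says nothing about how an address actually selects a location.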

Last Updated (Thursday, 07 January 2021)