| Get To Grips With Transformers And LLMs |

| Written by Nikos Vaggalis | |||

| Tuesday, 20 January 2026 | |||


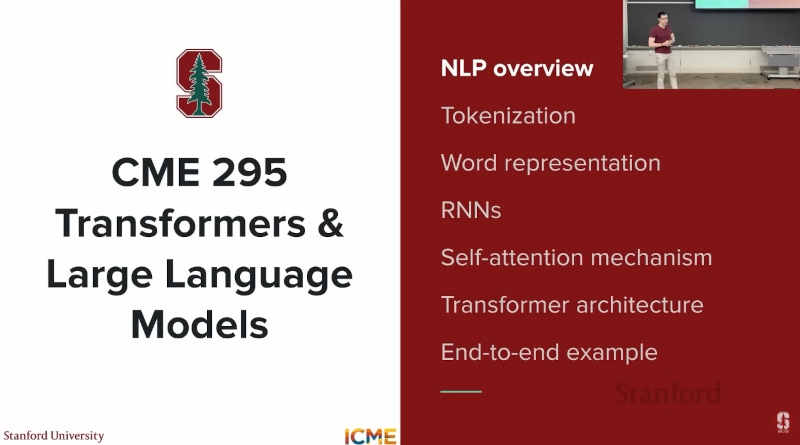

This isn't just a course, it's the complete curriculum of Stanford’s CME295 Transformers and Large Language Models from Autumn 2025. It's a course built in the open that holds nothing back. As such, the videos are the actual recordings of the on-campus lectures, and the lecture slides are available too. There's no homework, but there are two exams, a midterm and a final, whose questions and solutions are also available. Material-wise, the course teaches the fundamentals of AI that are necessary for building a solid background. That background will in turn let you navigate the AI landscape with confidence, understand the terminology and use that knowledge to build AI-powered products. Spanning 9 lectures, it covers everything that needs to be known.

In more detail, the first three lectures explore topics ranging from tokenization and embeddings, Word2vec, and BERT and its derivatives to prompting, in-context learning and chain of thought.

Lectures 4 and 5 cover LLM training and fine-tuning: pretraining, quantization, RLHF and DPO. The next three lectures cover LLM reasoning, agentic LLMs and LLM evaluation, with topics including reasoning models, retrieval-augmented generation, function calling and LLM-as-a-judge. The course wraps up by looking at the current trends and what the future holds.

So, in the end, when should you take this class? You should take it if you already have an overall understanding of linear algebra concepts, basic machine learning and Python, and you want to understand how the Transformer architecture works as well as keep informed about ongoing LLM trends. That said, even if you're not interested in the inner workings of an LLM but are instead a practitioner, lecture 7 on agentic LLMs and lecture 8 on LLM evaluation, which focus on those hot and trendy subjects, can be watched in isolation from the rest of the material and will answer many practical questions.


| Last Updated ( Tuesday, 20 January 2026 ) |