Computational Photography Moves Beyond The Camera

Written by David Conrad

Sunday, 01 October 2017

Computational photography is the second big photographic revolution, the first being the move from film to digital. We now have algorithms that can compose a photo after it has been taken and create effects that would otherwise require light to move in curved paths through lenses. We have moved far beyond the simple camera.

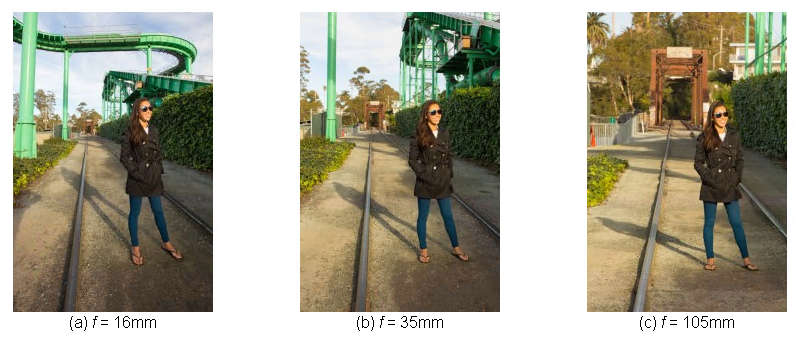

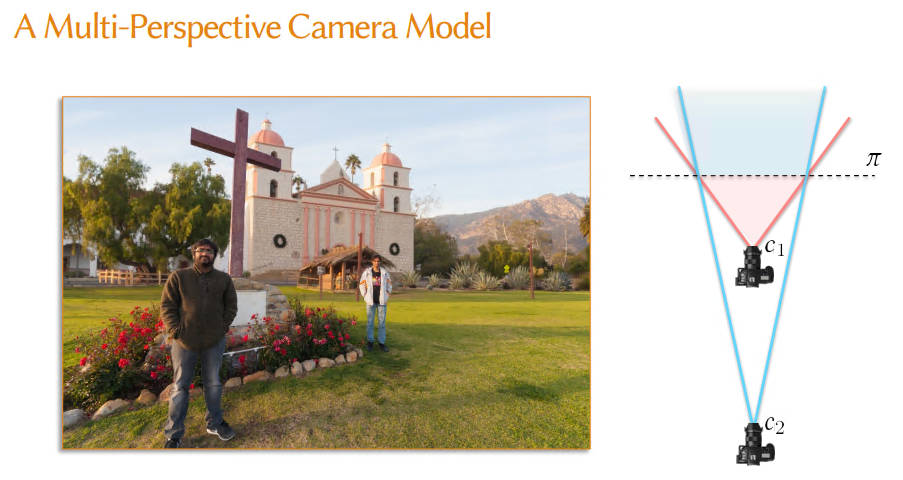

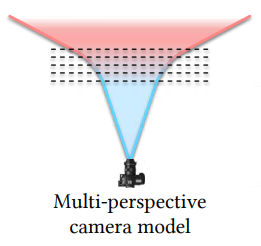

One of the marks of a professional photographer is an understanding of depth of field and perspective. Changing the focal length of the lens can alter the entire feel of a photo. To the beginner it looks as if all that happens when you move from a wide-angle, short focal length lens to a narrow-angle, telephoto lens is that you get closer to your subject and, as a side effect, the field of view changes. However, there is a much more subtle, and often more important, effect: the perspective changes, and with it the composition of the photo. With a wide-angle lens, things in the background are small compared to the foreground. With a telephoto lens the difference is much smaller, and foreground and background appear closer together.

Until now this was a choice you had to make at the time the photo was taken: you had to pick either a short or a long focal length lens. Now researchers at UC Santa Barbara and NVIDIA have invented computational zoom. This allows the photographer to select the zoom after the photo has been taken, and even to blend different zoom factors to create photos that couldn't be taken with a simple digital camera.

The trick is a familiar one: take a stack of images, moving the camera closer to the scene between shots without changing the focal length of the lens. The stack is used to estimate depth and hence to construct a 3D model of the scene, which can then be used to synthesize particular views, including some that couldn't have been taken with a traditional setup.
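The perspective effect described above can be made concrete with an idealized pinhole camera, in which an object of height H at distance Z projects to an image of height f·H/Z. The sketch below is only an illustration, not anything taken from the researchers' work: it assumes the photographer stands at whatever distance keeps the foreground subject the same size in the frame, and the function names and default values (subject height, a 10 m gap to the background, a 12 mm image height) are made up for the example.

```python
def projected_size(focal_length_mm, object_height_m, distance_m):
    """Image-plane height (mm) of an object under an ideal pinhole model."""
    return focal_length_mm * object_height_m / distance_m


def distance_for_size(focal_length_mm, object_height_m, image_height_mm):
    """Camera distance (m) at which the object fills image_height_mm."""
    return focal_length_mm * object_height_m / image_height_mm


def background_to_foreground_ratio(
    focal_length_mm,
    subject_height_m=1.8,      # hypothetical foreground subject
    background_height_m=1.8,   # hypothetical background object, same real size
    gap_m=10.0,                # assumed distance between the two
    subject_image_mm=12.0,     # assumed framing: subject always 12 mm tall
):
    """Apparent size of the background object relative to the subject."""
    # Stand wherever keeps the subject the same size for this lens.
    d = distance_for_size(focal_length_mm, subject_height_m, subject_image_mm)
    fg = projected_size(focal_length_mm, subject_height_m, d)
    bg = projected_size(focal_length_mm, background_height_m, d + gap_m)
    return bg / fg


if __name__ == "__main__":
    for f in (24, 50, 100, 200):
        ratio = background_to_foreground_ratio(f)
        print(f"{f:>3} mm lens: background appears {ratio:.2f}x the subject")
```

Under these assumptions the ratio rises toward 1 as the focal length grows, which is the "foreground and background are closer together" look of a telephoto shot. Note that the change comes from the camera distance that goes with each lens, which is why a stack shot from different distances contains the information needed to imitate different focal lengths.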

To see what can be achieved take a look at the following video and concentrate on the relationship between the background and foreground:

The big problem with the current technique is the need to take multiple photos at different distances. An alternative approach, using a single camera with multiple lenses of different focal lengths, could make capturing a stack as easy as "click" - which is the one aspect of film photography that will never be replaced.
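Why the stack has to be shot from different distances can be seen with the same idealized pinhole model: when the camera moves forward by a known amount with the focal length fixed, near objects grow more than far ones, and that difference in growth encodes depth. The following is a toy, one-object sketch under that assumption, not the depth-estimation method used by the researchers, which the article does not detail. The function name and the numbers are hypothetical.

```python
import math


def depth_from_scale_change(size_far_mm, size_near_mm, camera_step_m):
    """Estimate an object's distance (m) from the first camera position.

    size_far_mm   -- image height of the object in the first shot
    size_near_mm  -- image height after moving camera_step_m closer
    Both shots are assumed to use the same focal length (pinhole model),
    so size = f * H / Z before and f * H / (Z - step) after the move.
    Solving the two equations for Z gives Z = step * near / (near - far).
    """
    growth = size_near_mm - size_far_mm
    if growth <= 0:
        # No measurable growth: the object is effectively at infinity.
        return math.inf
    return camera_step_m * size_near_mm / growth


if __name__ == "__main__":
    f_mm, height_m, true_depth_m, step_m = 35.0, 2.0, 10.0, 2.0
    s1 = f_mm * height_m / true_depth_m             # first shot
    s2 = f_mm * height_m / (true_depth_m - step_m)  # after stepping closer
    print(depth_from_scale_change(s1, s2, step_m))
```

A real scene needs this kind of reasoning for every pixel, along with handling of occlusions and measurement noise, which is part of why a practical system is far more involved than this sketch.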

More Information

Computational Zoom: A Framework for Post-Capture Image Composition by Abhishek Badki, University of California, Santa Barbara and NVIDIA; Orazio Gallo, NVIDIA; Jan Kautz, NVIDIA; Pradeep Sen, University of California, Santa Barbara

Related Articles

Google Implements AI Landscape Photographer

Using Super Seeing To See Photosynthesis

Selfie Righteous - Corrects Selfie Perspective

Computational Photography Shows Hi-Res Mars


Last Updated ( Sunday, 01 October 2017 )