Shakes On A Plane - Easy 3D Imaging

Written by David Conrad

Sunday, 01 January 2023


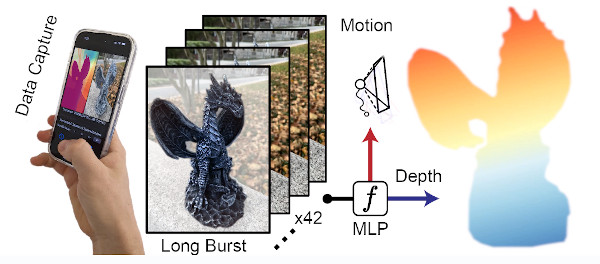

You've just got to love academic paper titles like "Shakes on a Plane", and in this case it's apt. Take one standard camera, shoot a burst of images, and the unsteadiness of the hand holding it lets you create a depth image.

One of the things I like about computational photography is the way that you can get impressive new results from the same old hardware. What matters is what you do with the data. The latest research from the Princeton Computational Imaging Lab fits right in with this idea and, as already mentioned, has a good sense of humour about its title. What is more, it turns one of the photographer's archenemies, camera shake, into something useful. You may have practiced holding your breath and timing the shutter release to the gap between heartbeats, but from now on a little camera shake could be desirable.

The idea is that if you take an automatic burst of images, the inevitable camera shake can be used to deduce 3D depth from the apparent parallax. You know about parallax: when you look out of the window of a moving vehicle, nearby things move across your view faster than things that are far away. The new work demonstrates that a two-second burst of 42 images contains enough information to recover high-quality scene depth.
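To put rough numbers on the parallax argument, here is a minimal Python sketch using the standard pinhole-camera relation: a sideways camera shift of b metres moves a point at depth Z by about f * b / Z pixels, where f is the focal length in pixels. It assumes pure sideways translation with no rotation, and the 3000-pixel focal length and 2 mm of shake are made-up illustrative values, not figures from the paper.

```python
def parallax_shift_px(focal_px: float, baseline_m: float, depth_m: float) -> float:
    """Approximate image shift, in pixels, of a point depth_m metres away when
    a pinhole camera translates sideways by baseline_m metres (no rotation)."""
    return focal_px * baseline_m / depth_m


if __name__ == "__main__":
    focal_px = 3000.0   # hypothetical focal length in pixels
    shake_m = 0.002     # hypothetical 2 mm sideways hand shake between frames
    for depth_m in (0.5, 2.0, 10.0):
        shift = parallax_shift_px(focal_px, shake_m, depth_m)
        print(f"object at {depth_m:4.1f} m moves {shift:5.2f} px")
```

With these made-up numbers a point 0.5 m away moves 12 pixels between the two camera positions while one 10 m away moves only 0.6 pixels, which is why even a small tremor carries usable depth information about the nearer parts of a scene.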

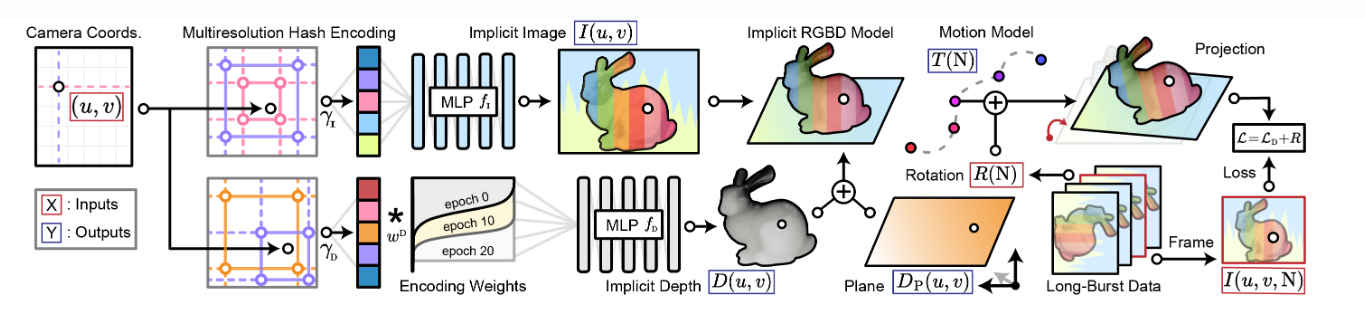

What is interesting about the approach is that the raw images are fed into a neural network that has been trained to infer depth from parallax. The big problem is that there is no labelled training data, i.e. you don't have lots of burst photos with known depth to train the network on. The solution is to train a network to create the image you would get without camera shake and then use the learned depth estimates to build an RGBD (colour plus depth) model. If this model is correct, it should predict what you see in each of the burst images once the motion, i.e. the camera shake, has been accounted for. Any mismatch can be fed back to correct the network so that it learns to do better. Notice that all of this is achieved without a position sensor of any kind.
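The consistency check can be illustrated with a toy one-dimensional example. This is not the paper's network or loss; it is a minimal sketch, assuming a single image row, a known sideways camera shift and the pinhole parallax relation shift = f * b / Z. Each reference pixel is moved by its predicted parallax, the other burst frame is read at that spot, and the squared mismatch is the error signal. The names `photometric_loss` and `texture` and all the numbers are hypothetical.

```python
import numpy as np


def photometric_loss(ref, depth, frame, focal_px, shift_m):
    """Mean squared mismatch between a reference scanline and another burst
    frame, after moving each reference pixel by its predicted parallax.

    Toy assumptions (hypothetical): 1D image row, pure sideways camera shift
    of shift_m metres, pinhole parallax = focal_px * shift_m / depth.
    """
    x = np.arange(ref.size, dtype=float)
    # Where each reference pixel should land in the other frame, given its depth.
    x_in_frame = x + focal_px * shift_m / depth
    # Read the observed frame at those sub-pixel positions (linear interpolation).
    predicted = np.interp(x_in_frame, x, frame)
    # Ignore pixels whose predicted position falls outside the other frame.
    valid = (x_in_frame >= 0) & (x_in_frame <= ref.size - 1)
    return float(np.mean((predicted[valid] - ref[valid]) ** 2))


def texture(u):
    """Hypothetical smooth scene texture along the row."""
    return np.sin(0.3 * u) + 0.5 * np.sin(0.07 * u)


if __name__ == "__main__":
    focal_px, shift_m, true_depth = 3000.0, 0.002, 2.0  # made-up values
    n = 200
    x = np.arange(n, dtype=float)
    ref = texture(x)
    # Synthetic second frame of a flat scene at true_depth: its content is
    # displaced by focal_px * shift_m / true_depth pixels.
    frame = texture(x - focal_px * shift_m / true_depth)
    for guess in (0.5, 1.0, 2.0, 4.0):
        loss = photometric_loss(ref, np.full(n, guess), frame, focal_px, shift_m)
        print(f"depth guess {guess} m -> loss {loss:.4f}")
```

Only the correct depth guess of 2 m lines the two frames up, so the loss is essentially zero there and clearly larger for the wrong guesses. In a learned system a number like this is what would drive the updates, with no ground-truth depth required.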

This is an application of the idea that you can compare a prediction with what you actually see and use any discrepancies to correct the predictor. Over time the process converges and you have a network that can produce a reliable depth model.
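The predict, compare and correct loop can be shown in miniature with plain gradient descent. This sketch fits a single inverse depth w = 1/Z so that the predicted parallax f * b_k * w matches the shift observed in each burst frame k. Unlike the method described here, it assumes the per-frame camera shifts are already known, and the shifts and noisy measurements are invented for illustration; `fit_inverse_depth` is a hypothetical helper, not code from the paper.

```python
def fit_inverse_depth(shifts_m, observed_px, focal_px, lr=0.01, steps=200):
    """Toy predict-compare-correct loop (hypothetical helper).

    Fits one inverse depth w = 1/Z by gradient descent so that the predicted
    parallax focal_px * b * w matches the observed shift in every frame.
    Assumes the per-frame camera shifts b are known; lr suits these toy numbers.
    """
    w = 0.0  # start with no parallax, i.e. the point is "at infinity"
    for _ in range(steps):
        # Predict each frame's shift and compare it with what was observed.
        errors = [focal_px * b * w - obs for b, obs in zip(shifts_m, observed_px)]
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * e * focal_px * b for e, b in zip(errors, shifts_m)) / len(errors)
        # Correct the estimate using the discrepancy.
        w -= lr * grad
    return w


if __name__ == "__main__":
    focal_px = 3000.0                                   # hypothetical
    shifts_m = [0.001, -0.002, 0.0015, 0.0025, -0.001]  # hypothetical shake per frame
    # Invented measurements: roughly what a point 1.5 m away would give, plus noise.
    observed_px = [2.1, -3.9, 2.9, 5.05, -2.0]
    w = fit_inverse_depth(shifts_m, observed_px, focal_px)
    print(f"estimated depth: {1 / w:.3f} m")
```

Working in inverse depth keeps the prediction linear in the unknown, so each correction step reliably shrinks the mismatch and the estimate settles at roughly 1.5 m. The learning rate is tuned to these toy numbers and would need adjusting for other scales.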

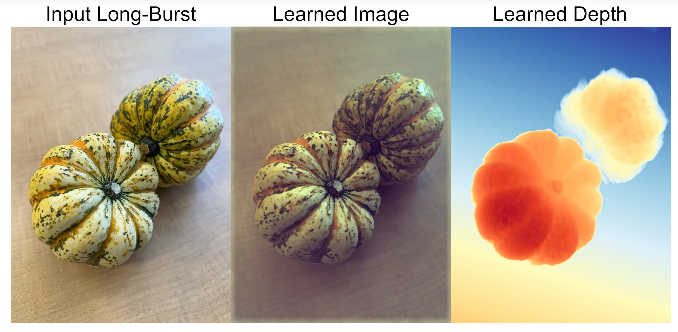

If you look at the examples included in the paper, you can see that it really does work well. Who would have thought that camera shake could actually be useful? If you want to try it out for yourself, you can download the code from GitHub once it finally gets there.

More Information

Shakes on a Plane: Unsupervised Depth Estimation from Unstabilized Photography by Ilya Chugunov, Yuxuan Zhang, Felix Heide

Related Articles

- 3-Sweep - 3D Models From Photos
- Generate 3D Flythroughs from Still Photos
- Seeing Buildings Shake With Software
- Megastereo - Panoramas With Depth


Last Updated (Sunday, 01 January 2023)